1 Seeing inside the black box

Modern data science runs on powerful automation that often outpaces human understanding. This chapter argues that although today's tools can produce impressively usable models, those models can also fail abruptly, drift under new conditions, and amplify historical biases, especially when treated as opaque black boxes. It calls for a shift from mere execution to conceptual clarity and judgment, with particular emphasis on interpretability and accountability in high‑stakes domains. It also frames the book’s purpose: to reconnect today’s tools with the timeless ideas that underlie them so we can see inside the black box.

The text warns against the illusion of understanding created by polished dashboards, LLM‑generated workflows, and AutoML pipelines that hide assumptions about data, objectives, and error. It highlights how different algorithms embody different ways of seeing the world and can diverge for good reasons, so practitioners need to understand not just how a model behaves but why it behaves that way and how it can fail. Foundational literacy is presented as practical leverage across five recurring needs: explaining and defending decisions; diagnosing drift, leakage, and assumption violations; selecting and designing models aligned to data structure and costs (from time series choices to threshold setting); navigating ethical and epistemological commitments (Bayesian vs. frequentist, generative vs. discriminative); and using automation without abdicating judgment.

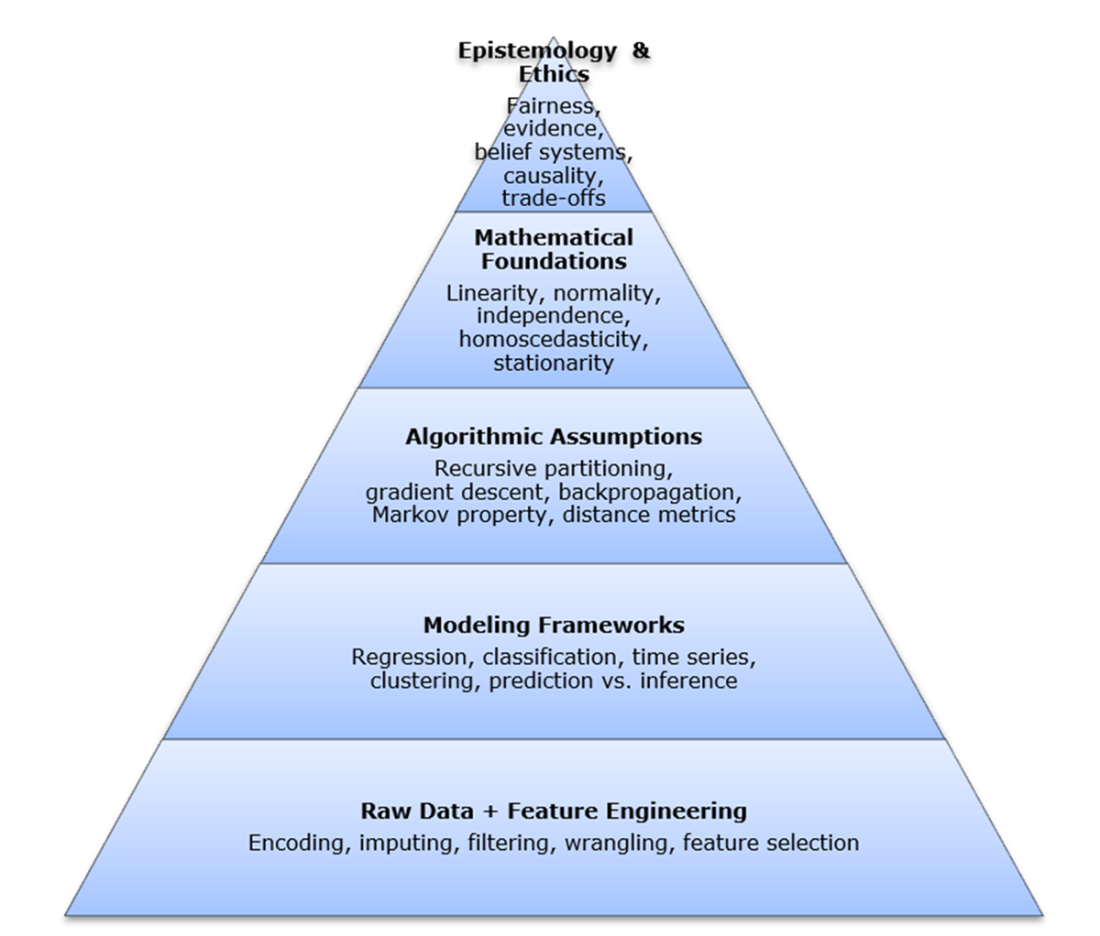

To anchor this literacy, the chapter introduces a conceptual “stack” that links raw data and feature choices to algorithmic assumptions, loss functions, mathematical principles, and overarching beliefs about knowledge and risk. It previews the foundational works (from Bayes, Fisher, and Shannon to Breiman and beyond) that still shape modern systems, and it illustrates the stakes with a loan‑default case in which leakage, misaligned objectives, and shifting populations expose brittle modeling. The book’s approach is not a cookbook but a guide to reasoning: with modest technical fluency and a mindset that probes assumptions, readers will learn to diagnose, adapt, and defend models, understanding why they work, when they fail, and how to align them with real‑world stakes.

The hidden stack of modern intelligence. This conceptual diagram illustrates the layered structure beneath modern intelligence systems, from raw data to philosophical commitments. Each layer represents a critical aspect of data-driven reasoning: how we collect and shape inputs, structure problems, select and apply algorithms, validate results through mathematical principles, and interpret outputs through broader assumptions about knowledge and inference. The remaining chapters do not map one-to-one onto these layers; instead, each foundational work illuminates important elements within or across them, revealing how core ideas continue to shape analytics, often invisibly.

Summary

- Interpretability is non-negotiable in high-stakes systems. When algorithms shape access to care, credit, freedom, or opportunity, technical accuracy alone is not enough. Practitioners must be able to justify model behavior, diagnose failure, and defend outcomes—especially when real lives are on the line.

- Automation without understanding is a recipe for blind trust. Tools like GPT and AutoML can generate usable models in seconds—but often without surfacing the logic beneath them. When assumptions go unchecked or objectives misalign with context, automation amplifies risk, not insight.

- Foundational works are more than history—they're toolkits for thought. The contributions of Bayes, Fisher, Shannon, Breiman, and others remain vital because they teach us how to think: how to reason under uncertainty, estimate responsibly, measure information, and question what algorithms really know.

- Assumptions are everywhere—and rarely visible. Every modeling decision, from threshold setting to variable selection, encodes a belief about the world. Foundational literacy helps practitioners uncover, test, and recalibrate those assumptions before they turn into liabilities.

- Modern models rest on layered conceptual scaffolding. This book introduces the “hidden stack” of modern intelligence, from raw data to philosophical stance, as a way to frame what lies beneath the surface. While each of the following chapters centers on a single foundational work, together they illuminate how deep principles continue to shape every layer of today’s analytical pipeline.

- Historical literacy is your best defense against brittle systems. In a field evolving faster than ever, foundational knowledge offers durability. It helps practitioners see beyond the hype, question defaults, and build systems that are not only powerful but also principled.

- The talent gap is real—and dangerous. As demand for data-driven systems has surged, the supply of deeply grounded practitioners has lagged behind. Too often, models are built by those trained to execute workflows but not to interrogate their assumptions, limitations, or risks. This mismatch leads to brittle systems, ethical blind spots, and costly surprises. This book is a direct response to that gap: it equips readers not just with technical fluency, but with the judgment, historical awareness, and conceptual depth that today’s data science demands.

FAQ

What does “seeing inside the black box” mean in this chapter?

It means moving beyond running code to understanding the assumptions, trade-offs, and reasoning that produce a model’s outputs. The chapter argues that real skill is not building models quickly, but knowing why they behave as they do, when they fail, and how to explain and fix them—especially when decisions affect people’s lives.

What is the “illusion of understanding” created by modern tools?

Tools like LLMs and AutoML can generate polished workflows that look correct, but they can mask mismatched assumptions, poorly chosen metrics, and hidden biases. You can end up trusting one black box to configure another, without knowing whether the result aligns with your data or goals.

Why do timeless foundations still matter, and which works anchor the book?

Modern algorithms rest on older ideas about uncertainty, evidence, and optimization. The book revisits foundational works—Bayes, Fisher, Neyman-Pearson, Shannon, Bellman, Raiffa & Schlaifer, Vapnik, Breiman, MacKay, LeCun/Bengio/Hinton, Vaswani et al., Kaplan et al.—to show how their concepts still power today’s models and how misunderstanding them leads to brittle systems.

What is the hidden stack of modern intelligence?

It’s a conceptual stack beneath every prediction, spanning layers such as raw data and feature engineering, modeling frameworks, algorithmic assumptions, mathematical foundations, and epistemology and ethics. Missteps at any layer—bad inputs, wrong objectives, unchecked assumptions—can distort outcomes, even when outputs look plausible.

In what areas does foundational understanding make the biggest difference?

- Interpretability and accountability: explaining and defending decisions.

- Diagnostic power: detecting drift, leakage, overfitting, and assumption violations.

- Model selection and design: matching methods to data structure and goals.

- Ethical and epistemological insight: recognizing how models encode values and beliefs.

- Beyond automation: using tools like LLMs/AutoML without abdicating judgment.

What failures commonly undermine models in the wild, and how do we diagnose them?

Frequent issues include data drift, data leakage, overfitting, unhandled outliers or missing values, and violated assumptions (e.g., stationarity, IID, homoscedasticity). Diagnose them with exploratory data analysis (EDA), careful preprocessing, residual checks, calibration checks, ongoing monitoring, and tests aligned with model assumptions.
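
Drift monitoring can be made concrete with a single number per feature. The sketch below assumes the Population Stability Index (PSI), one common drift score that the chapter itself does not prescribe; the function name, the equal-width binning on the reference sample, and the toy data are illustrative assumptions, not the book's method.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """Compare a reference sample with a new sample of one numeric feature.
    Larger values suggest the feature's distribution has shifted."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clip into the reference range so out-of-range values land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: a training-time sample vs. a shifted production sample.
train = list(range(100))
prod = [v + 30 for v in train]
print(round(population_stability_index(train, train), 3))  # 0.0 for identical samples
print(round(population_stability_index(train, prod), 3))   # large for the shifted sample
```

A commonly quoted rule of thumb treats a PSI above roughly 0.25 as a major shift, but such cut-offs are conventions rather than laws; a high score says the inputs changed, not whether the model's errors did.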

How should I choose and tune models responsibly?

Match the method to the data's structure and the task's purpose: use interpretable models (e.g., logistic regression) when explanation matters, and check that their assumptions are reasonable; use ensembles (e.g., random forests) to capture nonlinear interactions, while planning for explainability. In time series, compare simpler smoothing methods with ARIMA by inspecting autocorrelation and residuals. Set classification thresholds from cost trade-offs, using ROC curves and confusion matrices, rather than a default 0.5.
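
To see why a default 0.5 can be the wrong cut-off, here is a minimal sketch of choosing a threshold by expected misclassification cost. It assumes a missed default (false negative) costs ten times a wrongly declined applicant (false positive); those costs, the toy labels and scores, and the helper names `expected_cost` and `best_threshold` are hypothetical, chosen only for illustration.

```python
def expected_cost(y_true, scores, threshold, cost_fp, cost_fn):
    """Total cost of flagging every case whose score is >= threshold."""
    fp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 0)
    fn = sum(1 for y, s in zip(y_true, scores) if s < threshold and y == 1)
    return fp * cost_fp + fn * cost_fn


def best_threshold(y_true, scores, cost_fp, cost_fn):
    """Try each observed score as a cut-off (plus 'flag nothing') and keep the cheapest."""
    candidates = sorted(set(scores)) + [float("inf")]
    return min(candidates, key=lambda t: expected_cost(y_true, scores, t, cost_fp, cost_fn))


# Hypothetical validation data: 1 = default, 0 = repaid; scores are model probabilities.
y_true = [0, 0, 0, 0, 1, 0, 1, 1]
scores = [0.05, 0.10, 0.20, 0.30, 0.35, 0.40, 0.60, 0.80]

# Assumed costs: a missed default costs 10 times a wrongly declined applicant.
t = best_threshold(y_true, scores, cost_fp=1, cost_fn=10)
print(t, expected_cost(y_true, scores, t, 1, 10))      # 0.35 1
print(0.5, expected_cost(y_true, scores, 0.5, 1, 10))  # 0.5 10
```

On this toy data the cheapest cut-off is 0.35, while 0.5 misses a default and costs ten times as much. Trying only the observed scores is enough, because any threshold between two adjacent scores produces the same confusion matrix; in practice the sweep would run on held-out data, and the ROC curve shows the same trade-off without committing to specific costs.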

How do algorithms embed assumptions, values, and ethical choices?

Loss functions encode priorities and penalties; features like zip code can proxy sensitive attributes; class imbalance can hide harm. Beyond fairness, modeling choices reflect beliefs about what counts as evidence (Bayesian vs frequentist) and what is knowable (generative vs discriminative). Foundational literacy helps surface and justify these commitments.
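
Class imbalance is the simplest of these effects to demonstrate. In this minimal sketch (hypothetical data: 5 positive cases, such as defaults, among 100), a "model" that never flags anyone scores 95% accuracy while catching none of the cases that matter; the helper names are illustrative.

```python
def accuracy(y_true, y_pred):
    """Share of all cases predicted correctly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true, y_pred, positive=1):
    """Share of actual positives the model catches."""
    hits = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    total = sum(1 for t in y_true if t == positive)
    return hits / total if total else float("nan")


# Hypothetical data: 5 positives among 100 cases.
y_true = [1] * 5 + [0] * 95
always_negative = [0] * 100  # a trivial classifier that never flags anyone

print(accuracy(y_true, always_negative))  # 0.95
print(recall(y_true, always_negative))    # 0.0
```

Headline accuracy rewards ignoring the minority class here, which is why metrics such as recall, or a cost-weighted loss, are needed when the rare outcome is the one that carries the harm.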

What background do I need to get the most from this book?

- Modeling basics (classification, estimation, and evaluation metrics such as accuracy, RMSE, and AUC).

- Core statistics (probability rules, common distributions, confidence intervals, standard errors).

- Math fluency (functions, notation, derivatives, optimization concepts).

- Exposure to Monte Carlo methods and Markov chains.

- A mindset that prioritizes conceptual understanding over rote execution.

How will the rest of the book teach these ideas?

Each chapter centers on one foundational work with an origin story, core insight, modern manifestations, and common misuses. Mathematics is introduced gently and only as needed. The goal is conceptual clarity—seeing how timeless ideas shape today’s tools, and using that insight to build, diagnose, and explain models responsibly. A special emphasis is placed on calibrated probabilities: scores are beliefs to be updated, not certainties.
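
The idea that scores are beliefs to be checked can be made concrete with a reliability table: group predictions by predicted probability and compare each group's average prediction with how often the event actually occurred. This minimal sketch assumes equal-width bins and the expected calibration error (ECE) as a one-number summary; neither choice comes from the chapter, and the scores below are hypothetical.

```python
def reliability_table(probs, outcomes, bins=5):
    """For each equal-width probability bin: (mean predicted probability,
    observed event rate, number of cases). Empty bins are skipped."""
    groups = [[] for _ in range(bins)]
    for p, y in zip(probs, outcomes):
        idx = min(int(p * bins), bins - 1)  # p == 1.0 falls into the top bin
        groups[idx].append((p, y))
    return [
        (sum(p for p, _ in g) / len(g), sum(y for _, y in g) / len(g), len(g))
        for g in groups
        if g
    ]


def expected_calibration_error(probs, outcomes, bins=5):
    """Case-weighted average gap between predicted probability and observed rate."""
    n = len(probs)
    return sum(
        count / n * abs(mean_p - rate)
        for mean_p, rate, count in reliability_table(probs, outcomes, bins)
    )


# Hypothetical scores: the 0.9 group has the event only 60% of the time (overconfident),
# while the 0.2 group has it 20% of the time (well calibrated).
probs = [0.9] * 10 + [0.2] * 10
outcomes = [1] * 6 + [0] * 4 + [1] * 2 + [0] * 8

for mean_p, rate, count in reliability_table(probs, outcomes):
    print(f"predicted {mean_p:.2f}  observed {rate:.2f}  n={count}")
print(f"ECE = {expected_calibration_error(probs, outcomes):.2f}")
```

The overconfident group shows a gap of about 0.3 between belief and outcome, a signal to recalibrate or revisit the model before treating its scores as probabilities.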

Timeless Algorithms ebook for free
