3 Failure tolerance

This chapter presents a clear, practical way to think about failure in distributed systems with the goal of ensuring failure tolerance—keeping system behavior well-defined even when things go wrong. It unifies terminology by simply using “failure,” and frames the discussion in two complementary parts: theory (what failures are and how they can be reasoned about) and practice (how to detect and mitigate them in real systems). A central theme is that end-to-end correctness demands complete processes: in the presence of failures, outcomes should be observably equivalent either to full success or to no effect at all.

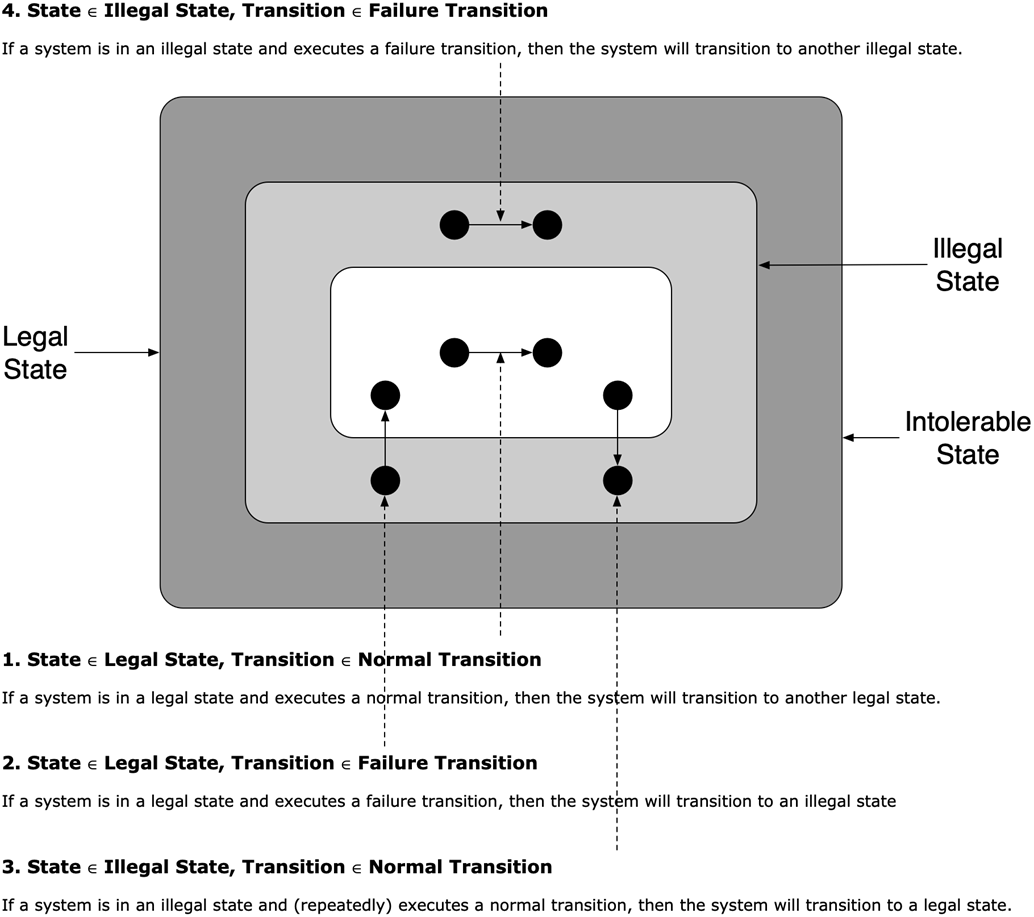

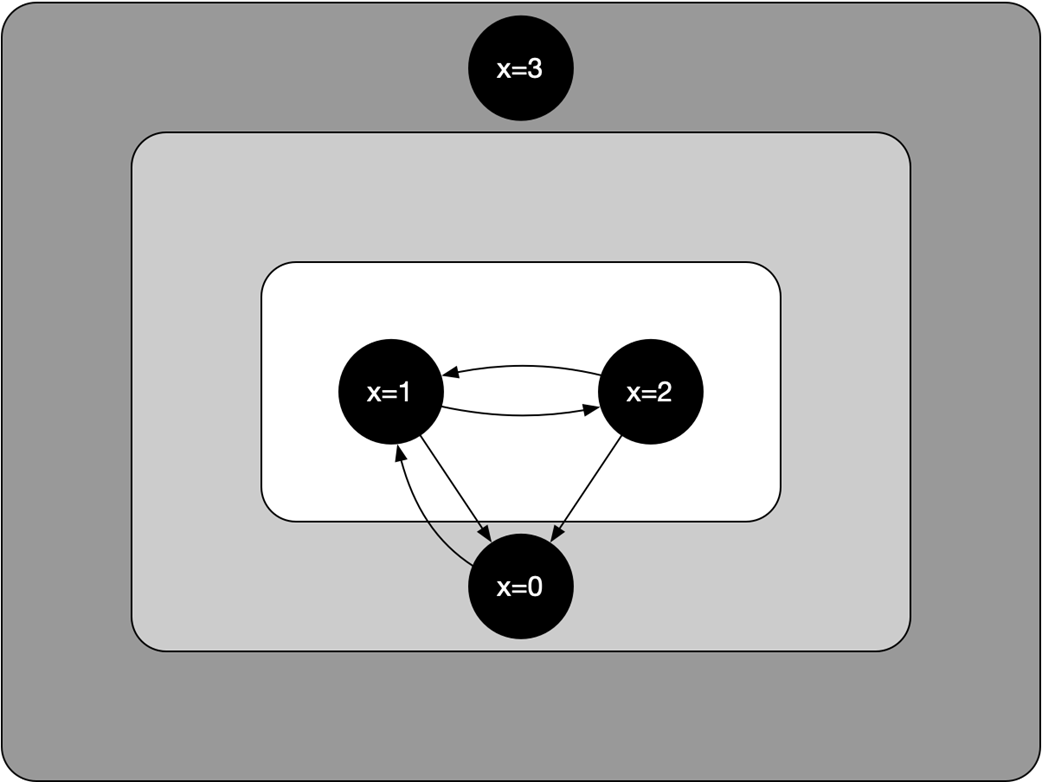

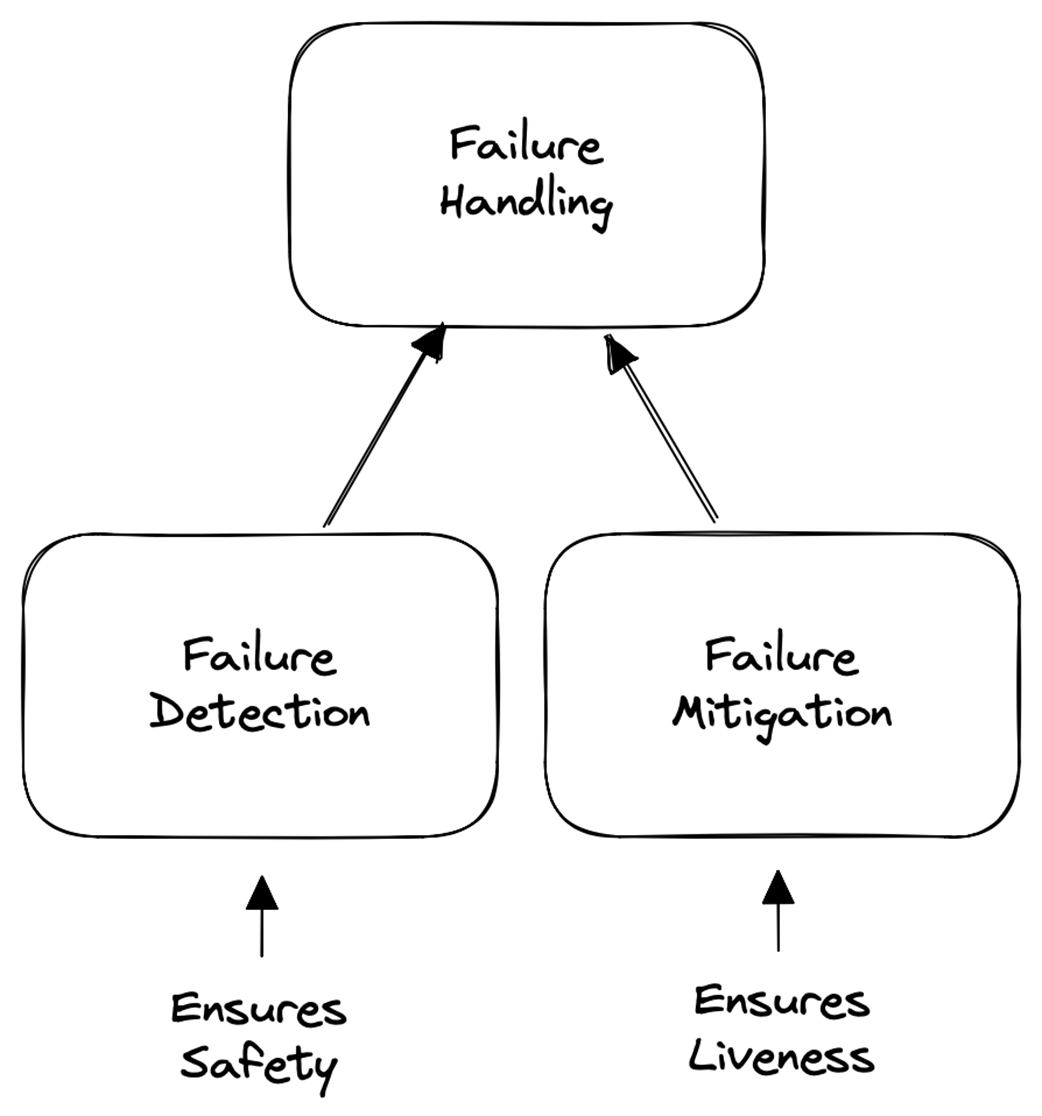

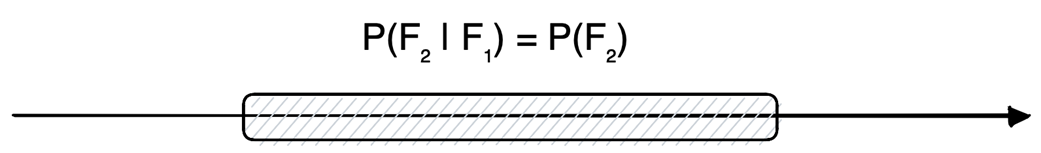

The theoretical core defines failure as an unwanted but possible transition that moves a system from a legal (good) state to an illegal (bad) state, with intolerable states excluded from tolerance by definition. Systems evolve via normal and failure transitions, and recovery is the sequence that returns from illegal to legal states. Correctness is captured by safety (nothing bad happens) and liveness (something good eventually happens), leading to a taxonomy of failure tolerance: masking (both safety and liveness), non-masking (liveness only), fail-safe (safety only), and none. Practical limits (e.g., trade-offs captured by impossibility results) mean full masking is often infeasible. The chapter also links failure detection to maintaining safety (halt dangerous actions) and mitigation to restoring liveness (resume progress). It broadens “failure detectors” beyond crash suspicion to any predicate that witnesses an illegal state, while noting that timeout-based detectors cannot be both complete and accurate in asynchronous, unreliable networks.
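The limitation of timeout-based detectors can be illustrated with a small sketch. This is not the chapter's own code: `TimeoutDetector`, its methods, and the timestamps are hypothetical names and values chosen for illustration, and time is passed in explicitly so the example is deterministic.

```python
from dataclasses import dataclass, field


@dataclass
class TimeoutDetector:
    """Hypothetical detector: suspects a peer when no heartbeat
    has arrived within `timeout` seconds."""
    timeout: float
    last_seen: dict[str, float] = field(default_factory=dict)

    def heartbeat(self, peer: str, now: float) -> None:
        # Record the arrival time of the latest heartbeat from `peer`.
        self.last_seen[peer] = now

    def suspects(self, peer: str, now: float) -> bool:
        # A peer that was never heard from is treated as suspected.
        last = self.last_seen.get(peer)
        return last is None or now - last > self.timeout


detector = TimeoutDetector(timeout=2.0)
detector.heartbeat("provider", now=0.0)

# Assumed scenario: the provider is alive, but the network delays its
# next heartbeat, which only arrives at t=5.0.
suspected_at_3 = detector.suspects("provider", now=3.0)  # True: a false suspicion
detector.heartbeat("provider", now=5.0)
suspected_at_5 = detector.suspects("provider", now=5.5)  # False: suspicion withdrawn
```

A shorter timeout catches real crashes sooner but raises more false suspicions; a longer one does the opposite. No fixed value removes both kinds of error when message delays are unbounded.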

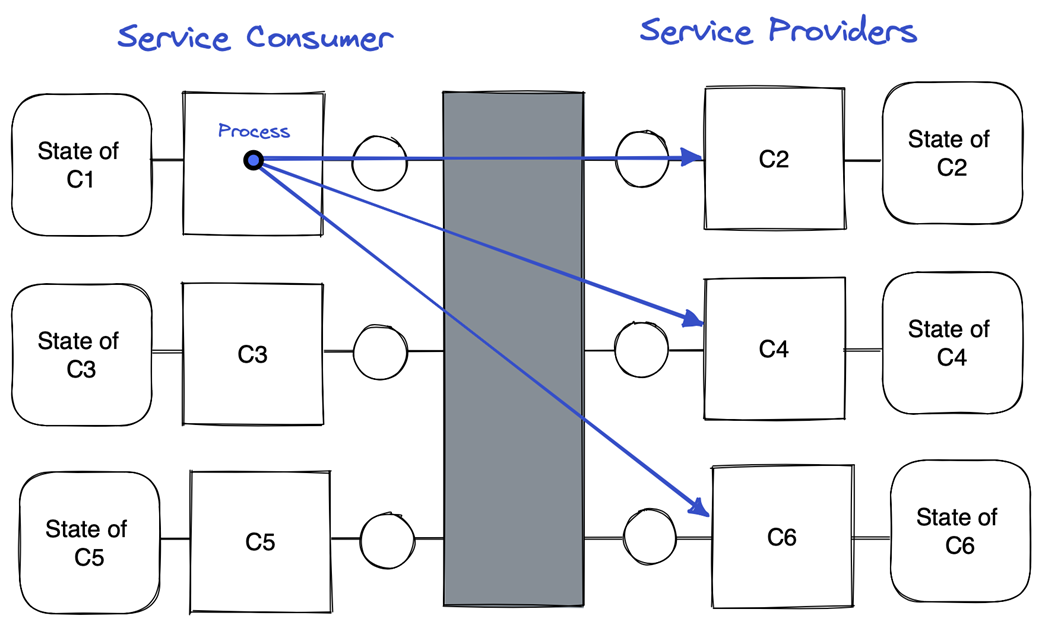

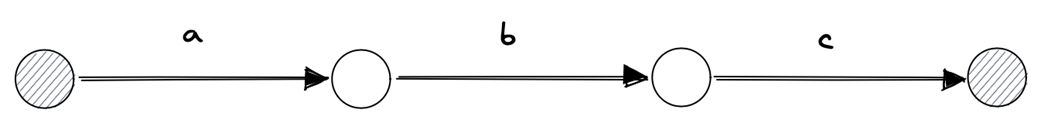

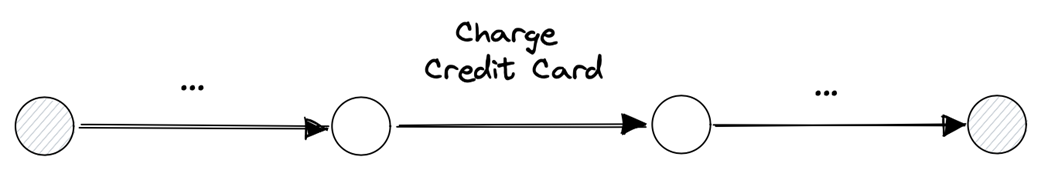

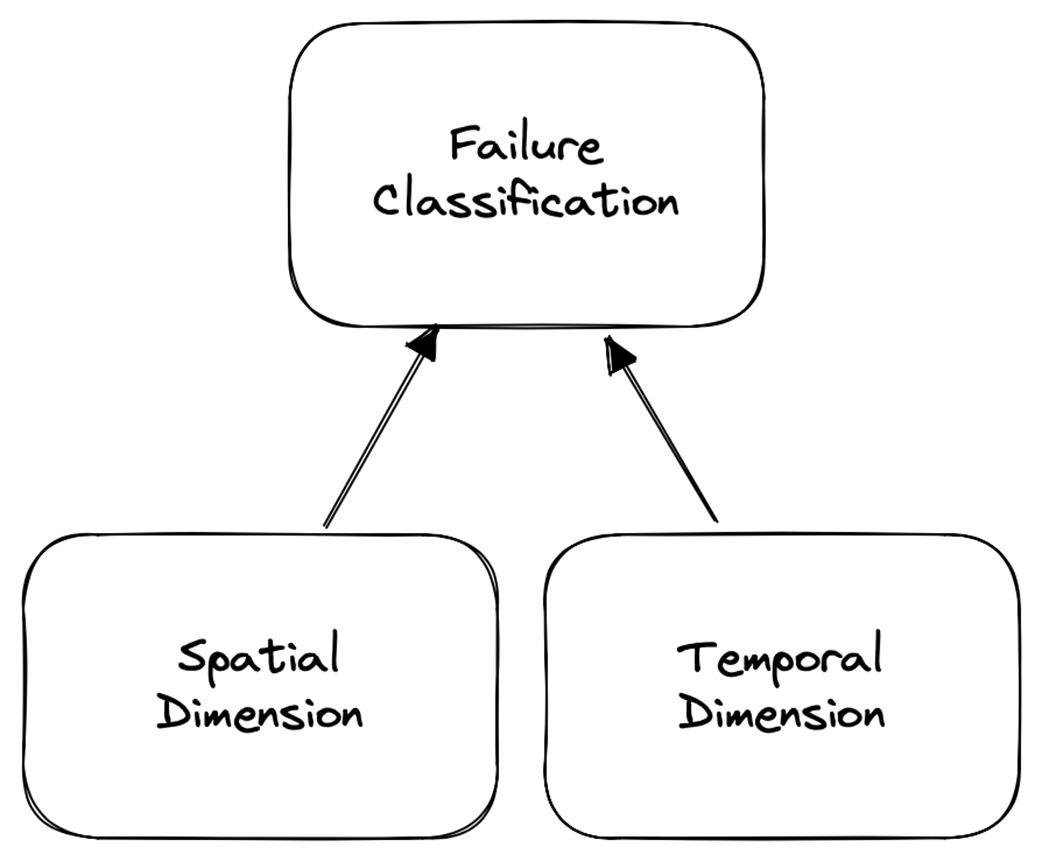

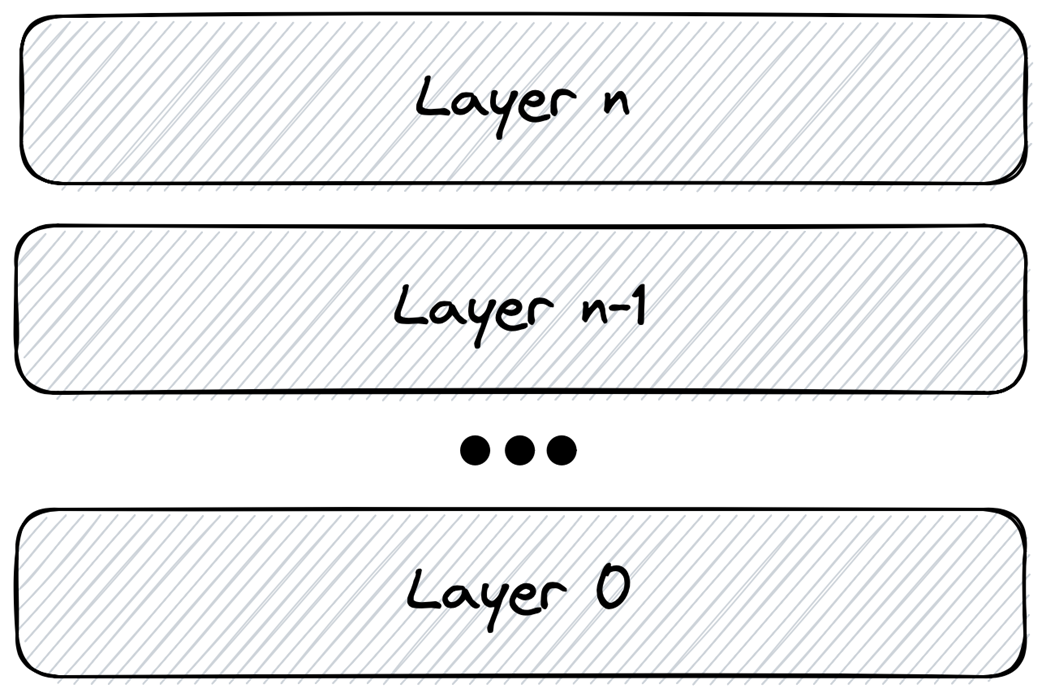

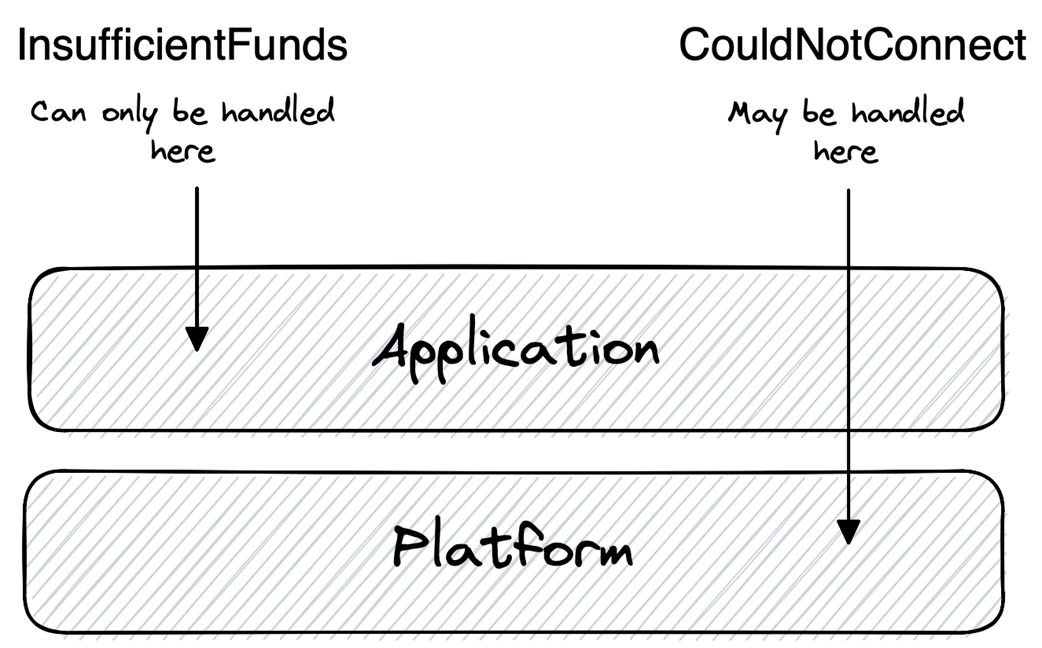

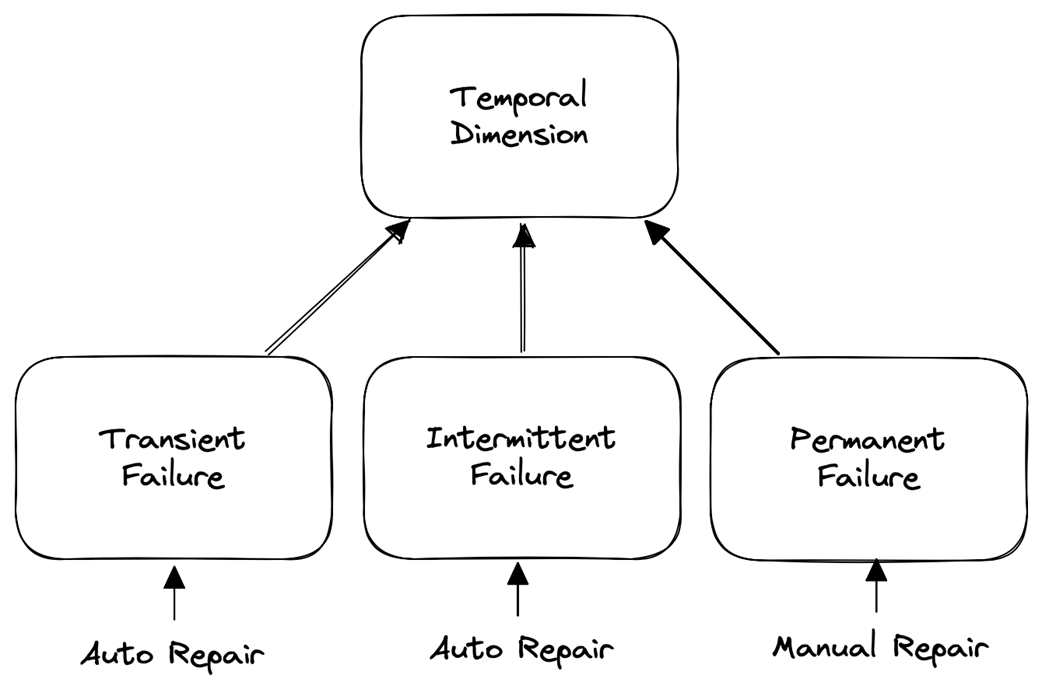

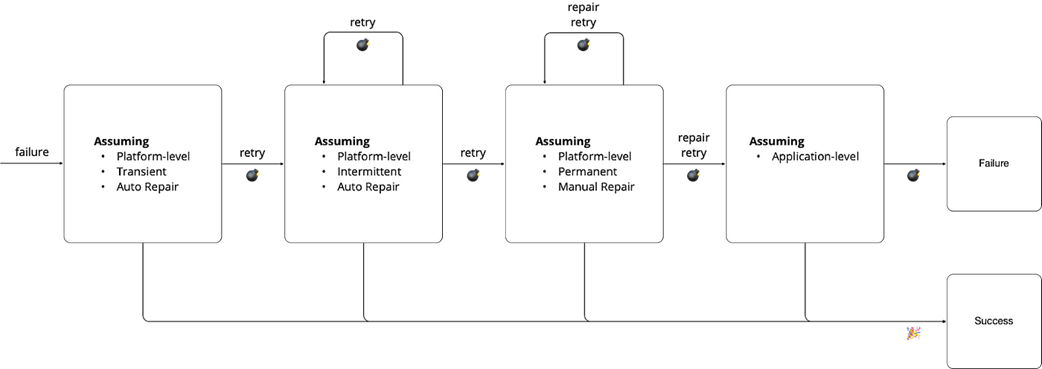

The practical part adopts a service-orchestration model where a consumer executes a multi-step process against providers, aiming to avoid partial application. Mitigation follows two axes. Spatially, per the end-to-end argument, handle failures at the lowest layer able to do so correctly and completely: application-level failures (e.g., business rule violations) are addressed with backward recovery and compensation; platform-level failures (e.g., connectivity) are handled with forward recovery such as retries. Temporally, failures are classified as transient (quick auto-repair), intermittent (elevated likelihood but auto-repair), or permanent (require manual repair), guiding tactics like immediate retry, backoff-based retries, or suspending until fixed and then resuming. An ideal strategy first attempts platform mitigation (immediate retry, then backoff, then suspend-and-resume), escalates to application-level compensation if needed, and, if compensation itself fails, escalates to human operators—always preserving safety, restoring liveness when possible, and ensuring effects like “charge exactly once” in workflows such as e-commerce checkout.
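The escalation order described above might be sketched as follows. This is a simplified illustration under stated assumptions, not the book's implementation: `PlatformFailure`, `ApplicationFailure`, `run_step`, and the callbacks are hypothetical names, and the suspend-and-resume tier is omitted for brevity.

```python
import time
from typing import Callable


class PlatformFailure(Exception):
    """Hypothetical: e.g. a connectivity problem; a retry may help."""


class ApplicationFailure(Exception):
    """Hypothetical: e.g. a business rule violation; a retry will not help."""


def run_step(step: Callable[[], None],
             compensate: Callable[[], None],
             notify_operator: Callable[[str], None],
             retries: int = 3,
             base_delay: float = 0.1,
             sleep: Callable[[float], None] = time.sleep) -> str:
    """Return 'completed', 'compensated', or 'escalated'."""
    for attempt in range(retries + 1):
        try:
            step()
            return "completed"          # forward recovery: equivalent to success
        except ApplicationFailure:
            break                       # retrying cannot help, go to compensation
        except PlatformFailure:
            if attempt < retries:
                # The first retry is immediate; later retries back off.
                sleep(base_delay * (2 ** attempt - 1))
    try:
        compensate()
        return "compensated"            # backward recovery: equivalent to no effect
    except Exception as exc:
        notify_operator(f"compensation failed: {exc}")
        return "escalated"              # handed over to a human operator


calls = {"n": 0}


def flaky_charge() -> None:
    # Assumed provider call that fails twice with a platform failure.
    calls["n"] += 1
    if calls["n"] < 3:
        raise PlatformFailure("could not connect")


outcome = run_step(flaky_charge, compensate=lambda: None,
                   notify_operator=print, sleep=lambda seconds: None)
```

Here `outcome` is `"completed"` after three attempts. Retrying a charge is only safe if the provider treats repeated requests as the same request; the sketch assumes that property rather than implementing it.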

System states and state transitions

An illustration of the states and state transitions defined in Listing 3.1

Service orchestration

A process as a sequence of steps

E-commerce process

Failure handling

Failure classification

Thinking in layers

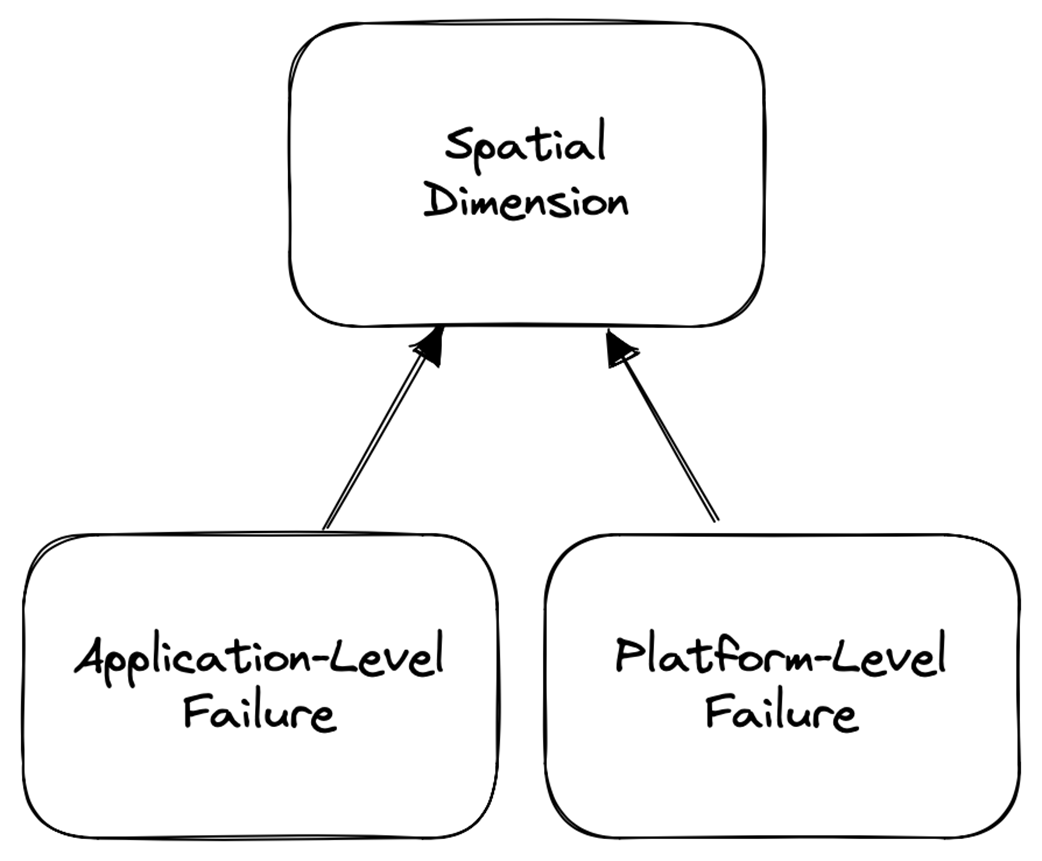

Spatial classification

Application-level versus platform-level failure

Temporal dimension

Transient failure

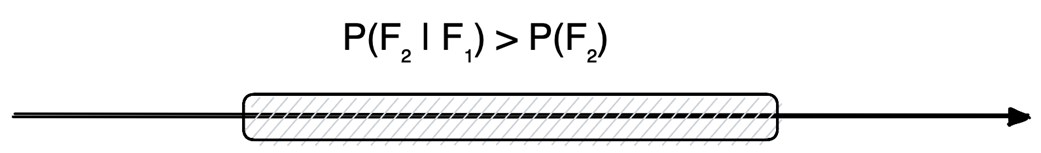

Intermittent failure

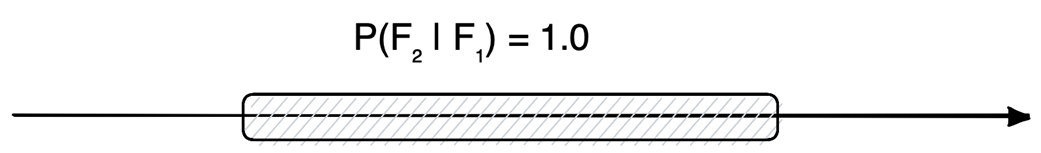

Permanent failure

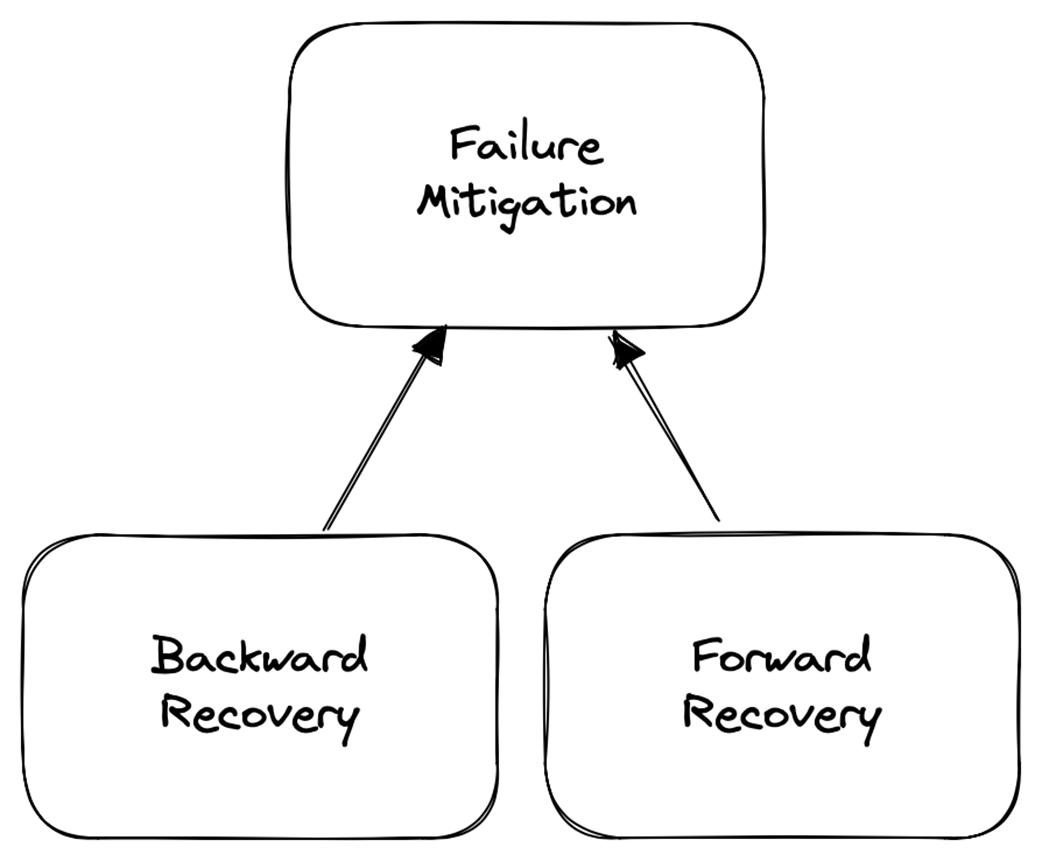

Failure mitigation

Outline of failure-handling strategy in an orchestration scenario

Summary

- Failure tolerance is the goal of failure handling.

- Failure handling involves two key steps: failure detection and failure mitigation.

- Failures can be classified across two dimensions: spatial and temporal.

- Spatially, failures are classified as application-level or platform-level.

- Temporally, failures are classified as transient, intermittent, or permanent.

- Different failure tolerance strategies, such as masking, non-masking, and fail-safe, address the safety and liveness of the system.

- Failure detection and mitigation strategies vary based on the classification of the failure and the desired class of failure tolerance.

FAQ

What is “failure” and what is “failure tolerance” in this chapter?

Failure is an unwanted but possible state transition that moves a system from a good (legal) state to a bad (illegal) state. Failure tolerance is the system’s ability to keep behaving in a well-defined way even when it is in a bad state.

How are system states and transitions modeled?

The model uses three states: legal (good), illegal (bad), and intolerable (“everything is lost”). Transitions are of two kinds: normal transitions (intended behavior) and failure transitions (unwanted). Moving from an illegal state back to a legal state is failure recovery.

What are safety and liveness, and why do they matter for failure tolerance?

Safety means nothing bad ever happens; liveness means something good eventually happens. In the absence of failures, both should hold. Under failure, different approaches trade off safety and liveness to deliver different types of failure tolerance.

What are masking, non-masking, and fail-safe failure tolerance?

- Masking: guarantees both safety and liveness under failure (failure is transparent). Often costly or impossible (e.g., CAP trade-offs).

- Non-masking: guarantees liveness but not safety (system keeps making progress, may make mistakes temporarily; e.g., a queue delivers out of order during failure).

- Fail-safe: guarantees safety but not liveness (system avoids mistakes by halting progress; e.g., a queue stops delivering to preserve order).
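The queue example from the list above can be made concrete with a short sketch. The function names and the use of integer sequence numbers are assumptions made for illustration; the source only describes the behavior in words.

```python
def deliver_fail_safe(arrived: list[int], next_expected: int = 0) -> list[int]:
    """Deliver only the in-order prefix and halt at the first gap.
    Preserves safety (ordering) at the cost of liveness (progress)."""
    pending = set(arrived)
    delivered = []
    while next_expected in pending:
        delivered.append(next_expected)
        next_expected += 1
    return delivered


def deliver_non_masking(arrived: list[int]) -> list[int]:
    """Deliver in arrival order, even if out of sequence.
    Preserves liveness (progress) at the cost of safety (ordering)."""
    return list(arrived)


# Assumed failure: message 2 is delayed and arrives after message 3.
during_failure = [0, 1, 3]
after_repair = [0, 1, 3, 2]

fail_safe_during = deliver_fail_safe(during_failure)       # [0, 1]
non_masking_during = deliver_non_masking(during_failure)   # [0, 1, 3]
fail_safe_after = deliver_fail_safe(after_repair)          # [0, 1, 2, 3]
non_masking_after = deliver_non_masking(after_repair)      # [0, 1, 3, 2]
```

The fail-safe queue stalls at the gap and resumes once the missing message arrives; the non-masking queue never stalls but exposes consumers to an ordering violation.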

How does the end-to-end argument guide where to handle failures?

Handle failures in the lowest layer (from the top down) that can correctly and completely detect and mitigate them. If the lowest adequate layer is the application, it’s an application-level failure (e.g., InsufficientFunds). If the platform can fully handle it, it’s platform-level (e.g., CouldNotConnect via retries).

What’s the difference between transient, intermittent, and permanent failures?

- Transient: “come and go,” auto-repair quickly; a second failure is no more likely than usual (retry soon).

- Intermittent: “linger,” auto-repair after some delay; a second failure is more likely in the short term (retry with backoff).

- Permanent: persist until fixed; a second failure is certain without manual intervention (suspend, repair, then resume).
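One way to turn this classification into a retry schedule is sketched below. The enum, the function name, and the default values (four attempts, a one-second base delay, doubling) are illustrative assumptions, not values taken from the chapter.

```python
from enum import Enum


class Temporal(Enum):
    TRANSIENT = "transient"
    INTERMITTENT = "intermittent"
    PERMANENT = "permanent"


def retry_delays(kind: Temporal, attempts: int = 4,
                 base: float = 1.0, factor: float = 2.0) -> list[float]:
    """Return the wait (in seconds) before each automatic retry.
    An empty list means: do not retry automatically; suspend until
    the cause is repaired, then resume."""
    if kind is Temporal.TRANSIENT:
        return [0.0] * attempts                              # retry right away
    if kind is Temporal.INTERMITTENT:
        return [base * factor ** i for i in range(attempts)]  # exponential backoff
    return []                                                # permanent: suspend


transient = retry_delays(Temporal.TRANSIENT, attempts=3)   # [0.0, 0.0, 0.0]
intermittent = retry_delays(Temporal.INTERMITTENT)         # [1.0, 2.0, 4.0, 8.0]
permanent = retry_delays(Temporal.PERMANENT)               # []
```

In practice the class of a failure is rarely known up front, which is why a strategy typically tries the cheaper tactics first and moves on only when they are exhausted.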

How should failure handling be structured in practice?

Two steps: failure detection (recognize the failure) and failure mitigation (recover). In service orchestration, aim for complete execution of a process; on failure, end in either a forward-recovered outcome (equivalent to success) or a backward-recovered outcome (equivalent to no-op).

What is a failure detector, and why can’t it be both complete and accurate in asynchronous systems?

A failure detector is a “witness” (predicate) that a failure occurred, often implemented via timeouts over heartbeats or requests (pull or push). In partially synchronous/asynchronous systems with unreliable networks, messages can be delayed or lost, so a detector cannot be both complete (no misses) and accurate (no false suspicions) at the same time.

When should I use backward vs. forward recovery?

- Backward recovery (compensation): move the system back to an initial legal state; typical at the application layer (e.g., refund a charge if checkout fails). Doesn’t require fixing underlying causes.

- Forward recovery (retries): move toward the intended final legal state; typical at the platform layer (e.g., immediate retry, backoff, suspend-and-resume). Requires addressing the underlying cause.
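Backward recovery across a multi-step process might look like the following sketch, in which each completed step registers an undo action that runs in reverse order if a later step fails. `run_process` and the step functions are hypothetical names; the checkout steps are an assumed example in the spirit of the refund case above.

```python
from typing import Callable

# Each step is a pair: (action, compensation that undoes the action).
Step = tuple[Callable[[], None], Callable[[], None]]


def run_process(steps: list[Step]) -> str:
    """Run steps in order. On failure, compensate the completed steps
    in reverse so the outcome is equivalent to no effect at all."""
    completed: list[Callable[[], None]] = []
    for action, compensation in steps:
        try:
            action()
            completed.append(compensation)
        except Exception:
            for undo in reversed(completed):
                undo()
            return "rolled back"
    return "completed"


log: list[str] = []


def charge() -> None:
    log.append("charge")


def refund() -> None:
    log.append("refund")


def ship() -> None:
    # Assumed application-level failure in the second step.
    raise RuntimeError("out of stock")


result = run_process([(charge, refund), (ship, lambda: None)])
```

After this run, `result` is `"rolled back"` and `log` is `["charge", "refund"]`. The sketch assumes compensations themselves succeed; when they do not, the failure has to be escalated further.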

Think Distributed Systems ebook for free
