17 Deploying Per-Node Workloads with DaemonSets

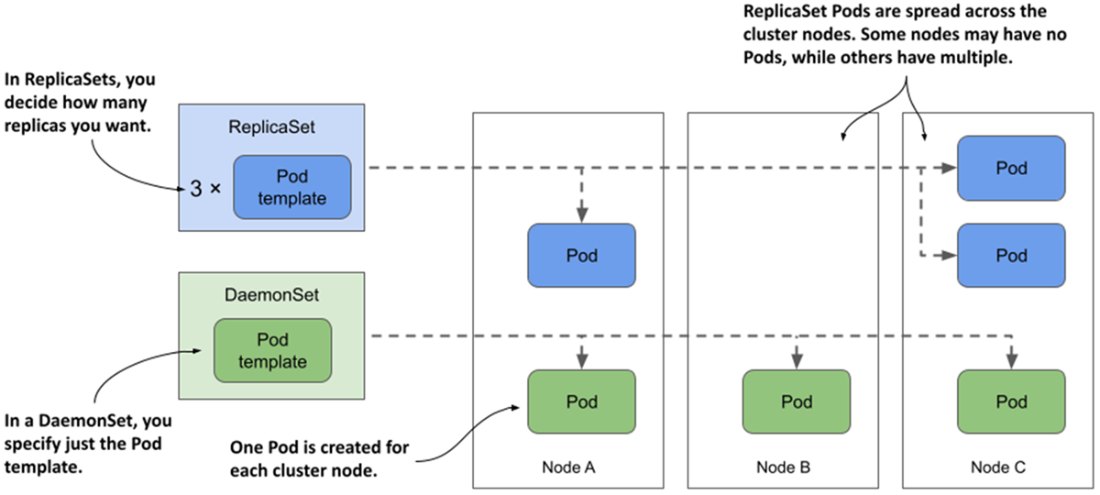

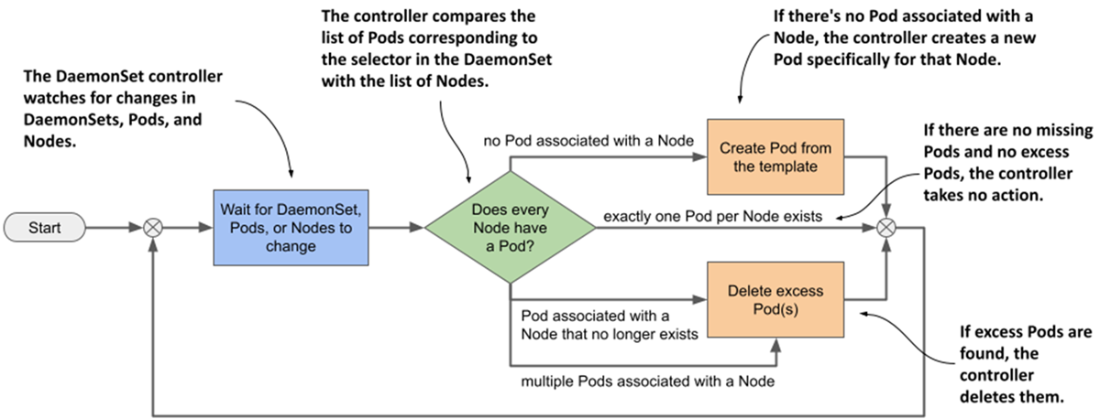

DaemonSets run exactly one Pod replica on each eligible node, making them ideal for per-node infrastructure such as log collectors, metrics agents, kube-proxy, and CNI plugins. Unlike Deployments, you don’t specify a replica count; the controller reconciles against the node list, creating a Pod on every eligible node that lacks one, deleting surplus Pods, and reacting as nodes join or leave the cluster. You can restrict placement to a subset of nodes with a node selector and, because control-plane nodes are usually tainted, add tolerations if the daemon must run there. This unified, Kubernetes-native approach replaces ad hoc node-level installation methods, so you manage system daemons the same way as application workloads.
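As a minimal sketch of these ideas, the manifest below defines a DaemonSet with a node selector and a control-plane toleration. The name `node-agent`, the `app` label, and the image are hypothetical placeholders, and the taint key assumes the convention used by kubeadm-style clusters; check the taints on your own nodes before relying on it.

```yaml
# Hypothetical example: names, labels, and image are placeholders.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-agent
spec:
  # Note: there is no replicas field; the node list determines the Pod count.
  selector:
    matchLabels:
      app: node-agent
  template:
    metadata:
      labels:
        app: node-agent
    spec:
      # Only nodes carrying this label are eligible.
      nodeSelector:
        kubernetes.io/os: linux
      # Assumed taint key; allows scheduling onto tainted control-plane nodes.
      tolerations:
      - key: node-role.kubernetes.io/control-plane
        operator: Exists
        effect: NoSchedule
      containers:
      - name: agent
        image: example.com/node-agent:1.0
```

Removing the `tolerations` block would keep the daemon off any node that carries the matching taint.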

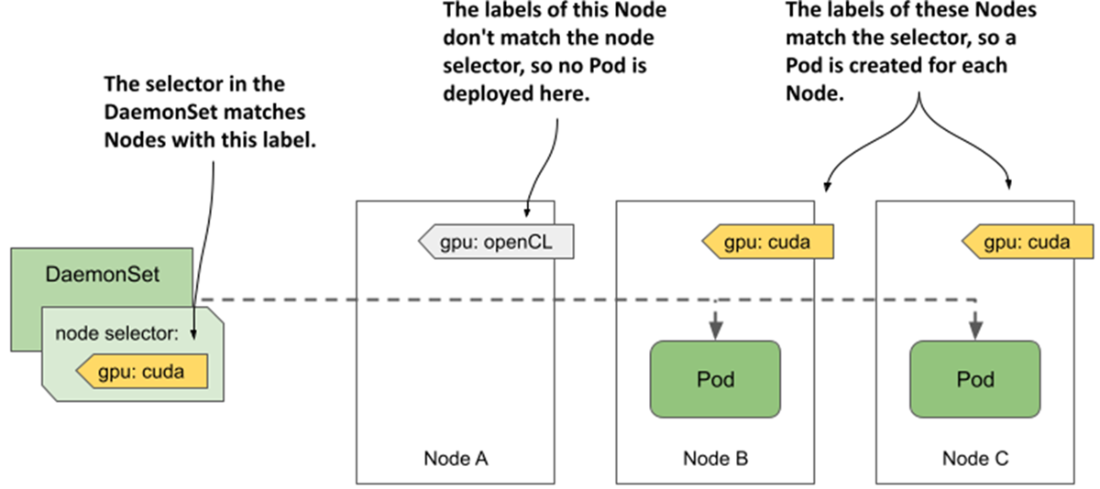

A DaemonSet defines a label selector and a Pod template; the controller injects nodeAffinity into each Pod so the scheduler targets a specific node. Its status reports node-oriented counts (desired, current, ready, available, updated, misscheduled) rather than plain Pod counts, because during an update an old and a new Pod may briefly run side by side on the same node. You can dynamically move nodes into or out of scope by changing node labels, use the standard labels (kubernetes.io/arch, kubernetes.io/os) to target nodes in heterogeneous clusters, or run a separate DaemonSet per architecture when the image isn't multi-arch. Two update strategies are available. RollingUpdate (by default maxSurge=0 and maxUnavailable=1, paced by minReadySeconds) replaces Pods safely, one node at a time. OnDelete updates a Pod only after you delete it manually, which gives you full control over rollouts of cluster-critical daemons. Deleting the DaemonSet removes its Pods.
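The update settings mentioned above could be written out explicitly as in the following sketch. The values shown for `maxSurge` and `maxUnavailable` are the defaults named in the text; the `minReadySeconds` value, the object name, the label, and the image are illustrative assumptions.

```yaml
# Hypothetical example: names, image, and minReadySeconds value are placeholders.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-agent
spec:
  # A new Pod must stay ready this long before it counts as available.
  minReadySeconds: 30
  updateStrategy:
    type: RollingUpdate        # set to OnDelete for manual rollouts
    rollingUpdate:
      maxSurge: 0              # never run old and new Pods together on a node
      maxUnavailable: 1        # replace the Pod on one node at a time
  selector:
    matchLabels:
      app: node-agent
  template:
    metadata:
      labels:
        app: node-agent
    spec:
      # Standard node label used to target one CPU architecture.
      nodeSelector:
        kubernetes.io/arch: arm64
      containers:
      - name: agent
        image: example.com/node-agent:1.0-arm64
```

With `type: OnDelete`, the `rollingUpdate` block is not used and each Pod is replaced only after you delete it.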

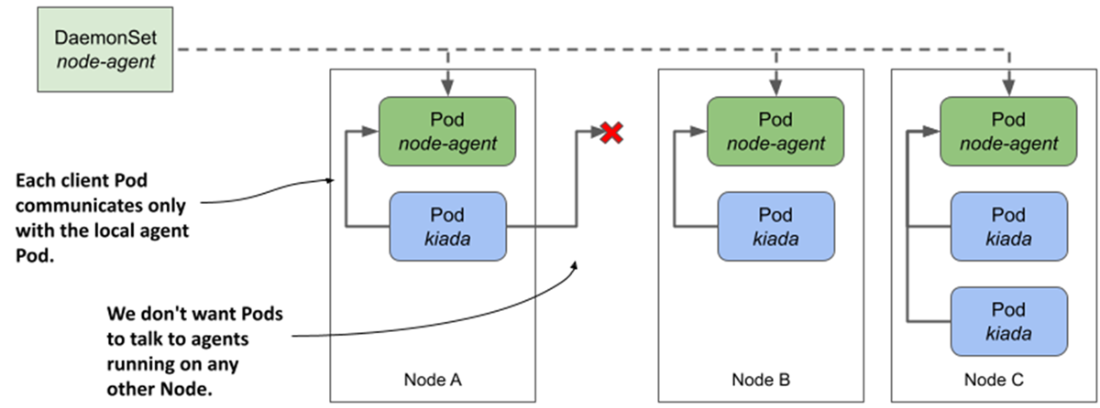

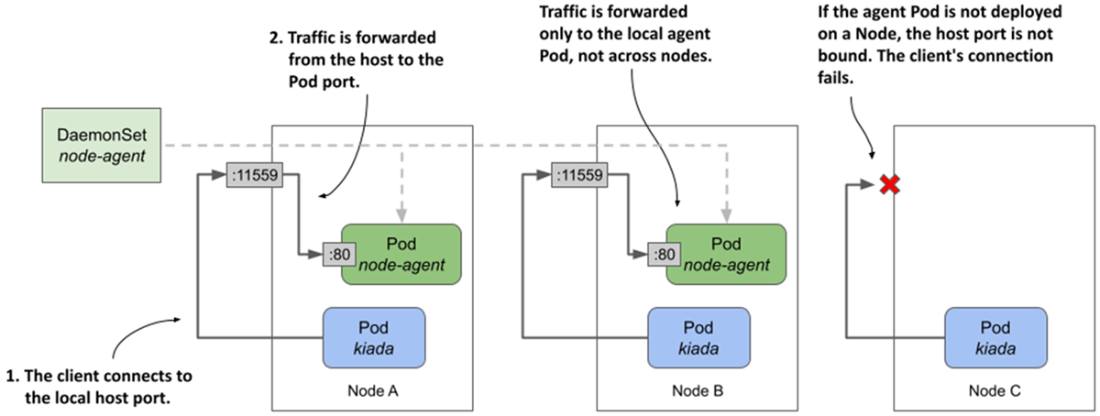

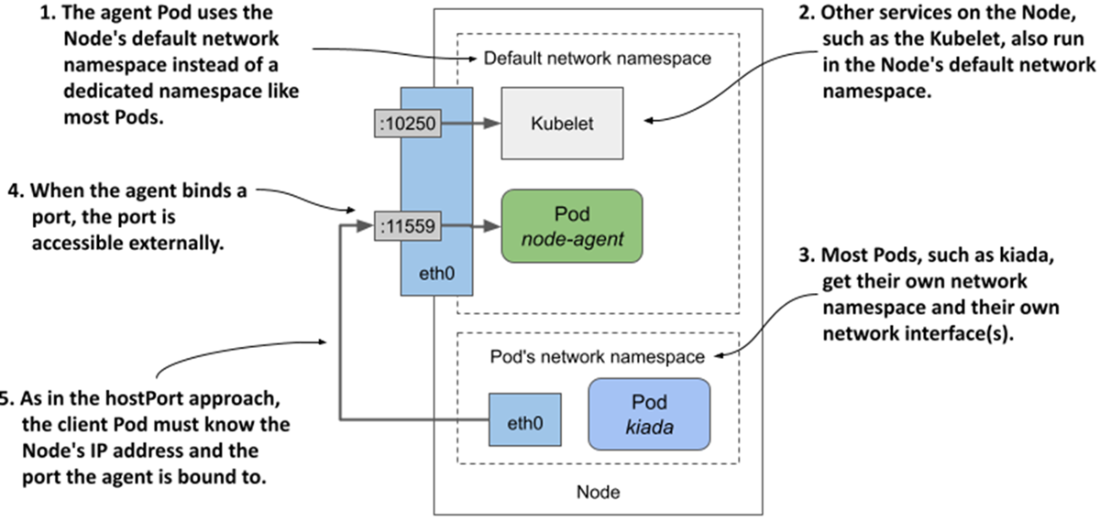

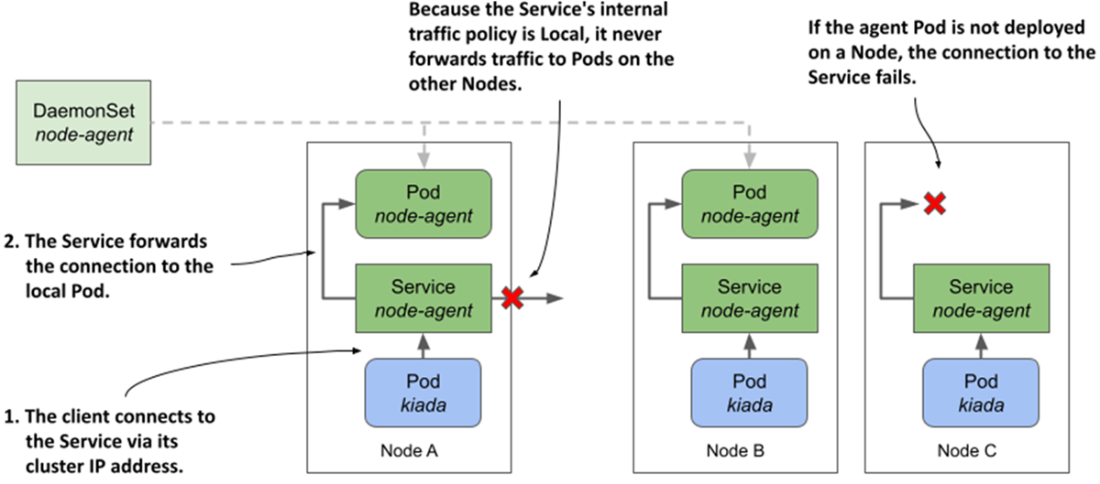

Node agents often need elevated host access. You can grant full privileges (privileged: true) or minimal kernel access via Linux capabilities, mount hostPath volumes to reach host files (for example, kernel modules or locks), and optionally share host namespaces (hostNetwork, and if required hostIPC/hostPID). Critical daemons should use PriorityClasses (for example, system-node-critical) so they preempt lower-priority workloads when resources are tight. For node-local client communication, three patterns are shown: bind a container port to a hostPort and let clients discover the node IP via the Downward API; run with hostNetwork and bind directly to a host port; or, preferably, expose the daemon through a ClusterIP Service configured with internalTrafficPolicy=Local, which routes each client Pod to the agent on its own node without opening node ports to the outside.
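The host-access and priority settings described above might be combined as in this sketch. The capability, the hostPath, the names, and the image are assumptions chosen for illustration; the Pod is placed in `kube-system` because some clusters restrict the system priority classes to that namespace.

```yaml
# Hypothetical example: capability, path, names, and image are placeholders.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-agent
  namespace: kube-system
spec:
  selector:
    matchLabels:
      app: node-agent
  template:
    metadata:
      labels:
        app: node-agent
    spec:
      # Built-in PriorityClass; lets the daemon preempt lower-priority Pods.
      priorityClassName: system-node-critical
      containers:
      - name: agent
        image: example.com/node-agent:1.0
        securityContext:
          # Grant one capability instead of privileged: true.
          capabilities:
            add:
            - NET_ADMIN
        volumeMounts:
        - name: modules
          mountPath: /lib/modules
          readOnly: true
      volumes:
      # Exposes a directory of the host file system to the container.
      - name: modules
        hostPath:
          path: /lib/modules
```

Adding only the capabilities the agent needs keeps its access narrower than full privileged mode.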

DaemonSets run a Pod replica on each node, whereas ReplicaSets scatter them around the cluster.

The DaemonSet controller’s reconciliation loop

A node selector is used to deploy DaemonSet Pods on a subset of cluster nodes.

How do we get client Pods to talk only to the locally running daemon Pod?

Exposing a daemon Pod via a host port
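For the host-port approach, a minimal sketch could pair a DaemonSet that maps a container port to a `hostPort` with a client Pod that learns its node's IP through the Downward API. The port numbers, names, images, and the `AGENT_URL` variable are hypothetical.

```yaml
# Hypothetical example: ports, names, and images are placeholders.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-agent
spec:
  selector:
    matchLabels:
      app: node-agent
  template:
    metadata:
      labels:
        app: node-agent
    spec:
      containers:
      - name: agent
        image: example.com/node-agent:1.0
        ports:
        - name: http
          containerPort: 8080
          hostPort: 9100       # forwarded from the node's IP to the container
---
apiVersion: v1
kind: Pod
metadata:
  name: client
spec:
  containers:
  - name: client
    image: example.com/client:1.0
    env:
    # Downward API: the IP of the node this Pod runs on.
    - name: NODE_IP
      valueFrom:
        fieldRef:
          fieldPath: status.hostIP
    # References NODE_IP, which must be defined earlier in this list.
    - name: AGENT_URL
      value: http://$(NODE_IP):9100
```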

Exposing a daemon Pod by using the host node’s network namespace
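For the host-network approach, the Pod template might look like the sketch below. The names and image are hypothetical, and the `dnsPolicy` line is an optional assumption for daemons that still need to resolve cluster-internal DNS names.

```yaml
# Hypothetical example: names and image are placeholders.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-agent
spec:
  selector:
    matchLabels:
      app: node-agent
  template:
    metadata:
      labels:
        app: node-agent
    spec:
      # The Pod shares the node's network namespace, so any port the
      # process binds is bound directly on the node.
      hostNetwork: true
      # Optional: keep resolving cluster DNS names while on the host network.
      dnsPolicy: ClusterFirstWithHostNet
      containers:
      - name: agent
        image: example.com/node-agent:1.0
```

Because the process binds ports on the node itself, it can conflict with any other process on the node that uses the same port.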

Exposing daemon Pods via a Service with internal traffic policy set to Local
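For the Service-based approach, a sketch of the Service could look like this. The Service name, label selector, and port numbers are hypothetical and must match whatever your daemon Pods actually use.

```yaml
# Hypothetical example: name, selector, and ports are placeholders.
apiVersion: v1
kind: Service
metadata:
  name: node-agent
spec:
  # Default type is ClusterIP; nothing is exposed outside the cluster.
  # Traffic from a Pod is routed only to endpoints on the same node.
  internalTrafficPolicy: Local
  selector:
    app: node-agent
  ports:
  - name: http
    port: 80
    targetPort: 8080
```

Clients then connect to the Service's DNS name as usual. If no daemon Pod is ready on a client's node, the connection fails rather than being routed to another node.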

Summary

- A DaemonSet object represents a set of daemon Pods distributed across the cluster Nodes so that exactly one daemon Pod instance runs on each eligible Node.

- A DaemonSet is used to deploy daemons and agents that provide system-level services such as log collection, process monitoring, node configuration, and other services required by each cluster Node.

- When you add a node selector to a DaemonSet, the daemon Pods are deployed only on a subset of all cluster Nodes.

- A DaemonSet doesn't deploy Pods to control plane Nodes unless you configure the Pod to tolerate the Nodes' taints.

- The DaemonSet controller ensures that a new daemon Pod is created when a new Node is added to the cluster, and that the daemon Pod is removed when its Node is removed.

- Daemon Pods are updated according to the update strategy specified in the DaemonSet. The RollingUpdate strategy updates Pods automatically and in a rolling fashion, whereas the OnDelete strategy requires you to manually delete each Pod for it to be updated.

- If Pods deployed through a DaemonSet require extended access to the Node's resources, such as the file system, network environment, or privileged system calls, you configure this in the Pod template in the DaemonSet.

- Daemon Pods should generally have a higher priority than Pods deployed via Deployments. You achieve this by assigning a higher-priority PriorityClass in the Pod template.

- Client Pods can communicate with local daemon Pods through a Service with internalTrafficPolicy set to Local, or through the Node's IP address if the daemon Pod is configured to use the Node's network environment (hostNetwork) or a host port is forwarded to the Pod (hostPort).

Kubernetes in Action, Second Edition ebook for free
