1 Introducing Kubernetes

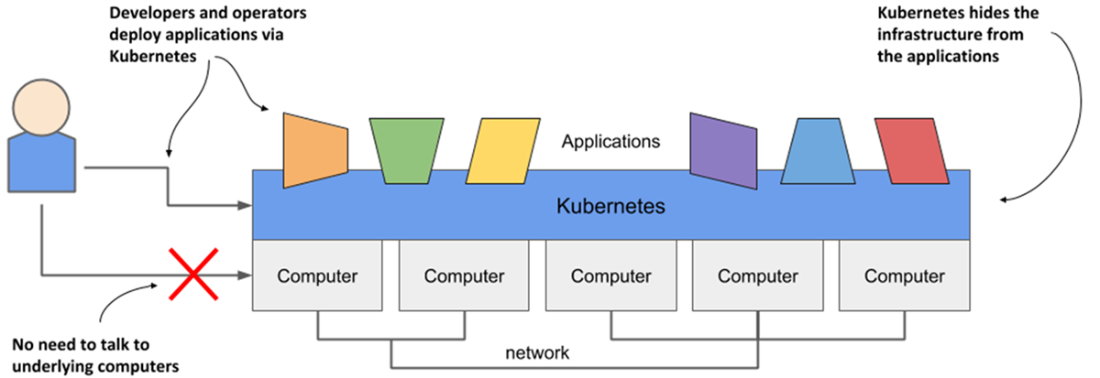

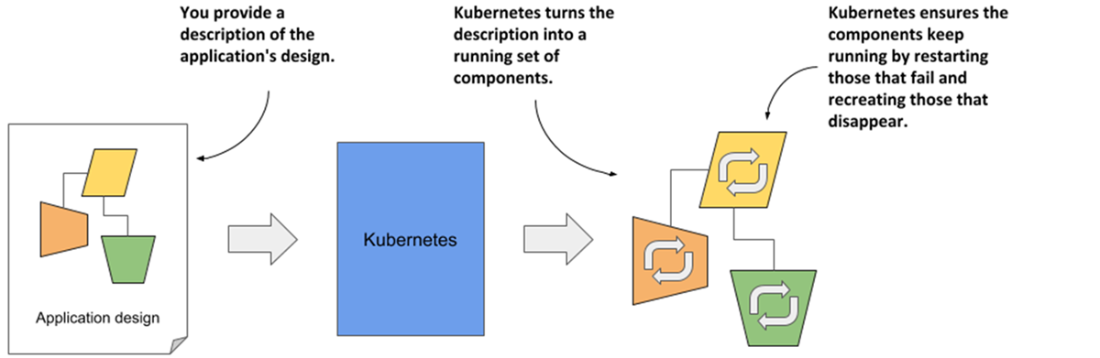

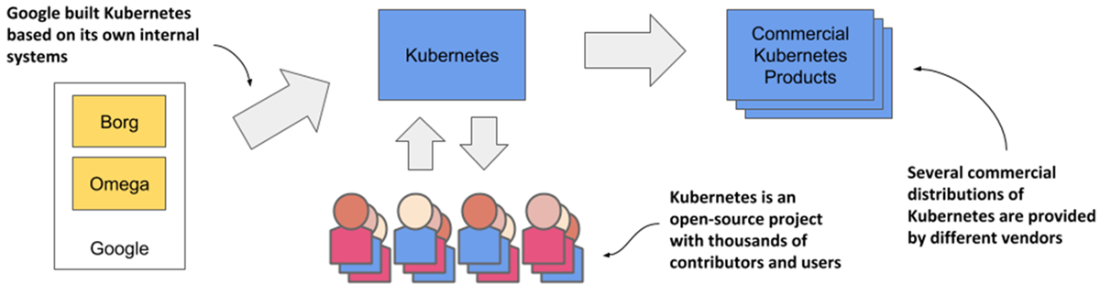

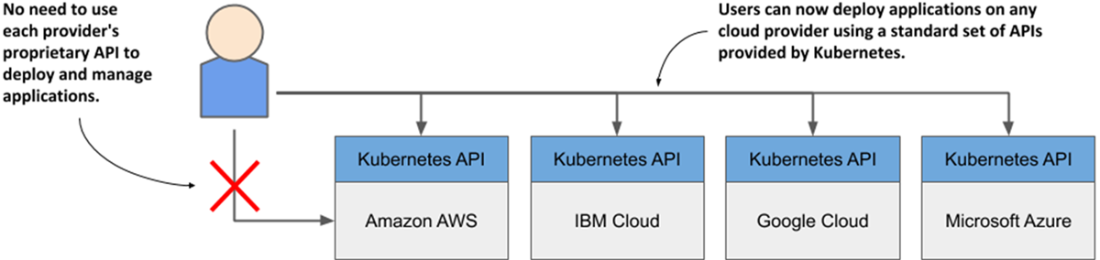

This chapter introduces Kubernetes as a system that “steers” containerized applications, abstracting away infrastructure so teams can focus on business logic. It explains how Kubernetes standardizes deployment across data centers and clouds through a declarative model: you describe the desired state and Kubernetes continuously reconciles reality to match it, handling restarts and replacements when needed. The text traces Kubernetes’ origins at Google, its lineage from Borg and Omega, and the rapid growth of its open-source ecosystem under the CNCF, which helped make Kubernetes a de facto standard for running modern, microservice-based systems and for reducing cloud lock-in through common APIs.

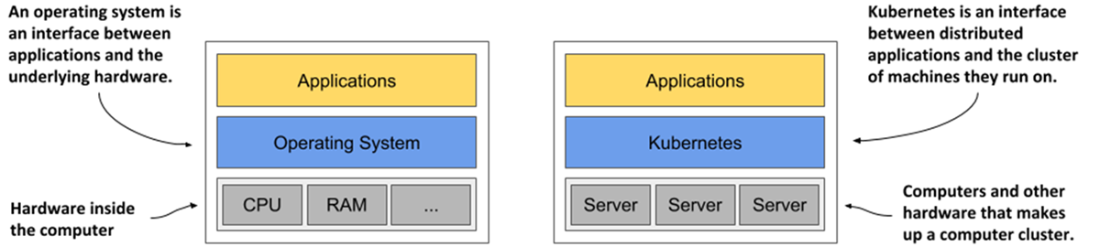

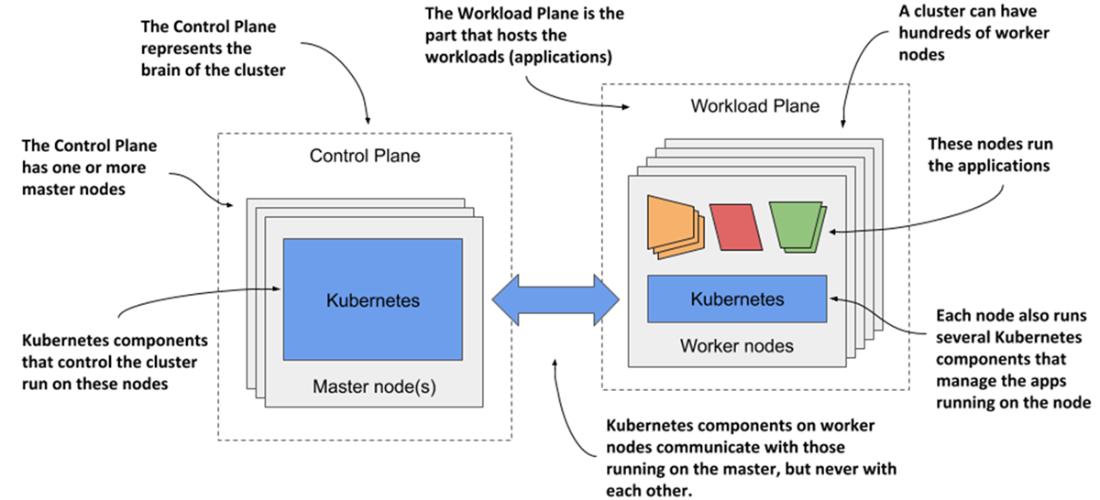

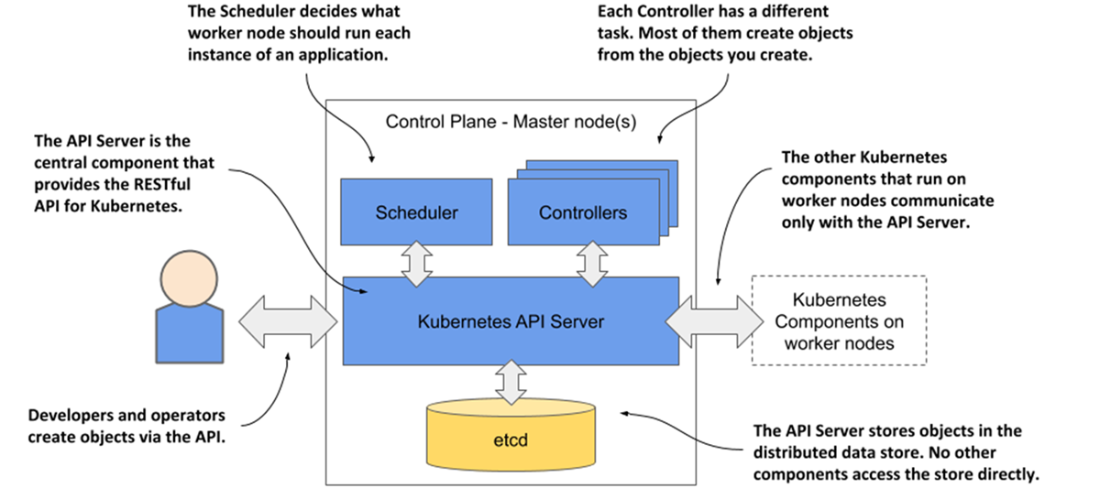

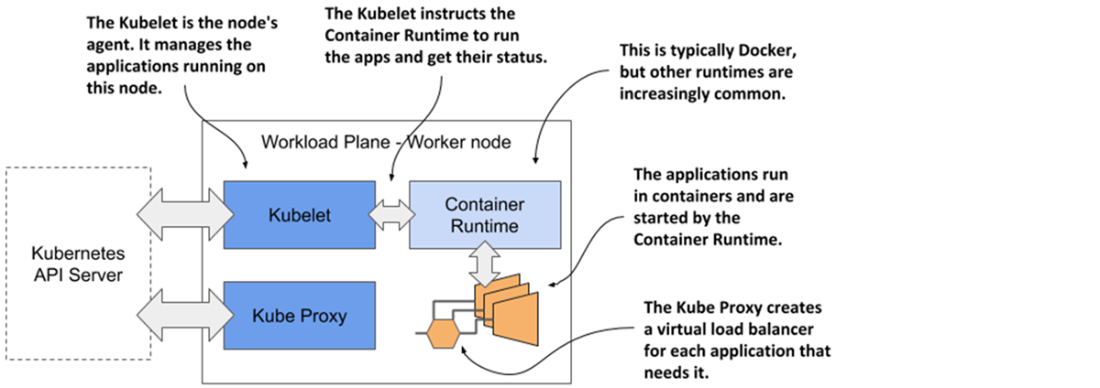

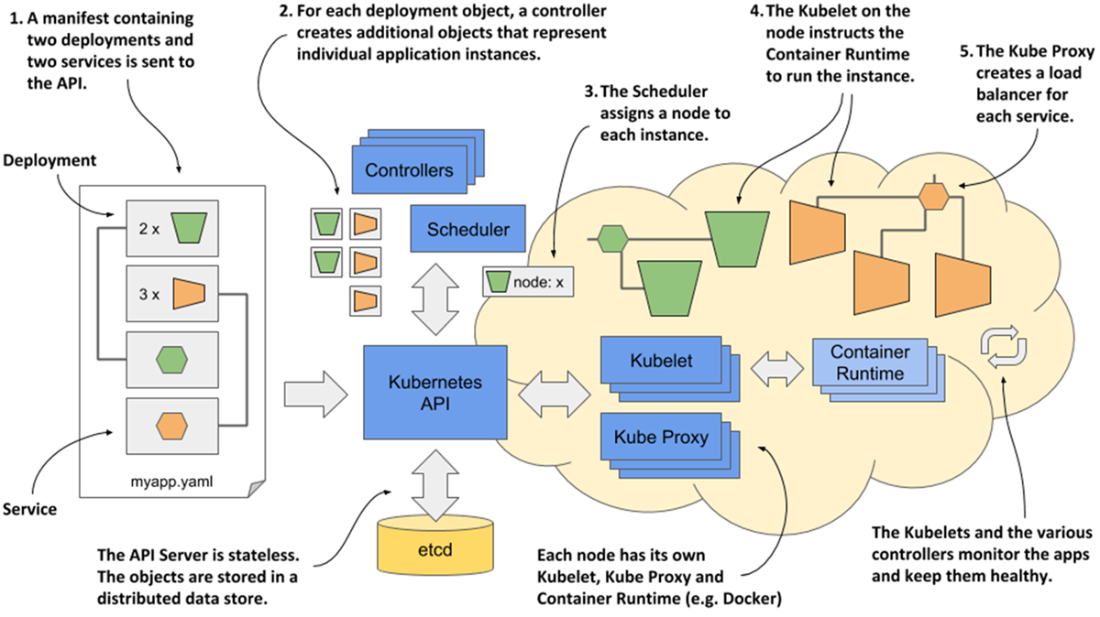

The chapter then reframes a cluster as a single, OS-like platform that provides essential distributed systems capabilities—service discovery, horizontal scaling, load balancing, self-healing, and leader election—so applications don’t need to implement them. It outlines the two-plane architecture: the Control Plane (API server, etcd, scheduler, and controllers) that holds and drives desired state, and the Workload Plane (worker nodes running kubelet, a container runtime, and kube-proxy) that executes workloads. Deployments are defined in YAML/JSON and submitted via kubectl; controllers create and maintain the required objects, the scheduler places workload instances on nodes, kubelet runs them in containers, and kube-proxy exposes them behind stable virtual endpoints, while the system continually monitors and heals applications and nodes.

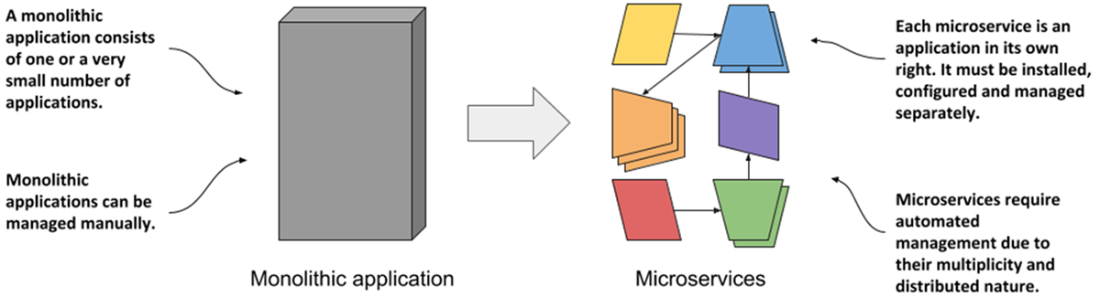

Finally, the chapter surveys adoption options and trade-offs. Organizations can run Kubernetes on-premises, in the cloud, or in hybrid configurations; cloud deployments add elasticity, and managed services offload the substantial operational burden of running Kubernetes yourself. Teams may choose vanilla upstream Kubernetes for cutting-edge features or enterprise distributions for hardened defaults, added capabilities, and integrated tooling. The chapter also cautions that Kubernetes’ complexity and learning curve carry upfront costs, and it may be unnecessary for monoliths or very small microservice estates; when scale, reliability, portability, and automation needs are high, however, Kubernetes can markedly improve utilization, resilience, and delivery speed.

Infrastructure abstraction using Kubernetes

The declarative model of application deployment

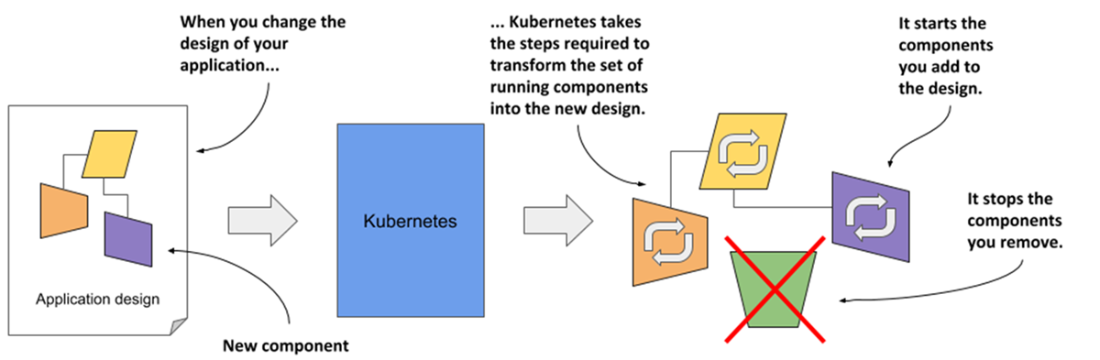

Changes in the description are reflected in the running application

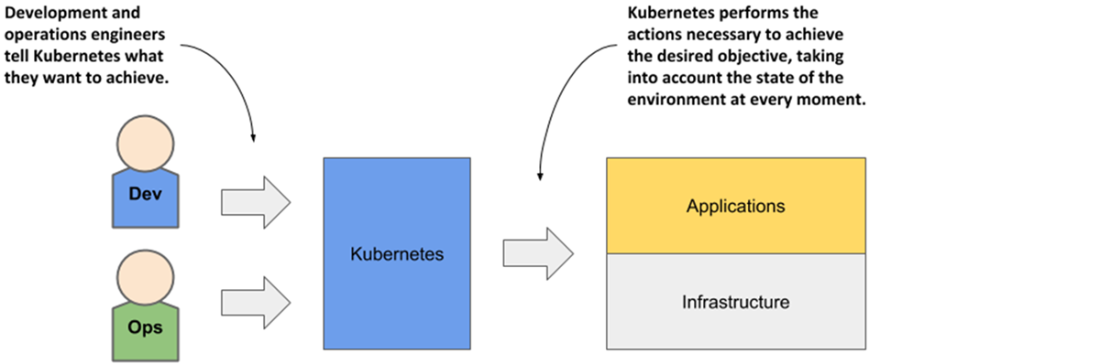

Kubernetes takes over the management of applications

The origins and state of the Kubernetes open-source project

Comparing monolithic applications with microservices

Kubernetes has standardized how you deploy applications on cloud providers

Kubernetes is to a computer cluster what an Operating System is to a computer

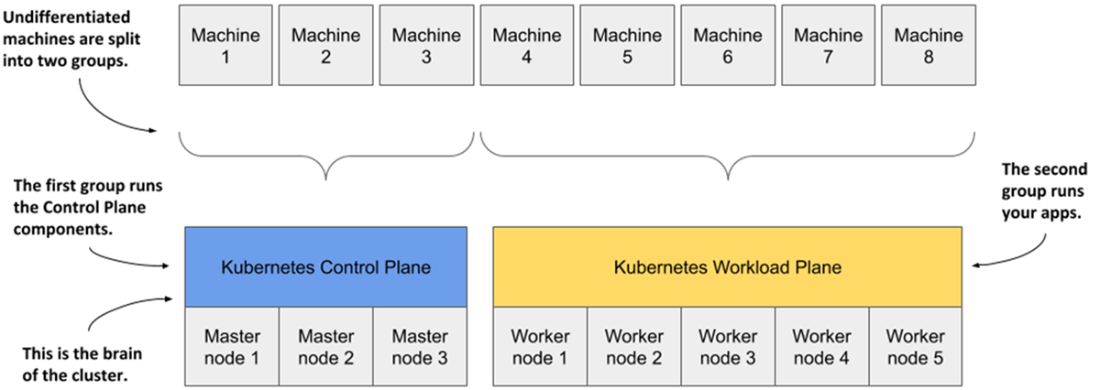

Computers in a Kubernetes cluster are divided into the Control Plane and the Workload Plane

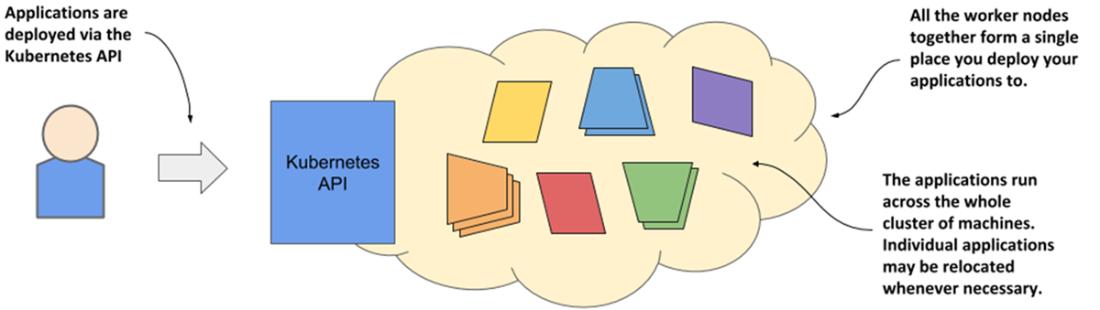

Kubernetes exposes the cluster as a uniform deployment area

The two planes that make up a Kubernetes cluster

The components of the Kubernetes Control Plane

The Kubernetes components that run on each node

Deploying an application to Kubernetes

Summary

- Kubernetes is Greek for helmsman. As a ship’s captain oversees the ship while the helmsman steers it, you oversee your computer cluster, while Kubernetes performs the day-to-day management tasks.

- Kubernetes is pronounced koo-ber-netties. Kubectl, the Kubernetes command-line tool, is pronounced kube-control.

- Kubernetes is an open-source project built upon Google’s vast experience in running applications on a global scale. Thousands of individuals now contribute to it.

- Kubernetes uses a declarative model to describe application deployments. After you provide a description of your application to Kubernetes, it brings it to life.

- Kubernetes is like an operating system for the cluster. It abstracts the infrastructure and presents all computers in a data center as one large, contiguous deployment area.

- Microservice-based applications are more difficult to manage than monolithic applications. The more microservices you have, the more you need to automate their management with a system like Kubernetes.

- Kubernetes helps both development and operations teams to do what they do best. It frees them from mundane tasks and introduces a standard way of deploying applications both on-premises and in any cloud.

- Using Kubernetes allows developers to deploy applications without the help of system administrators. It reduces operational costs through better utilization of existing hardware, automatically adjusts your system to load fluctuations, and heals itself and the applications running on it.

- A Kubernetes cluster consists of master and worker nodes. The master nodes run the Control Plane, which controls the entire cluster, while the worker nodes run the deployed applications or workloads, and therefore represent the Workload Plane.

- Using Kubernetes is simple, but managing it is hard. An inexperienced team should use a Kubernetes-as-a-Service offering instead of deploying and operating Kubernetes on its own.

FAQ

What is Kubernetes and why is it called that?

Kubernetes is an open-source system that automates deploying, scaling, and operating containerized applications. Its name comes from the Greek word for “helmsman” or “pilot,” reflecting its role in steering applications toward the desired state while you decide the course.

How do you pronounce “Kubernetes,” and what does “K8s” mean?

In English, you’ll commonly hear “koo-ber-NET-eez” or “koo-ber-NAY-teez.” The community often shortens it to “K8s” (pronounced “kates”), where the 8 counts the letters between the first and last.

What problems does Kubernetes solve?

It abstracts away underlying servers and networks, standardizes deployments across environments, provides declarative desired-state management, and automates day-to-day operations like scaling, load balancing, and self-healing.

What does “declarative deployment” mean in Kubernetes?

You describe the desired state of your app in manifests (YAML/JSON). Kubernetes continuously reconciles the actual state to match your description—starting, restarting, scaling, or relocating workloads as needed when you change the manifests.
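To make the idea concrete, here is a minimal sketch in Python. It builds a Deployment manifest as a dictionary and prints it as JSON, then shows a toy `reconcile` function that compares a desired replica count with what is running. The manifest fields follow the `apps/v1` Deployment schema, but the app name `demo-app`, the image `example.com/demo-app:1.0`, and the `reconcile` function itself are assumptions made up for illustration; real controllers are far more involved.

```python
import json

# A Deployment manifest expressed as a Python dict. The field names follow the
# apps/v1 Deployment schema; the app name and image are hypothetical.
manifest = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "demo-app"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "demo-app"}},
        "template": {
            "metadata": {"labels": {"app": "demo-app"}},
            "spec": {
                "containers": [
                    {"name": "web", "image": "example.com/demo-app:1.0"}
                ]
            },
        },
    },
}


def reconcile(desired_replicas, running):
    """Return the actions needed to move the actual state to the desired state.

    A toy stand-in for a controller, not Kubernetes' real logic.
    `running` is a list of the names of instances that are currently healthy.
    """
    actions = []
    missing = desired_replicas - len(running)
    if missing > 0:
        # Too few instances: start as many as are missing.
        actions += [("start", None)] * missing
    elif missing < 0:
        # Too many instances: stop the surplus ones (here, the last ones listed).
        actions += [("stop", name) for name in running[missing:]]
    return actions


if __name__ == "__main__":
    # Manifests may be JSON as well as YAML, so this output could be saved to a
    # file and submitted with `kubectl apply -f <file>`.
    print(json.dumps(manifest, indent=2))

    desired = manifest["spec"]["replicas"]
    print(reconcile(desired, ["demo-app-a"]))        # two instances short
    print(reconcile(desired, ["a", "b", "c", "d"]))  # one instance too many
```

Changing `replicas` in the manifest and running `reconcile` again yields a different set of actions, which mirrors how editing the description changes the running application.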

Why has Kubernetes seen such wide adoption?

It fits modern architectures (microservices), bridges Dev and Ops with standard APIs and tooling, and reduces cloud lock-in by offering a consistent interface across providers. It also grew a strong ecosystem under the CNCF, accelerating innovation and adoption.

How does Kubernetes view a data center or cloud environment?

It turns a cluster into a single logical deployment surface. You deploy to the cluster, not to specific machines. Kubernetes schedules workloads onto suitable nodes and may move them when needed. Each workload instance must still fit on a single node, though; Kubernetes does not merge several machines into one larger one.
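The point about fitting on one node can be sketched with a toy placement function. The `Node` class, the `pick_node` function, and the "most free CPU wins" rule are assumptions for illustration only; the real scheduler filters and scores nodes on many more criteria.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


def pick_node(nodes: List[Node], cpu_millicores: int, memory_mib: int) -> Optional[str]:
    """Return the name of a node that can hold the whole workload, or None.

    Hypothetical, simplified rule: keep only the nodes with enough free CPU
    and memory, then prefer the one with the most free CPU.
    """
    candidates = [
        n for n in nodes
        if n.free_cpu_millicores >= cpu_millicores
        and n.free_memory_mib >= memory_mib
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda n: n.free_cpu_millicores).name


if __name__ == "__main__":
    nodes = [Node("node-a", 2000, 4096), Node("node-b", 4000, 8192)]

    # A small workload fits on either node; the roomier one is chosen.
    print(pick_node(nodes, cpu_millicores=1000, memory_mib=2048))

    # The cluster has 6000 millicores free in total, but no single node has
    # 5000, so this workload cannot be placed anywhere.
    print(pick_node(nodes, cpu_millicores=5000, memory_mib=1024))
```

The second call returns `None` even though the cluster as a whole has enough capacity, which is exactly the limitation described above.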

What are the main Control Plane components and their roles?

- API Server: Exposes the Kubernetes API.

- etcd: Stores cluster state and objects.

- Scheduler: Assigns pods to nodes.

- Controllers: Drive the system toward the desired state (creating/updating objects, integrating with external systems).

What runs on each worker node?

- Kubelet: Manages pods on the node and reports status.

- Container Runtime: Runs containers (Docker or other compatible runtimes).

- Kube-Proxy: Provides service networking and load balancing.

Clusters often add DNS, networking plugins, logging, and other add-ons.

How does an application get from a manifest to running in the cluster?

- You submit manifests (usually via kubectl) to the API Server, which validates and persists them in etcd.

- Controllers create the lower-level objects, ultimately pods, that represent the requested replicas.

- The Scheduler binds each pod to a node.

- Kubelet on that node asks the container runtime to start the containers.

- Kube-Proxy wires up networking and load balancing for ready pods.

- Controllers and Kubelets monitor and keep the app healthy.
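The steps above can be walked through with a small in-memory simulation. Everything here is hypothetical: `ToyCluster`, its method names, the round-robin placement, and the pod naming scheme are invented for the sketch and do not correspond to real Kubernetes APIs. It only shows who does what, and how a lost pod gets replaced.

```python
import itertools


class ToyCluster:
    """A drastically simplified, in-memory stand-in for a cluster."""

    def __init__(self, node_names):
        self.deployments = {}  # plays the role of objects persisted in etcd
        self.pods = {}
        self._ids = itertools.count()
        self._next_node = itertools.cycle(list(node_names))

    def submit(self, name, replicas, image):
        """API server: validate the request, then persist it."""
        if replicas < 0:
            raise ValueError("replicas must not be negative")
        self.deployments[name] = {"replicas": replicas, "image": image}

    def run_controllers(self):
        """Controllers: create pod objects until each deployment has enough.

        Scaling down is left out to keep the sketch short.
        """
        for name, dep in self.deployments.items():
            owned = [p for p in self.pods.values() if p["owner"] == name]
            for _ in range(dep["replicas"] - len(owned)):
                pod_name = f"{name}-{next(self._ids)}"
                self.pods[pod_name] = {
                    "owner": name,
                    "image": dep["image"],
                    "node": None,
                    "running": False,
                }

    def run_scheduler(self):
        """Scheduler: bind every unassigned pod to a node (round-robin here)."""
        for pod in self.pods.values():
            if pod["node"] is None:
                pod["node"] = next(self._next_node)

    def run_kubelets(self):
        """Kubelets: start the containers of pods bound to a node."""
        for pod in self.pods.values():
            if pod["node"] is not None and not pod["running"]:
                pod["running"] = True

    def endpoints(self, name):
        """Kube-proxy's view: the running pods that can receive traffic."""
        return sorted(
            pod_name
            for pod_name, pod in self.pods.items()
            if pod["owner"] == name and pod["running"]
        )

    def step(self):
        """One pass of the whole pipeline."""
        self.run_controllers()
        self.run_scheduler()
        self.run_kubelets()


if __name__ == "__main__":
    cluster = ToyCluster(["node-1", "node-2"])
    cluster.submit("demo-app", replicas=3, image="example.com/demo-app:1.0")
    cluster.step()
    print(cluster.endpoints("demo-app"))

    # Simulate a pod disappearing; the next pass creates a replacement.
    del cluster.pods["demo-app-1"]
    cluster.step()
    print(cluster.endpoints("demo-app"))
```

Calling `step` repeatedly stands in for the continuous monitoring in the last step: as long as the stored description asks for three replicas, each pass restores that count.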

How should my organization adopt Kubernetes (and when might it be the wrong choice)?

You can run Kubernetes on-premises, in the cloud, or in a hybrid setup. Because operating Kubernetes yourself is complex, consider a managed service (GKE, AKS, EKS, etc.) instead. Use an enterprise distribution (e.g., OpenShift, Rancher) if you need hardened defaults and extra features, or vanilla Kubernetes if you want the latest features and more flexibility. Kubernetes shines when you run many microservices and need automation. For monoliths or a handful of services, the added complexity, training, and interim costs may outweigh the benefits.

Kubernetes in Action, Second Edition ebook for free
