1 Knowledge graphs and LLMs: a killer combination

Artificial intelligence—especially generative models—has changed how people interact with technology, yet mission-critical domains still demand accuracy, transparency, and fresh, domain-grounded knowledge that generic LLMs struggle to provide on their own. This chapter introduces knowledge graphs (KGs) as structured, contextual, and explainable representations of entities and their relationships, and shows why pairing them with LLMs creates a compelling foundation for advanced applications. The two technologies are presented as complementary: KGs bring verifiable, updatable knowledge and provenance, while LLMs contribute powerful natural language understanding and generation. The result is a “killer combination” aimed at sectors like healthcare, finance, and law enforcement, and at practitioners who need both expressive knowledge management and intuitive user experiences.

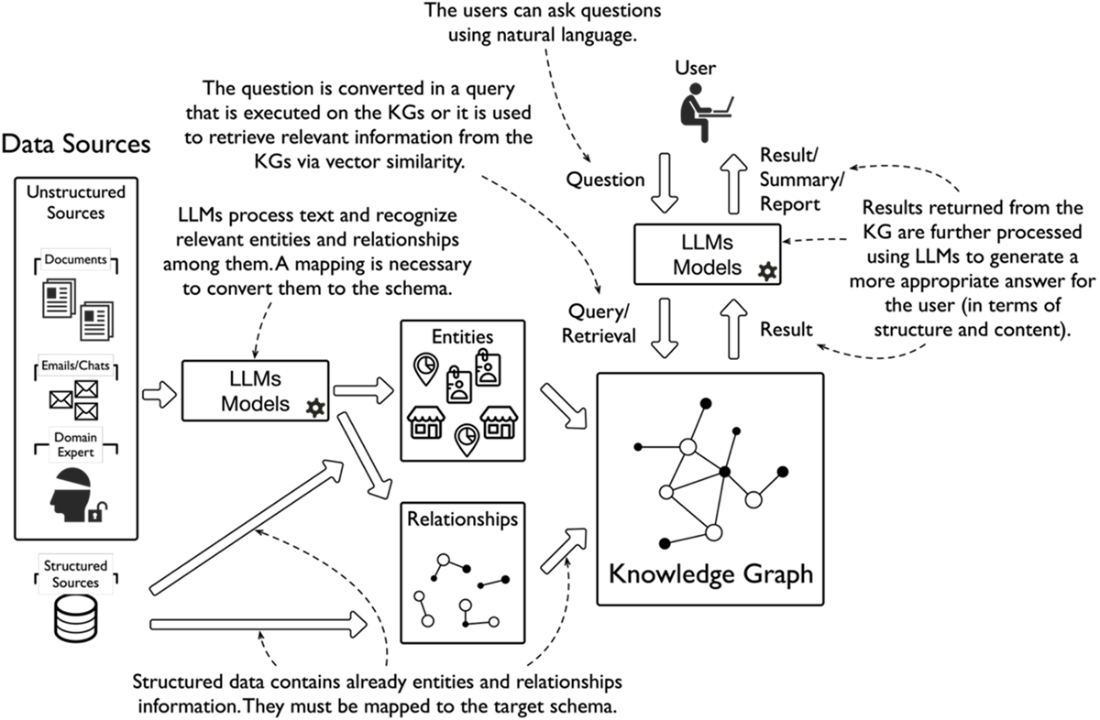

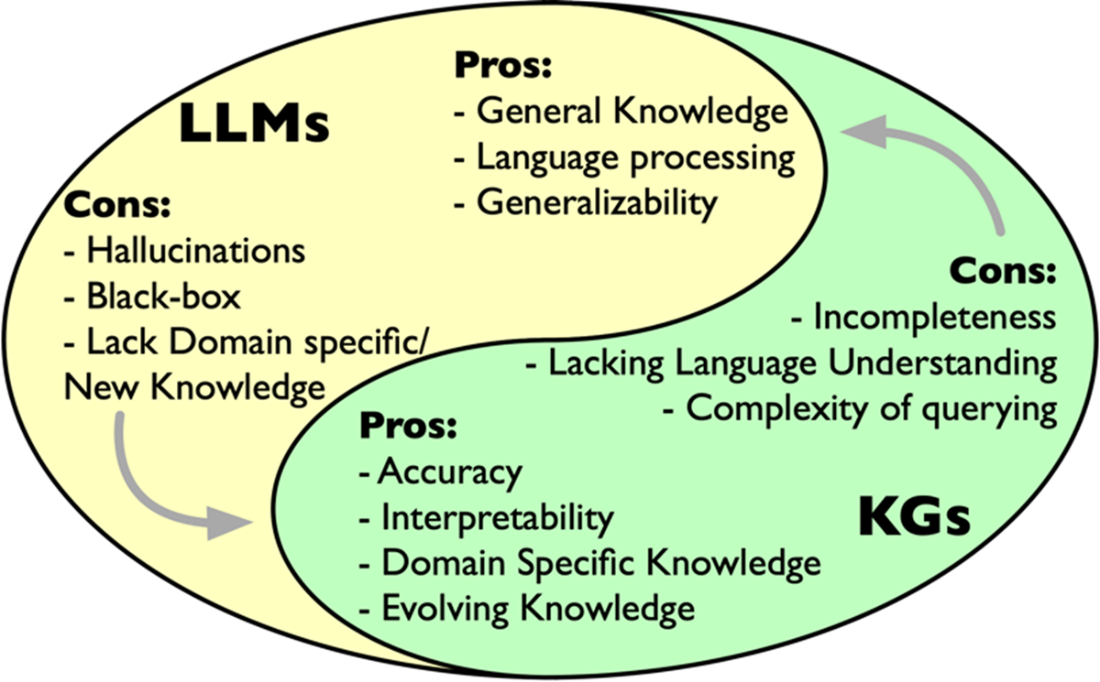

The chapter details how LLMs strengthen KGs by extracting entities and relations from unstructured text, accelerating graph construction, easing complex querying through natural language, and summarizing multi-hop results into clear answers. In the opposite direction, KGs mitigate LLM limitations by grounding responses in trusted data, reducing hallucinations, improving explainability, and keeping knowledge current without retraining an entire model. Together they enable natural language access to deeply connected organizational knowledge, harmonize heterogeneous sources, and support sophisticated reasoning and analysis. This synergy sets up a flywheel in which better graphs yield better model behavior, which in turn enriches the graphs. Concrete scenarios, such as drug discovery and conversational customer support, illustrate the practical value of the approach.
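One way to picture how a KG grounds an LLM's answer is a retrieval step that pulls facts about an entity and places them, with their sources, into the prompt. The sketch below is only an illustration under stated assumptions: the `Fact` type, the toy `FACTS` list, the source labels, and the `build_grounded_prompt` helper are all hypothetical, and no actual model is called.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    """One KG statement plus the source it came from (provenance)."""
    subject: str
    predicate: str
    obj: str
    source: str


# Hypothetical toy graph; a real system would query a graph database instead.
FACTS = [
    Fact("Aspirin", "TREATS", "Headache", "formulary-2024"),
    Fact("Aspirin", "INTERACTS_WITH", "Warfarin", "interaction-db"),
    Fact("Ibuprofen", "TREATS", "Headache", "formulary-2024"),
]


def retrieve(entity: str, facts: list[Fact]) -> list[Fact]:
    """Return every fact that mentions the entity as subject or object."""
    return [f for f in facts if entity in (f.subject, f.obj)]


def build_grounded_prompt(question: str, entity: str, facts: list[Fact]) -> str:
    """Assemble a prompt that restricts the model to retrieved, cited facts."""
    lines = [
        f"- {f.subject} {f.predicate} {f.obj} [source: {f.source}]"
        for f in retrieve(entity, facts)
    ]
    context = "\n".join(lines) if lines else "- (no facts found)"
    return (
        "Answer using only the facts below and cite their sources.\n"
        f"Facts:\n{context}\n"
        f"Question: {question}"
    )


prompt = build_grounded_prompt("What does Aspirin interact with?", "Aspirin", FACTS)
print(prompt)
```

Because the facts live in the graph rather than in model weights, updating the graph changes what the prompt contains without any retraining, and the source labels give the answer a traceable origin.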

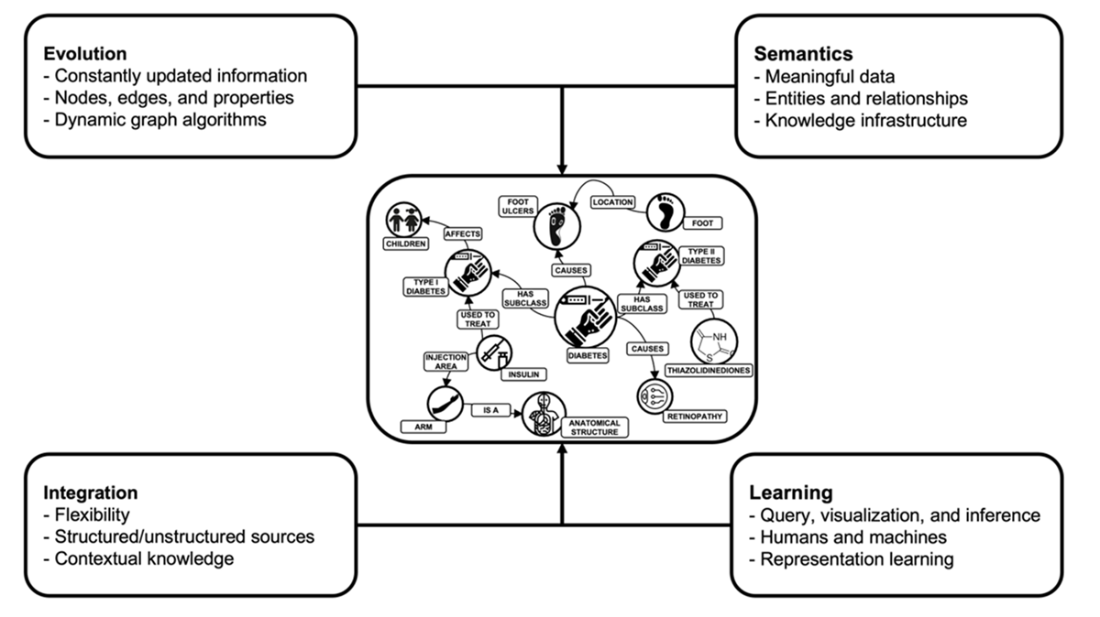

From an implementation view, the chapter frames a paradigm shift from rigid, siloed databases to graph-centered knowledge substrates built on four pillars: evolution (flexible, ever-growing structures), semantics (typed entities and meaningful relationships), integration (uniting structured and unstructured sources), and learning (reasoning and analytics for humans and machines). It advocates pragmatic, “just enough” semantics via taxonomies and ontologies, avoiding unnecessary rigidity while preserving interpretability and inference. The book is technology-agnostic, drawing on both RDF/SPARQL and labeled property graphs with openCypher/Gremlin, and explains their complementary strengths. Readers are guided to model schemas, ingest and validate data, apply modern ML (including GNNs), and use LLMs for extraction, query generation, and summarization—always anchored in business goals and demonstrated through real-world, end-to-end examples.
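To make the two representation styles mentioned above more concrete, the following sketch encodes the same small set of facts once as RDF-style triples and once as a labeled property graph, using plain Python structures rather than any real RDF or graph database library. The `ex:` prefix, the identifiers, and the `lpg_to_triples` helper are assumptions made for illustration only.

```python
# RDF style: every statement is a (subject, predicate, object) triple.
# The "ex:" prefix and all names are hypothetical.
rdf_triples = {
    ("ex:alice", "rdf:type", "ex:Patient"),
    ("ex:alice", "ex:name", "Alice"),
    ("ex:flu", "rdf:type", "ex:Disease"),
    ("ex:alice", "ex:diagnosedWith", "ex:flu"),
}

# LPG style: nodes and edges are records that carry labels and properties.
lpg_nodes = {
    "alice": {"labels": {"Patient"}, "props": {"name": "Alice"}},
    "flu": {"labels": {"Disease"}, "props": {}},
}
lpg_edges = [
    {"src": "alice", "type": "diagnosedWith", "dst": "flu", "props": {"since": 2023}},
]


def lpg_to_triples(nodes, edges, prefix="ex:"):
    """Flatten a toy LPG into triples.

    Edge properties have no slot in a single plain triple, so this sketch
    returns those edges separately instead of choosing a modelling pattern.
    """
    triples = set()
    for node_id, node in nodes.items():
        for label in node["labels"]:
            triples.add((prefix + node_id, "rdf:type", prefix + label))
        for key, value in node["props"].items():
            triples.add((prefix + node_id, prefix + key, value))
    edges_with_props = []
    for edge in edges:
        triples.add((prefix + edge["src"], prefix + edge["type"], prefix + edge["dst"]))
        if edge["props"]:
            edges_with_props.append(edge)
    return triples, edges_with_props


triples, edges_with_props = lpg_to_triples(lpg_nodes, lpg_edges)
print(triples == rdf_triples)
print(edges_with_props)
```

The node-level facts map cleanly in both directions, while the `since` property on the relationship is the kind of detail where the two models differ in how they are usually expressed.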

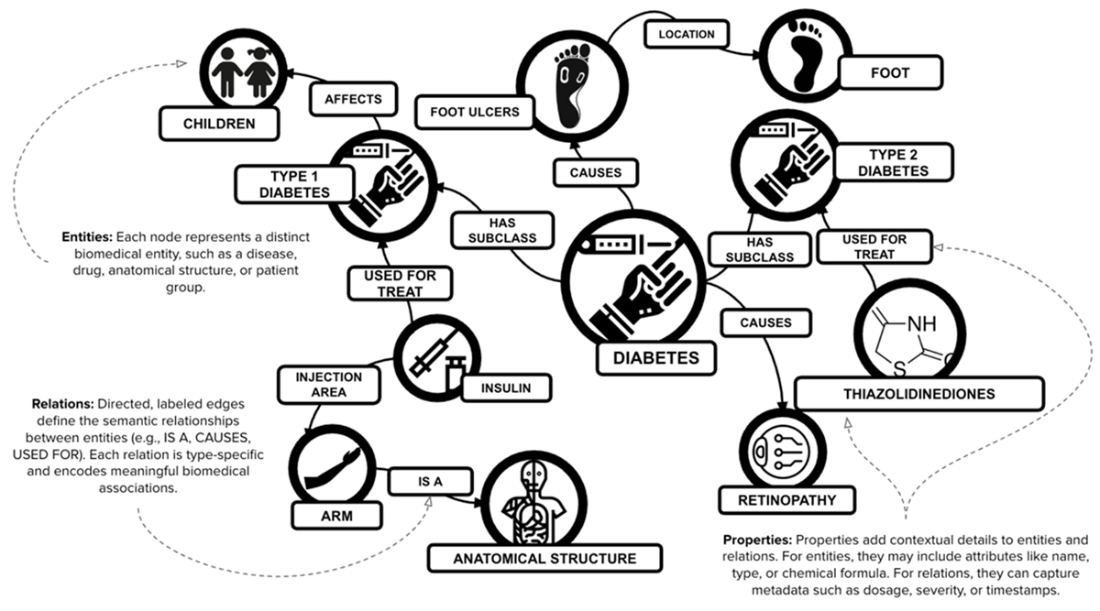

Example of a KG in the healthcare domain. The nodes (the circles) represent entities such as people, diseases, and anatomical parts. The edges represent meaningful connections among entities. Both nodes and edges have properties describing relevant details.
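The structure described in this caption can be sketched in a few lines of Python: nodes and edges are typed records with properties, and a multi-hop question becomes a traversal. The node identifiers, labels, property values, and the `reachable` helper below are hypothetical and do not come from the figure itself.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Node:
    id: str
    label: str                      # entity type, e.g. Person or Disease
    props: dict = field(default_factory=dict)


@dataclass
class Edge:
    src: str
    rel: str                        # relationship type
    dst: str
    props: dict = field(default_factory=dict)


# Hypothetical healthcare data, invented for illustration.
nodes = {n.id: n for n in [
    Node("p1", "Person", {"name": "Alice", "age": 54}),
    Node("d1", "Disease", {"name": "Type 2 diabetes"}),
    Node("a1", "AnatomicalPart", {"name": "Pancreas"}),
]}
edges = [
    Edge("p1", "DIAGNOSED_WITH", "d1", {"year": 2021}),
    Edge("d1", "AFFECTS", "a1"),
]


def reachable(start: str, max_hops: int) -> dict:
    """Breadth-first traversal along edge direction, up to max_hops.

    Returns a mapping from each reached node id to its hop distance.
    """
    seen = {start: 0}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if seen[current] == max_hops:
            continue  # do not expand beyond the hop limit
        for e in edges:
            if e.src == current and e.dst not in seen:
                seen[e.dst] = seen[current] + 1
                queue.append(e.dst)
    return {nid: hops for nid, hops in seen.items() if nid != start}


for node_id, hops in reachable("p1", 2).items():
    print(hops, nodes[node_id].label, nodes[node_id].props["name"])
```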

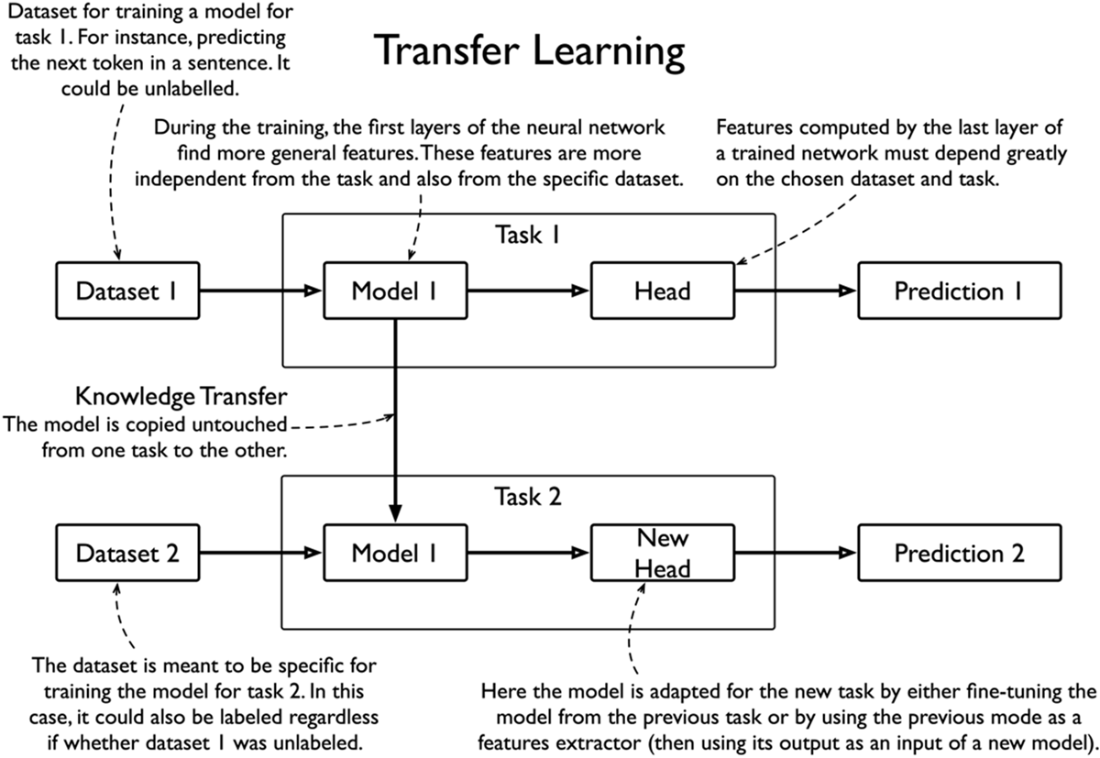

High-level principles of transfer learning. A model (or part of it) trained on one task (e.g., predicting randomly masked tokens) is reused as part of the training and prediction for another task (e.g., relation extraction).

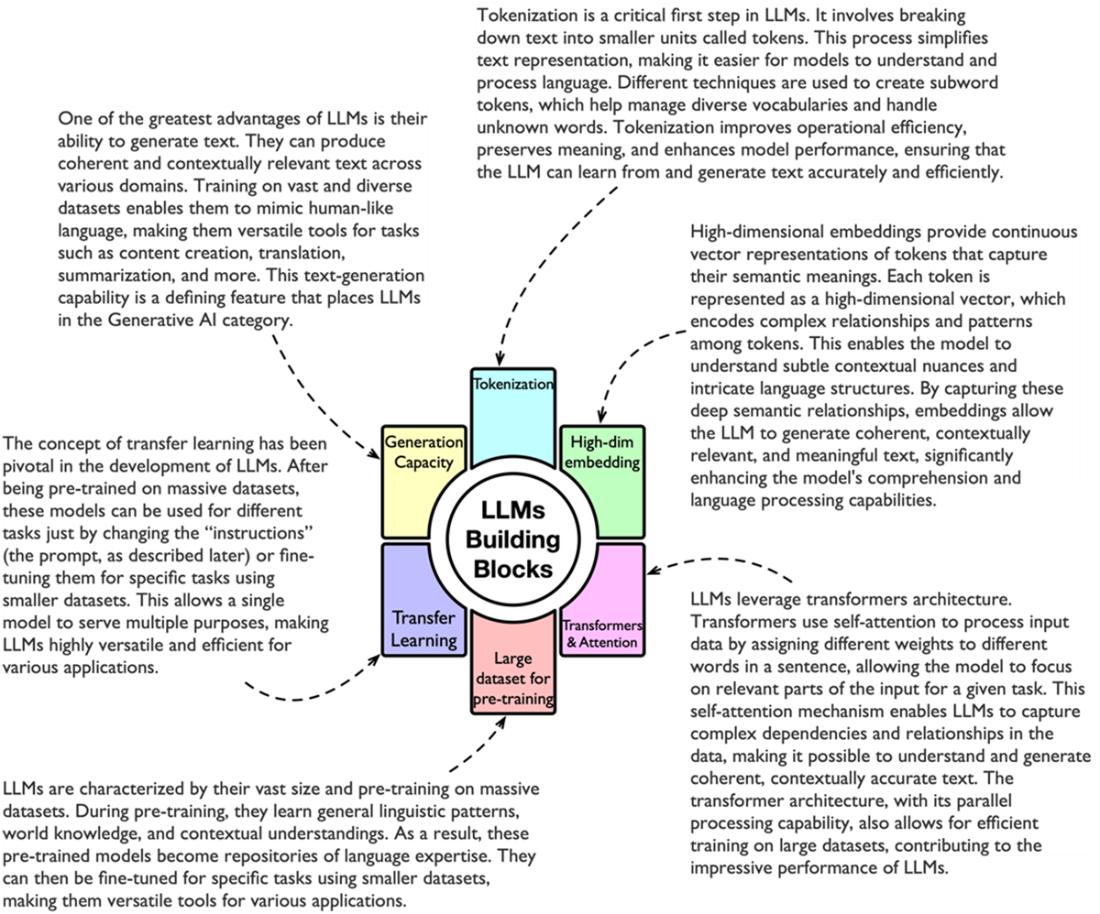

LLM building blocks and differentiating characteristics.

Knowledge graph construction with and without LLMs, and LLM support for querying and retrieval.

Summary of how LLMs and KGs can complement each other. Inspired by [11].

The four pillars of KGs: evolution, semantics, integration, and learning

Summary

- LLMs and KGs empower each other by overcoming individual limitations when used in isolation.

- LLMs support KG creation from unstructured data and simplify the querying phase.

- KGs provide grounding knowledge that lets LLMs answer domain-specific questions using up-to-date and private data.

- Data-driven systems with contextualized knowledge are strategic for high-impact applications like recruitment tools and medical predictions.

- KGs represent a core abstraction for incorporating human knowledge into machines, while LLMs provide natural language understanding capabilities.

- KG and LLM adoption represents a paradigm shift in which intelligent behavior is encoded once in a single source of truth. This makes the same data representation usable across different applications and diverse tasks.

- KGs are ever-evolving graph data structures containing typed entities, their attributes, and meaningful relationships. They are built for specific domains from structured and unstructured data to craft knowledge for both humans and machines.

- KGs have four pillars: evolution, semantics, integration, and learning.

- KGs and LLMs support critical domains with data-driven decisions across multiple applications. These include Customer 360 for banking, drug discovery, retail recommendations, and conversational systems.

- Two key technologies represent KGs: Resource Description Framework (RDF) and labeled property graphs (LPG).

- Taxonomies and ontologies play fundamental roles by incorporating semantic metadata that makes traditional graphs smarter.
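As a rough illustration of how a taxonomy adds semantics to a graph, the sketch below stores a small subclass hierarchy and uses it to answer a query at a more general level than the one at which the data was recorded. The class names, the instance identifiers, and both helper functions are assumptions chosen for this example, not a prescribed modelling approach.

```python
# Hypothetical taxonomy: each class maps to its direct parent class.
SUBCLASS_OF = {
    "Type2Diabetes": "Diabetes",
    "Diabetes": "MetabolicDisease",
    "MetabolicDisease": "Disease",
    "Influenza": "InfectiousDisease",
    "InfectiousDisease": "Disease",
}

# Hypothetical instance data: each record is typed with its most specific class.
INSTANCE_OF = {
    "patient_42_dx": "Type2Diabetes",
    "patient_7_dx": "Influenza",
}


def ancestors(cls: str) -> list:
    """Walk up the hierarchy and collect every superclass of cls."""
    result = []
    while cls in SUBCLASS_OF:
        cls = SUBCLASS_OF[cls]
        result.append(cls)
    return result


def instances_of(cls: str) -> list:
    """Return instances typed with cls directly or with any of its subclasses."""
    return sorted(
        inst for inst, c in INSTANCE_OF.items()
        if c == cls or cls in ancestors(c)
    )


print(ancestors("Type2Diabetes"))
print(instances_of("MetabolicDisease"))
print(instances_of("Disease"))
```

Nothing in the instance data says that `patient_42_dx` is a metabolic disease; that answer is inferred from the hierarchy, which is the sense in which semantic metadata makes a plain graph "smarter".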

References

- J. Launchbury, "A DARPA Perspective on Artificial Intelligence," 2020. Accessed: May 5, 2022. [Online].

- J. Yosinski et al., "How transferable are features in deep neural networks?," in Proceedings of the 27th International Conference on Neural Information Processing Systems - Volume 2, Cambridge, MA, USA: MIT Press, 2014, pp. 3320–3328. Accessed: July 26, 2024. [Online]. Available: https://arxiv.org/abs/1411.1792

- A. Vaswani et al., "Attention is all you need," In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS'17). Curran Associates Inc., Red Hook, NY, USA, 6000–6010, 2017. Accessed: July 26, 2024. [Online]. Available: https://arxiv.org/abs/1706.03762

- J. Devlin et al., "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding," 2018, Accessed: July 26, 2024. [Online]. Available: https://arxiv.org/abs/1810.04805

- A. Radford et al., "Language models are unsupervised multitask learners," 2019. Accessed: July 26, 2024. [Online]. Available: https://api.semanticscholar.org/CorpusID:160025533

- OpenAI, GPT-4 Technical Report. 2023, Accessed: July 26, 2024. [Online]. Available: https://arxiv.org/abs/2303.08774

- J. W. Rae et al., "Scaling language models: Methods, analysis & insights from training Gopher," arXiv preprint arXiv:2112.11446, 2021, Accessed: July 26, 2024. [Online]. Available: https://arxiv.org/abs/2112.11446

- A. Chowdhery et al., "PaLM: Scaling language modeling with pathways," The Journal of Machine Learning Research, Volume 24, Issue 1 Article No.: 240, Pages 11324 - 11436, 2023, Accessed: July 26, 2024. [Online]. Available: https://dl.acm.org/doi/10.5555/3648699.3648939

- J. Kaplan et al., “Scaling Laws for Neural Language Models,” 2020, arXiv:2001.08361 [cs.LG], Accessed: July 26, 2024. [Online]. Available: https://arxiv.org/abs/2001.08361

- J. Z. Pan et al., "Large Language Models and Knowledge Graphs: Opportunities and Challenges," Transactions on Graph Data and Knowledge, vol. 1, no. 1, pp. 2:1–2:38, 2023, doi: 10.4230/TGDK.1.1.2. Accessed: July 26, 2024. [Online]. Available: https://arxiv.org/abs/2308.06374

- S. Pan et al., "Unifying Large Language Models and Knowledge Graphs: A Roadmap," IEEE Transactions on Knowledge and Data Engineering, vol. 36, pp. 3580–3599, 2024. Accessed: July 26, 2024. [Online]. Available: https://ieeexplore.ieee.org/document/10387715

- D. Grande et al., “Reducing data costs without jeopardizing growth.” McKinsey Digital, 2020. Accessed: May 5, 2022. [Online]. Available: https://www.mckinsey.com/business-functions/mckinsey-digital/our-insights/reducing-data-costs-without-jeopardizing-growth

- F. Lecue, “On the role of knowledge graphs in explainable AI,” Semantic Web, vol. 11 no. 1, pp. 41-51, 2020. Accessed: May 5, 2022. [Online]. Available: http://semantic-web-journal.org/system/files/swj2259.pdf

- H. Zhang et al., "Grounded conversation generation as guided traverses in commonsense knowledge graphs," arXiv preprint arXiv:1911.02707, May 5, 2020. Accessed: May 5, 2022. [Online]. Available: https://arxiv.org/abs/1911.02707

- J. Barrasa, A. E. Hodler, and J. Webber, Knowledge Graphs. Sebastopol, CA, USA: O'Reilly Media, Inc., 2021. Accessed: May 5, 2022. [Online]. Available: https://www.oreilly.com/library/view/knowledge-graphs/9781098104863/

Knowledge Graphs and LLMs in Action ebook for free
