1 Big Picture, What is GPT?

Artificial intelligence has surged into everyday conversation, largely due to ChatGPT, and this chapter sets the stage by explaining what GPT is and why it matters. It introduces GPT as a Generative Pre-trained Transformer, situating it within the broader category of large language models that create new content based on patterns learned from vast text data. The authors aim to demystify how these systems work in plain language, clarifying what they can and cannot do and why. The discussion emphasizes that ChatGPT’s impact stems from scale—models that are far larger and trained on far more data than earlier approaches—enabling convincing conversation, summarization, and instruction following.

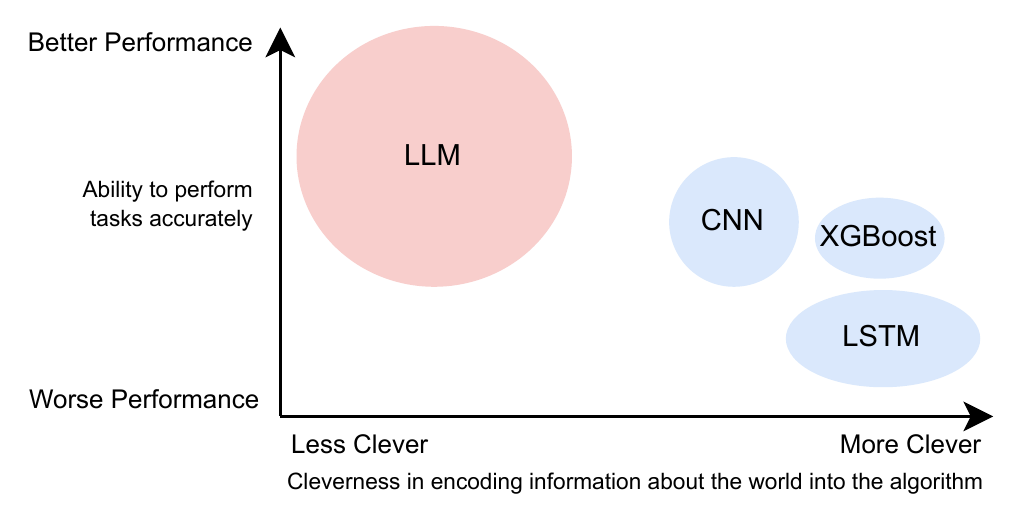

At a high level, GPTs are language models from the field of natural language processing. They learn statistical relationships among words rather than acquiring human-like understanding. They rely on neural networks, especially Transformers, to encode and generate text, and their effectiveness arises from massive datasets, enormous parameter counts, and specialized hardware. The chapter contrasts human, interactive language learning with the model’s static training process, stressing that the learned representation is powerful but fallible and steerable. A central lesson is that “bigger often beats clever”: with LLMs, performance gains frequently come from additional data and larger models rather than from intricate algorithmic tweaks. Training such systems is computationally expensive, so the book focuses on durable concepts rather than fast-changing implementation details.
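To make "statistical relationships among words" concrete, here is a minimal sketch of a bigram counter: it only records which word tends to follow which. This is not how GPT works internally (GPT uses Transformer neural networks, not lookup tables), and the function names and tiny corpus are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus; real models train on vastly more text.
corpus = "the cat sat on the mat . the cat ate the fish ."
counts = train_bigram_counts(corpus)
print(most_likely_next(counts, "the"))  # prints "cat"
```

The counter has no notion of what a cat is; it only knows that "cat" followed "the" more often than any other word did. An LLM learns far richer patterns, but in the same spirit: from observed text, not from lived experience.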

The chapter also frames both the promise and the risks. GPTs can accelerate tasks like summarization, content creation, and dialogue, yet they can make confident mistakes, fail at seemingly simple problems, and be manipulated into harmful outputs. Responsible use requires skepticism, validation, and thoughtful system design that anticipates failure modes and ethical concerns. The book targets a broad audience with minimal coding and math prerequisites, offering the vocabulary and mental models needed to evaluate applications, understand limitations, and decide when to use or avoid LLMs. By the end, readers should be equipped to engage with GPTs’ capabilities and constraints in real-world contexts.

A simple haiku generated by ChatGPT

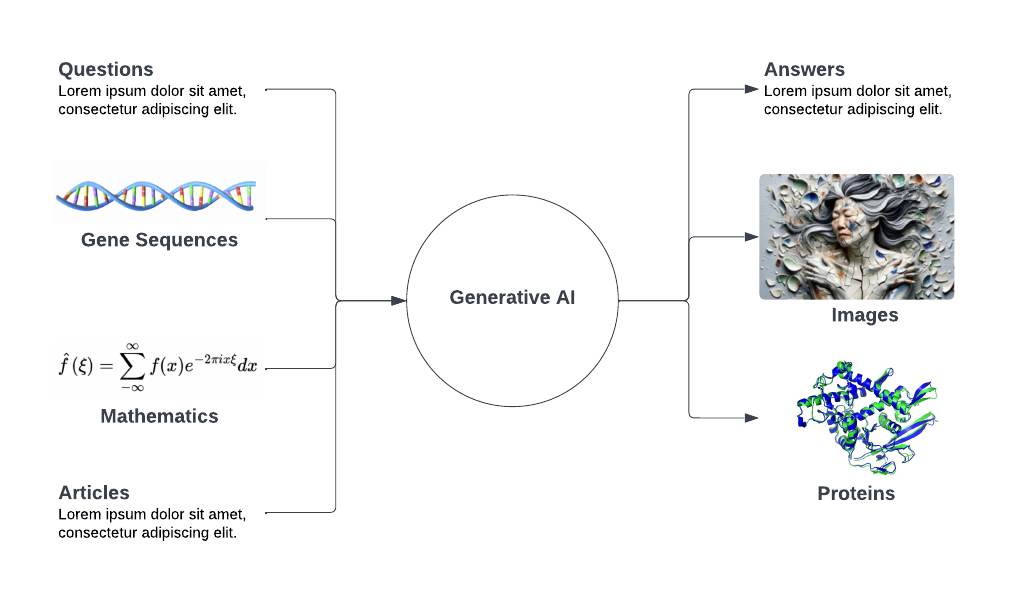

Generative AI is about taking some input (numbers, text, images) and producing a new output (usually text or images). Any combination of input/output options is possible, and the nature of the output depends on what the algorithm was trained for. The goal might be to add detail, rewrite something to be shorter, extrapolate missing portions, and more.

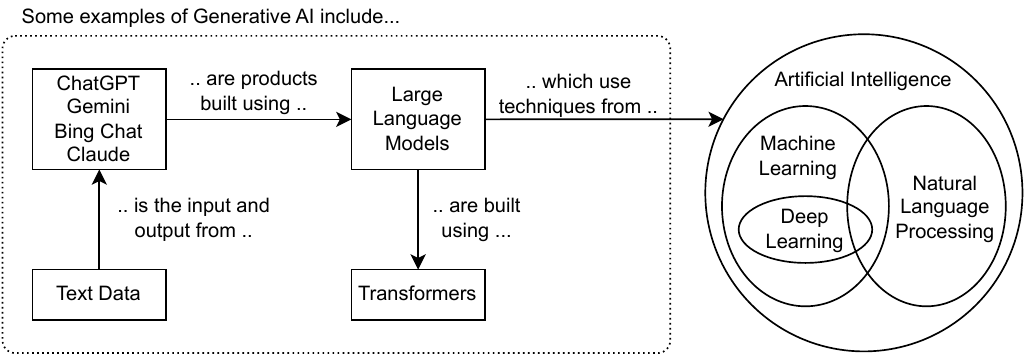

A high-level map of the various terms you’ll become familiar with and how they relate. Generative AI is a description of functionality: generating content, with techniques from AI used to accomplish that goal.

When you sign up for OpenAI’s ChatGPT, you have two options: the GPT-3.5 model that you can use for free or the GPT-4 model that costs money.

If the cleverness of an algorithm is measured by how much information you encode into its design, older techniques often improved performance by being more clever than their predecessors. As reflected by the size of the circles, LLMs have mostly chosen a “dumber” approach of simply using more data and parameters, imposing minimal constraints on what the algorithm can learn.

Summary

- ChatGPT is one type of Large Language Model, which is itself in the larger family of Generative AI/ML. Generative models produce new output, and LLMs are unique in the quality of their output but are extremely costly to make and use.

- ChatGPT is loosely patterned after an incomplete understanding of human brain function and language learning. This is used as inspiration in design, but it does not mean ChatGPT has the same abilities or weaknesses as humans.

- Intelligence is a multi-faceted and hard-to-quantify concept, making it difficult to say if LLMs are intelligent. It is easier to think about LLMs and their potential use in terms of capabilities and reliability.

- Human language must be converted to and from an LLM’s internal representation. How this representation is formed will change what an LLM learns and influence how you can build solutions using LLMs.
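The last point, converting language to and from an internal representation, can be sketched with a toy word-level vocabulary. This is a simplified assumption for illustration: production LLMs typically split text into sub-word pieces rather than whole words, and the helper names below are hypothetical.

```python
def build_vocab(text):
    """Assign each distinct word an integer ID, in order of first appearance."""
    vocab = {}
    for word in text.split():
        if word not in vocab:
            vocab[word] = len(vocab)
    return vocab


def encode(text, vocab):
    """Convert text into a list of integer IDs (raises KeyError on unknown words)."""
    return [vocab[word] for word in text.split()]


def decode(ids, vocab):
    """Convert a list of integer IDs back into text."""
    id_to_word = {i: w for w, i in vocab.items()}
    return " ".join(id_to_word[i] for i in ids)


vocab = build_vocab("bigger often beats clever")
ids = encode("clever often beats bigger", vocab)
print(ids)                 # prints [3, 1, 2, 0]
print(decode(ids, vocab))  # prints "clever often beats bigger"
```

Note that this toy encoder fails on any word it has not seen. How the representation handles unseen or unusual input is one of the design choices that shapes what a model can learn and what you can build with it.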

How Large Language Models Work ebook for free
