1 Why you should care about statistics

Statistics turns raw data into understanding by describing patterns and inferring truths about a larger population from samples. In a world saturated with digital traces, statistical thinking provides a timeless, transferable toolkit for extracting value from information. This chapter argues for a practical, intuition-first approach—favoring clear concepts and simple Python over rote formula lookups—so readers stay focused on insight rather than mechanics.

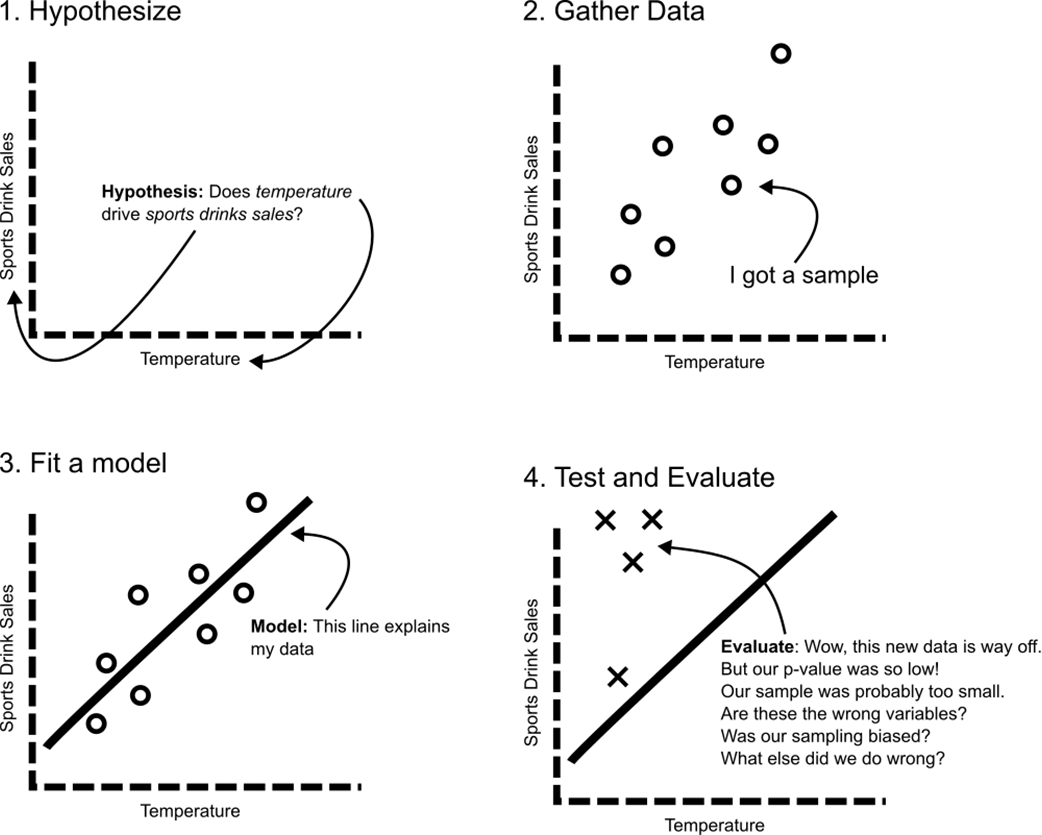

Statistical literacy boosts employability, unlocks underused data, supports decision-making under uncertainty, and strengthens work in machine learning through sound sampling and measurement. Analytical proficiency means distilling thousands of numbers into a few meaningful estimates with quantified confidence. The chapter frames a simple mental model—hypothesize, gather data, fit a model, test on new data—illustrating how tools like time series, hypothesis testing, and regression inform choices in contexts such as inventory planning, reliability, and product optimization.
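The idea of "distilling thousands of numbers into a few meaningful estimates with quantified confidence" can be sketched in a few lines of Python. This is a minimal illustration, not the book's own code. The function name `mean_with_ci` is hypothetical, the order amounts are simulated rather than real, and the interval uses a normal approximation, which assumes a reasonably large sample.

```python
import random
import statistics
from statistics import NormalDist


def mean_with_ci(values, confidence=0.95):
    """Return (mean, lower, upper) using a normal-approximation confidence interval."""
    n = len(values)
    mean = statistics.fmean(values)
    # Standard error of the mean: sample standard deviation / sqrt(n).
    std_err = statistics.stdev(values) / n ** 0.5
    # Two-sided critical value, about 1.96 when confidence = 0.95.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean, mean - z * std_err, mean + z * std_err


# Hypothetical data: 5,000 simulated order amounts, not real measurements.
rng = random.Random(42)
orders = [rng.gauss(50, 12) for _ in range(5_000)]

mean, low, high = mean_with_ci(orders)
print(f"mean = {mean:.2f}, 95% CI = ({low:.2f}, {high:.2f})")
```

Five thousand numbers collapse into three: one estimate and the range it plausibly falls in. For small samples, a critical value from the t distribution would be the more appropriate choice.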

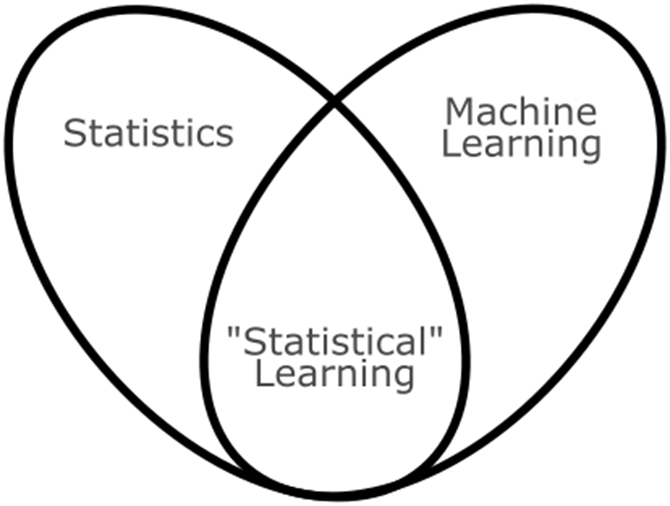

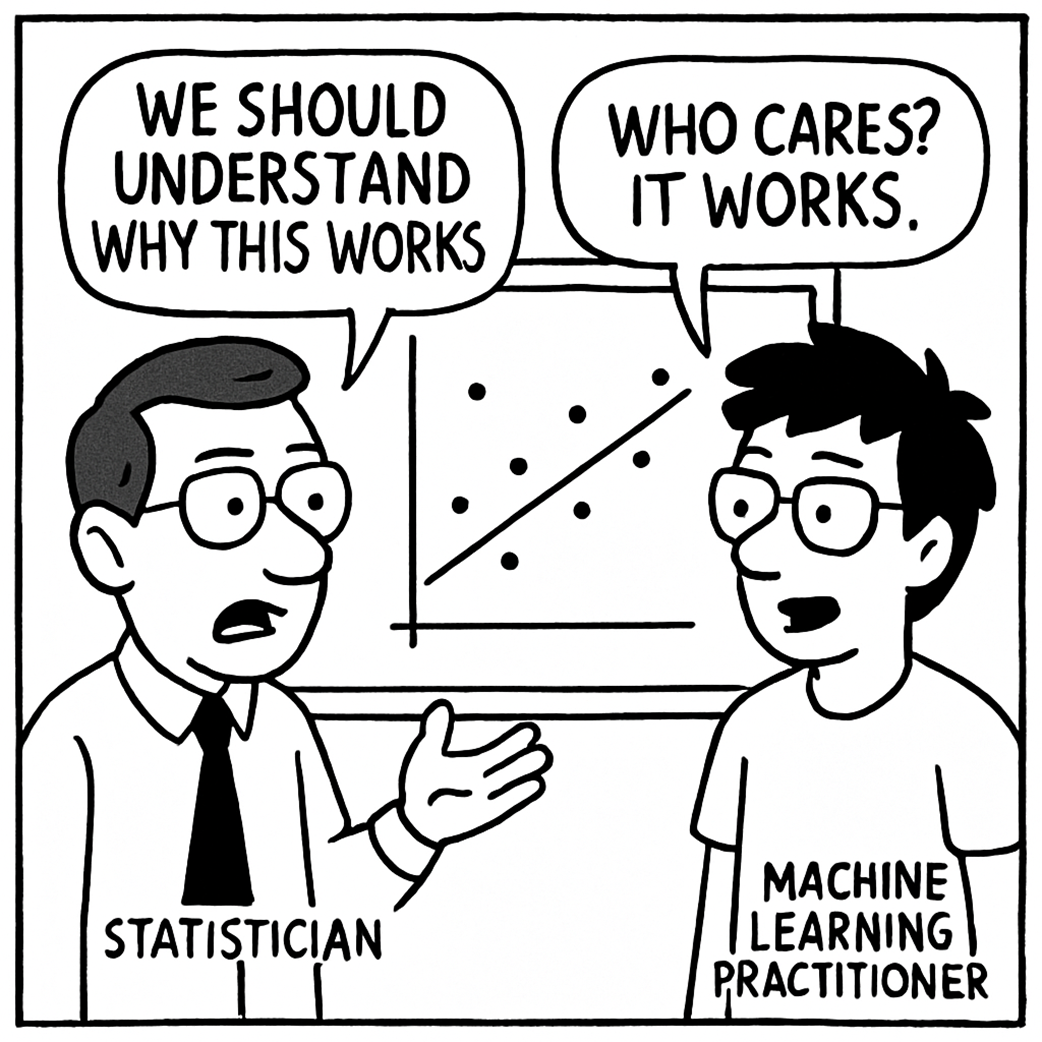

Equally important is a critical eye for studies and claims: incentives, sampling bias, confounders, and overzealous data cleaning can mislead, whether in media narratives or workplace metrics. Many roles benefit—analysts, researchers, engineers, data and ML practitioners—because real systems are noisy and uncertainty must be measured and managed. The chapter contrasts statistics’ emphasis on explanation and uncertainty with machine learning’s predictive focus, urging practitioners to combine both: use statistical rigor to validate models, detect bias, communicate limits, and choose the right level of complexity for reliable real-world outcomes.
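Sampling bias is easy to see in a small simulation. The sketch below is an illustration under made-up assumptions: a hypothetical population of satisfaction scores, and a "survey" that only reaches customers who scored above 60. A random sample lands close to the true average, while the biased sample overstates it badly even though it is just as large.

```python
import random
import statistics

# Hypothetical population: satisfaction scores from 1 to 100 for 10,000 customers.
rng = random.Random(7)
population = [rng.randint(1, 100) for _ in range(10_000)]

# Random sample: every customer has the same chance of being picked.
random_sample = rng.sample(population, 500)

# Biased sample: only customers scoring above 60 "respond to the survey".
responders = [score for score in population if score > 60]
biased_sample = rng.sample(responders, 500)

print("population mean:   ", round(statistics.fmean(population), 1))
print("random sample mean:", round(statistics.fmean(random_sample), 1))
print("biased sample mean:", round(statistics.fmean(biased_sample), 1))
```

Both samples contain 500 observations, so sample size alone cannot fix a flawed collection process.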

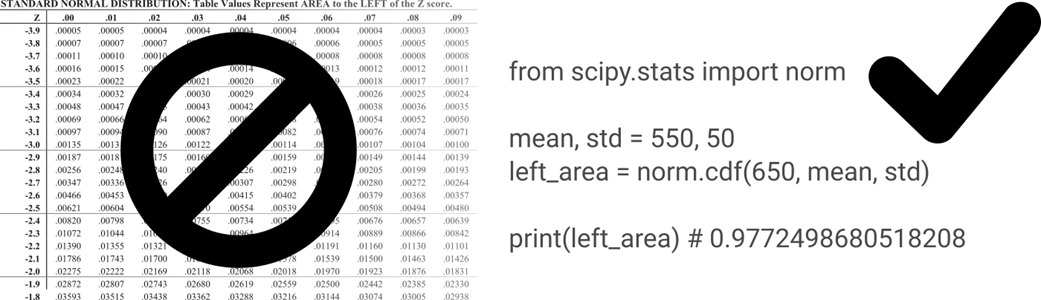

Instead of the classroom approach using lookup tables, we will use Python to simplify our statistics calculations.
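As a small taste of what replacing lookup tables looks like, Python's standard library can compute the values a printed z-table would give you. This sketch uses `statistics.NormalDist`; the book's own examples may rely on other libraries.

```python
from statistics import NormalDist

z = NormalDist()  # standard normal distribution: mean 0, standard deviation 1

# Area to the left of 1.96 (what a z-table row/column lookup gives you).
print(round(z.cdf(1.96), 4))  # 0.975

# Reverse lookup: the value that leaves 2.5% in the upper tail.
print(round(z.inv_cdf(0.975), 2))  # 1.96

# Probability of landing within one standard deviation of the mean.
print(round(z.cdf(1) - z.cdf(-1), 4))  # 0.6827
```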

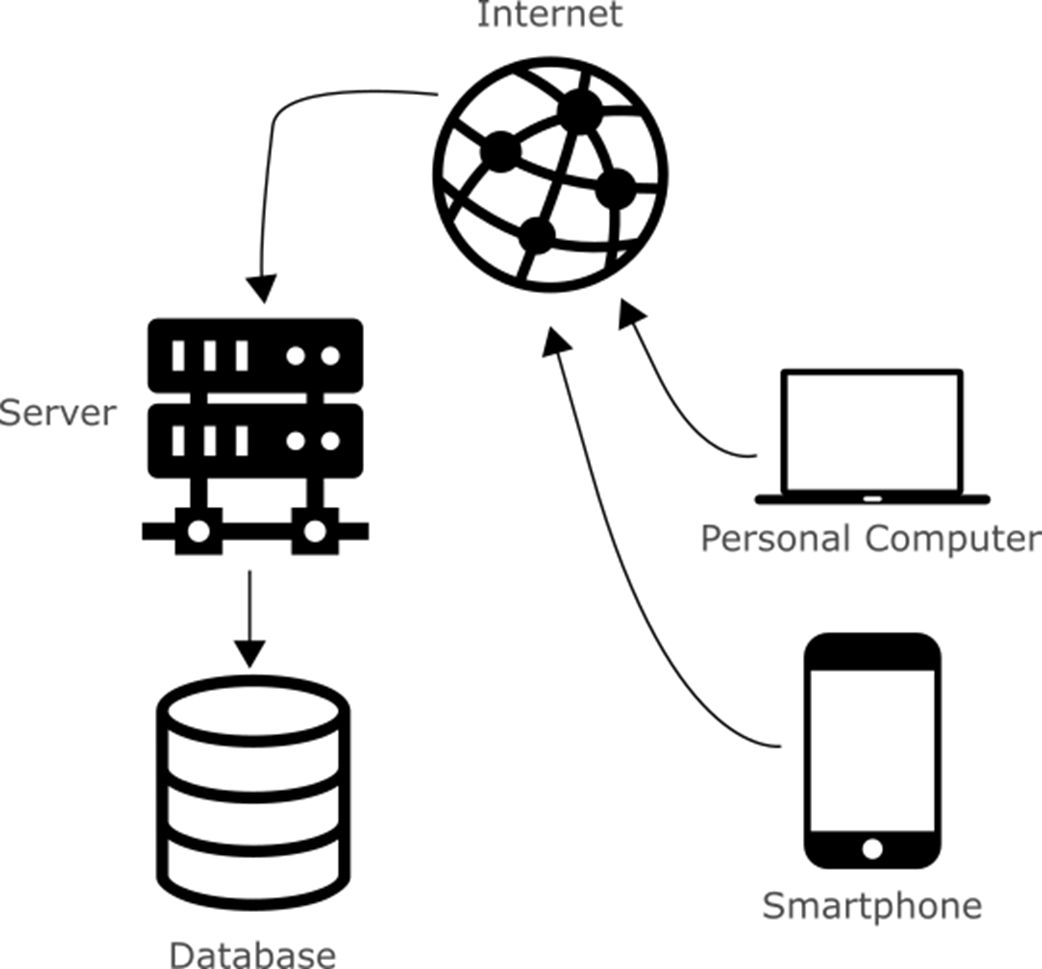

Digital databases, the Internet, and portable electronic devices have enabled data gathering at a global scale.

An example of the four steps in statistics: studying whether temperature has an impact on sports drink sales.

Summary

- Statistics is the practice of describing data and inferring truths from it, usually by analyzing a sample that represents a larger population or domain.

- Statistics is relevant to any profession that involves data, from analysts to machine learning practitioners and software engineers.

- Statistics and machine learning have a lot in common and share many techniques, but they bring different mindsets: statistics emphasizes explanation and quantifying uncertainty, while machine learning focuses on prediction.

- Python is a practical, job-relevant platform for practicing statistical concepts, with readily available, stable libraries for tasks such as plotting (matplotlib), data wrangling (pandas), and numerical computing (NumPy).

- This book covers a mix of theory, hands-on practice, and “real-world” advice, so you never lose sight of the big picture while still getting actionable implementation details.


FAQ

What is statistics and why should I care about it?

Statistics is about using data to describe what’s happening and to infer truths about a larger population from a sample. Because data is everywhere, from ancient censuses to today’s nonstop digital streams, statistical thinking is a timeless way to turn raw numbers into insight and better decisions.

What practical benefits does statistical literacy provide?

- Employability: Spot signals others miss by combining domain expertise with data.

- Data utility: Turn underused data into actionable value.

- Decision making: Quantify uncertainty when choices are risky or unclear.

- Machine learning/AI: Build and evaluate models more thoughtfully.

- Effective sampling: Design better experiments and draw sound conclusions about populations.

Grokking Statistics ebook for free
