1 Small Language Models

Amid the hype surrounding large language models, this chapter sets out to provide a clear, practical foundation for understanding where language models deliver value and where they fall short. It contrasts general-purpose, closed-source LLMs with open and domain-specialized alternatives, outlining benefits, risks, and decision criteria. Along the way, it introduces definitions, core architecture, major application areas, open-source momentum, and the business and technical motivations for adopting domain-specific models, especially small language models (SLMs).

SLMs are built on the same Transformer foundations as LLMs but use far fewer parameters (typically from hundreds of millions up to under ten billion). This yields faster inference, lower memory and energy use, and suitability for on-device, edge, and on-premises deployment with stronger data locality and privacy. SLMs inherit capabilities from self-supervised training and the attention mechanism, and can be specialized efficiently on private, domain-specific data via transfer learning and parameter-efficient fine-tuning (PEFT). The chapter sketches key developments built on the Transformer (encoder-only and decoder-only variants, embeddings, and RLHF) and highlights the growing view that SLMs are both sufficient and economical for many agentic AI workflows, often as part of heterogeneous systems that assign different models to different tasks.
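To make the link between parameter count and footprint concrete, here is a back-of-the-envelope sketch. It assumes that weight memory is simply parameters times bytes per parameter, ignoring activations, the KV cache, and runtime overhead. The second function counts trainable parameters for LoRA, one widely used PEFT method chosen here purely as an example; the 4096 x 4096 layer size and rank 8 are hypothetical values.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumption: weights only (no activations, KV cache, or framework overhead).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Approximate memory needed just to hold the model weights, in GB (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters of one LoRA adapter on a frozen d_out x d_in weight:
    the update is B @ A, with B of shape d_out x rank and A of shape rank x d_in."""
    return rank * (d_in + d_out)


if __name__ == "__main__":
    # Illustrative model sizes: two SLM-scale and one LLM-scale parameter count.
    for n in (0.5e9, 3e9, 7e9, 70e9):
        print(f"{n / 1e9:>5.1f}B params: fp16 {weight_memory_gb(n):6.1f} GB, "
              f"int4 {weight_memory_gb(n, 'int4'):5.1f} GB")

    # Hypothetical 4096 x 4096 projection matrix adapted with LoRA rank 8.
    full = 4096 * 4096
    lora = lora_trainable_params(4096, 4096, rank=8)
    print(f"full matrix: {full:,} params, LoRA r=8: {lora:,} ({lora / full:.2%})")
```

Under these assumptions, a 7B-parameter model needs about 14 GB for fp16 weights, while the rank-8 adapter trains well under 1% of the parameters of the matrix it adapts, which is why PEFT makes domain specialization cheap.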

Practically, language models now power a broad range of tasks—classification, QA, summarization, code generation, basic reasoning, and more—but generalist closed-source LLMs carry notable risks: data leaving organizational boundaries, limited transparency and reproducibility, potential bias and hallucinations, and guardrail gaps for generated code. Open-source models provide a strong alternative, cutting development and training costs by starting from pretrained checkpoints and enabling private, compliant deployment. For regulated or sensitive domains, domain-specific models typically yield higher accuracy and better alignment to context, and SLMs further reduce operational footprint and environmental impact. The book’s focus is on making such models practical: optimizing and quantizing for efficient inference, serving through diverse APIs, deploying across constrained hardware, and integrating with RAG and agentic patterns.
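Quantization, one of the optimizations mentioned above, can be illustrated with a minimal sketch. It assumes simple symmetric per-tensor int8 quantization; real toolchains typically use finer-grained schemes (per-channel or group-wise scales, 4-bit formats). Each weight is stored as an 8-bit integer plus one shared float scale, cutting weight storage by roughly 4x relative to fp32. The weight values are made up for illustration.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: scale = max|w| / 127, q = round(w / scale)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp to the symmetric int8 range [-127, 127] to guard against rounding overshoot.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.12, -0.5, 0.33, 0.0, 0.49]  # hypothetical weights
    q, scale = quantize_int8(w)
    w_hat = dequantize_int8(q, scale)
    max_err = max(abs(a - b) for a, b in zip(w, w_hat))
    print("int8 codes:", q)
    print(f"scale={scale:.5f}, max abs error={max_err:.5f}")
```

The rounding error per weight is bounded by half the scale, so accuracy loss stays small when weights are not dominated by a few large outliers. Handling such outliers is the main reason production schemes use per-channel or group-wise scales.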

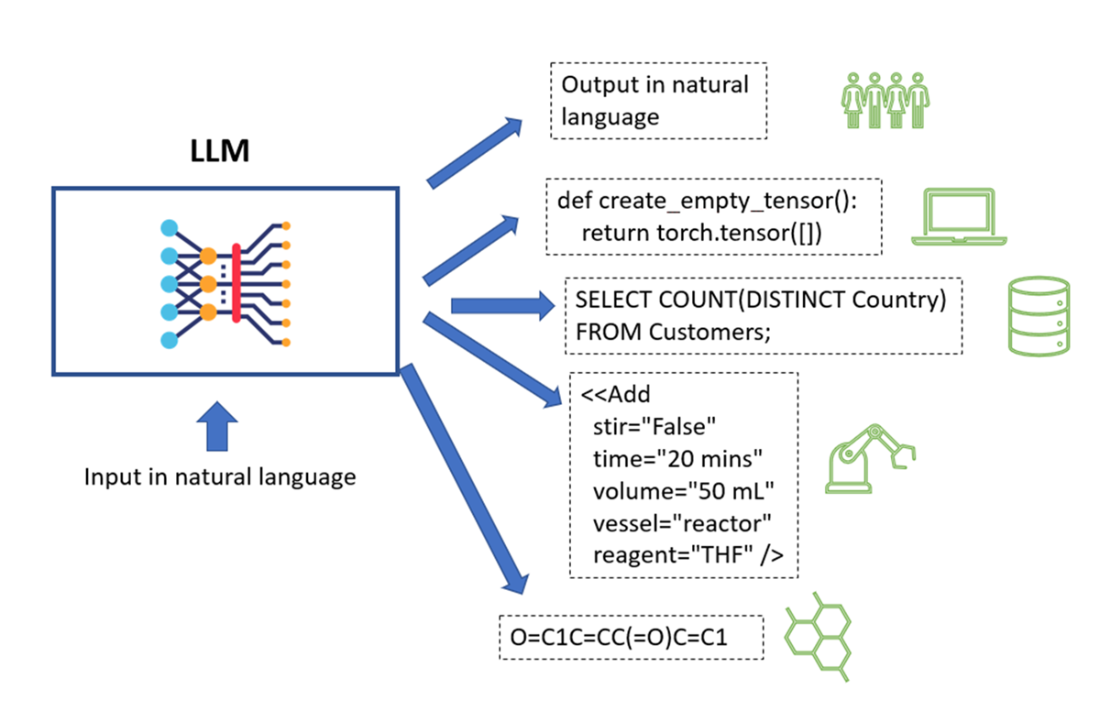

Examples of the diverse content an LLM can generate.

The timeline of LLMs since 2019 (image taken from paper [3])

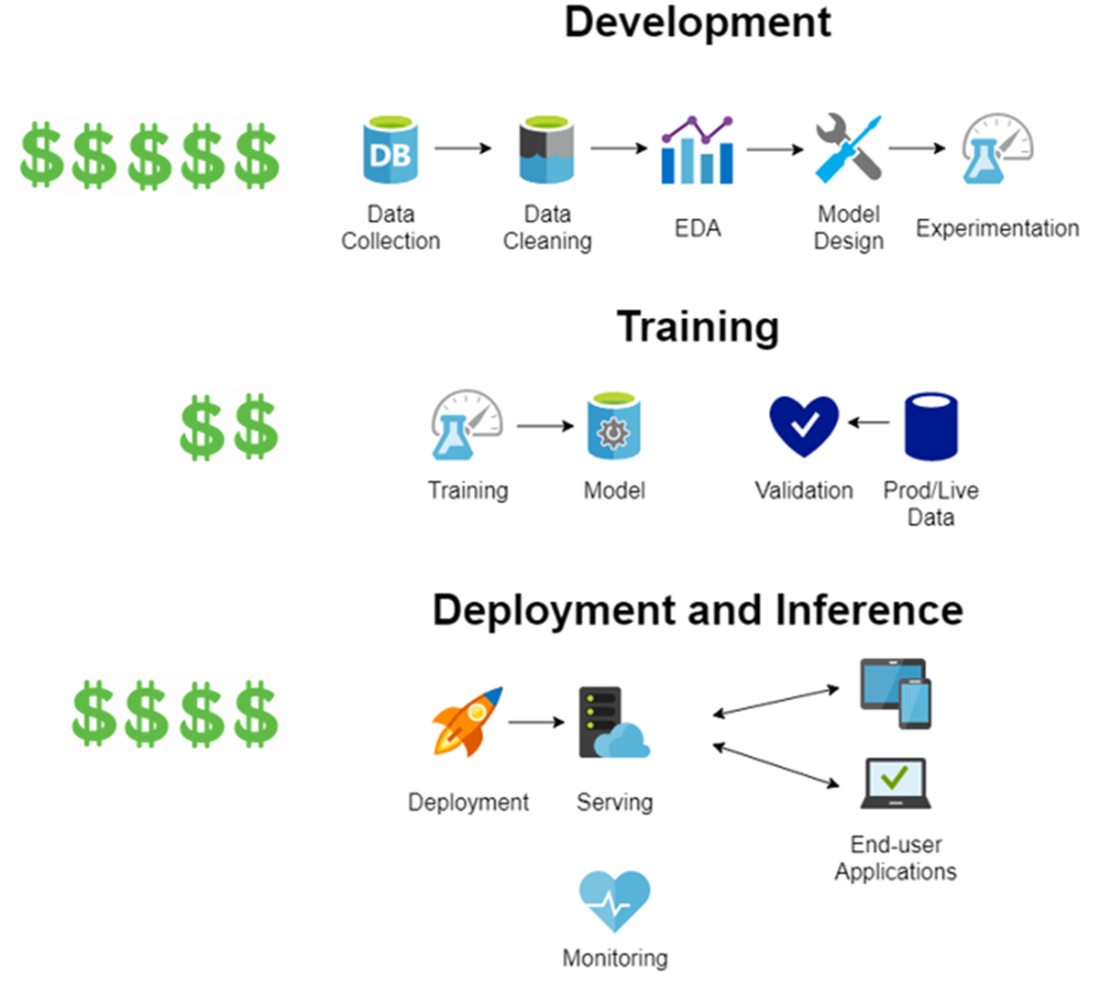

Order of magnitude of costs for each phase of LLM implementation from scratch.

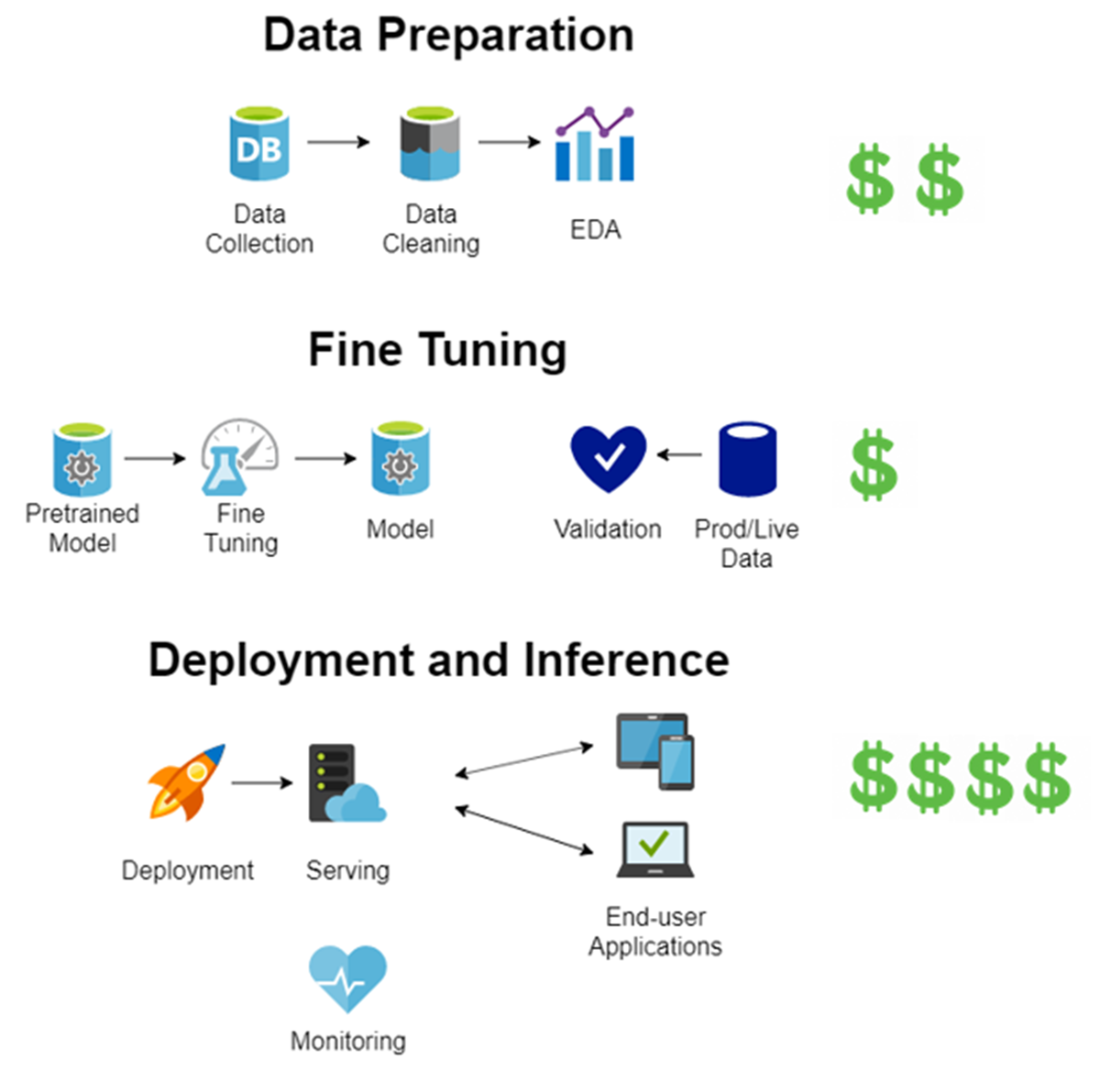

Order of magnitude of costs for each phase of LLM implementation when starting from a pretrained model.

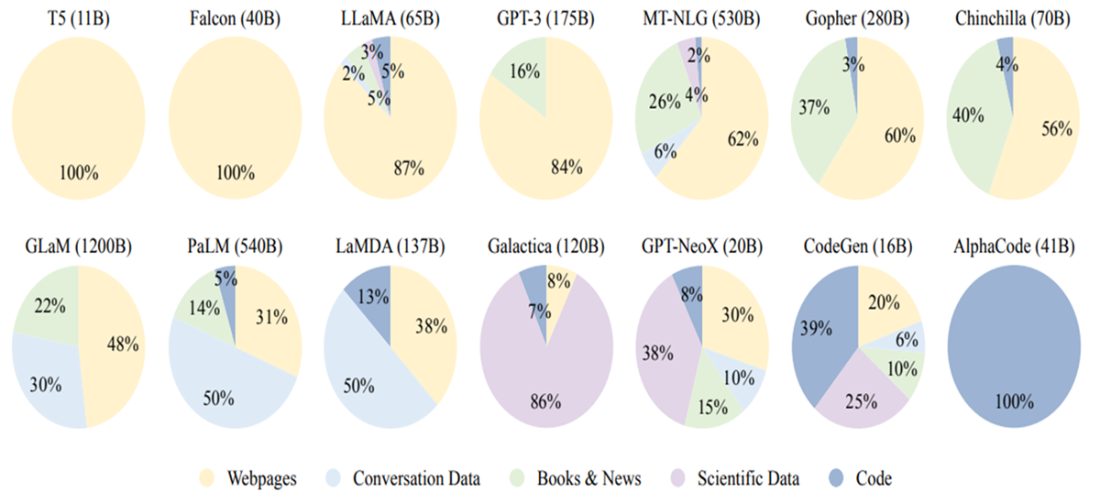

Proportions of data source types used to train some popular LLMs.

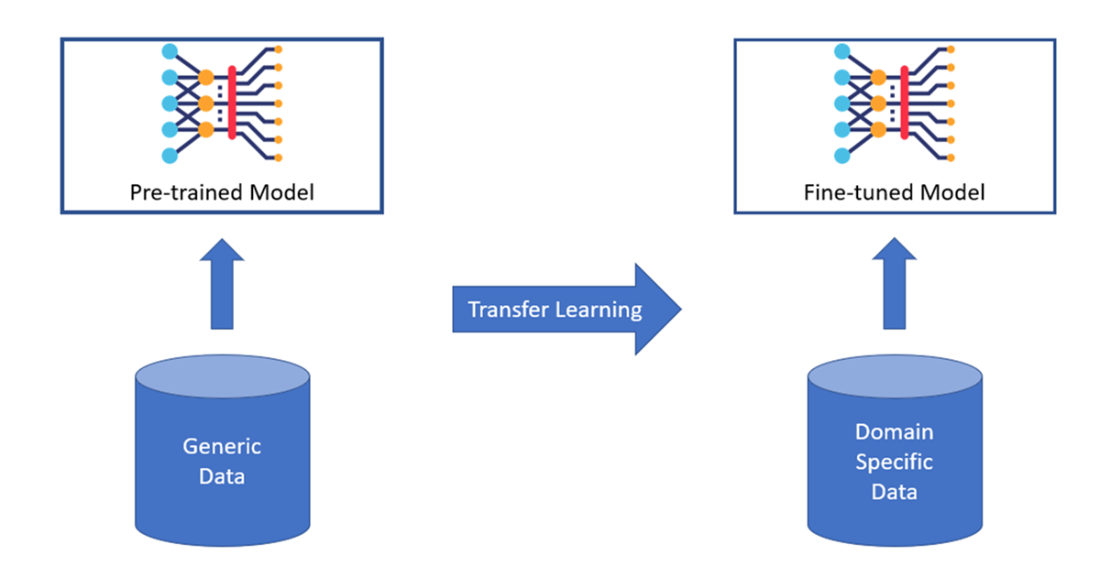

Specializing a generic model to a given domain.

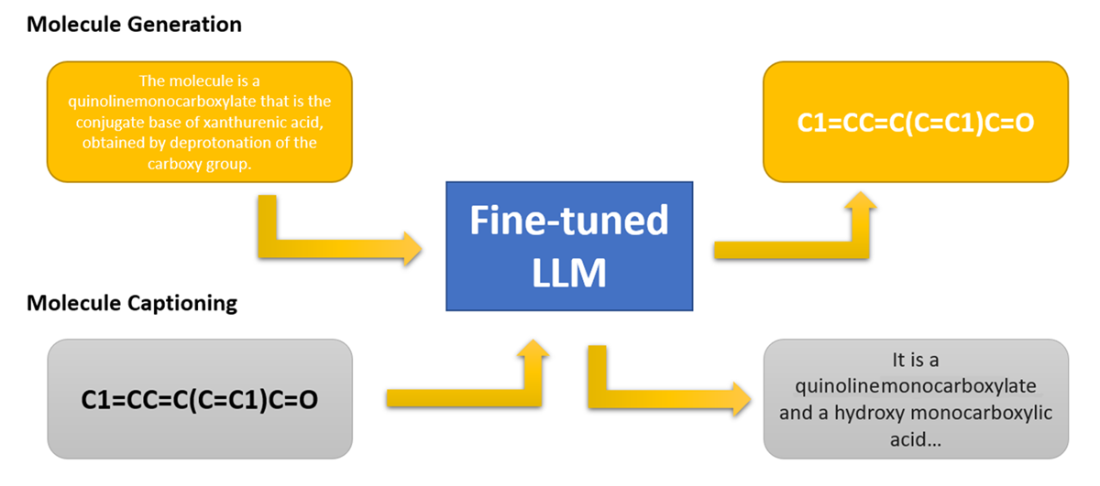

An LLM trained for tasks on molecular structures (generation and captioning).

Summary

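- SLMs are Transformer-based language models with far fewer parameters than LLMs (typically hundreds of millions to under ten billion), which makes them faster and cheaper to run and suitable for on-device, edge, and on-premises deployment.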

- Transformers use self-attention to process all tokens of a sequence in parallel instead of one word at a time.

- Self-supervised learning creates training labels automatically from text data without human annotation.

- BERT models use only the encoder part of Transformers for classification and prediction tasks.

- GPT models use only the decoder part of Transformers for text generation tasks.

- Word embeddings convert words into numerical vectors that capture semantic relationships.

- RLHF (reinforcement learning from human feedback) uses reinforcement learning guided by human preference judgments to improve LLM responses.

- LLMs can generate many kinds of symbolic content, including code, math expressions, and structured data.

- Open-source LLMs reduce development costs by providing pretrained models as starting points.

- Transfer learning adapts pre-trained models to specific domains using domain-specific data.

- Generalist LLMs hosted outside the organization's network risk leaking sensitive data.

- Closed-source models lack transparency about their training data and model architecture.

- Domain-specific LLMs typically provide better accuracy on specialized tasks than generalist models.

- Smaller specialized models require less computational power than large generalist models.

- Fine-tuning costs significantly less than training models from scratch.

- Regulatory compliance often requires domain-specific models with known training data.
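Several of the summary points (self-attention, encoder-only versus decoder-only models, and self-supervised labels) can be made concrete with a small pure-Python sketch. It assumes the standard scaled dot-product attention formula, softmax(QK^T / sqrt(d_k))V, with Q, K, and V passed in directly: there are no learned projection matrices and no multiple heads, and the toy vectors and tokens are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax; -inf entries receive zero weight."""
    m = max(xs)  # subtract the max to avoid overflow in exp
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(q, k, v, causal=False):
    """Scaled dot-product attention over lists of row vectors (one per token).

    causal=False: every token attends to every token (encoder-only, BERT-style).
    causal=True: token i attends only to tokens 0..i (decoder-only, GPT-style).
    Each position is computed independently; real implementations do this for
    the whole sequence at once with batched matrix multiplications.
    """
    d_k = len(k[0])
    outputs = []
    for i, qi in enumerate(q):
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        if causal:
            # Mask future positions so they get zero attention weight.
            scores = [s if j <= i else -math.inf for j, s in enumerate(scores)]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * vj[c] for w, vj in zip(weights, v))
                        for c in range(len(v[0]))])
    return outputs


def next_token_pairs(tokens):
    """Self-supervised labels: the target for each prefix is simply the next token."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy 2-d embeddings for 3 tokens
    print("causal:", self_attention(x, x, x, causal=True))
    print("bidirectional:", self_attention(x, x, x, causal=False))
    print(next_token_pairs(["small", "models", "run", "locally"]))
```

With the causal mask, the first token can only attend to itself, while the last token sees the whole sequence in both modes. The `next_token_pairs` helper shows why no human annotation is needed: the labels come directly from the text.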

Domain-Specific Small Language Models ebook for free