1 Supercharging Traditional Programming with Generative AI

The chapter opens by framing the rapid ascent of Generative AI and Large Language Models as a paradigm shift that is reshaping software development across industries. Beyond creating content, these models act as problem-solvers that can reason over context, assist with complex tasks, and augment traditional engineering workflows. Yet integrating heterogeneous models, managing evolving services, and operationalizing solutions introduces new complexity. To bridge this gap—especially for the .NET ecosystem—the chapter introduces Microsoft’s Semantic Kernel as an orchestration layer that streamlines model usage and aligns generative capabilities with real-world application needs. It also positions Semantic Kernel among adjacent tools, noting LangChain’s Python-centric chaining strengths and ML.NET’s complementary focus on classical machine learning and AutoML.

Semantic Kernel is presented as a lightweight, open-source SDK for building AI-orchestrated applications that work with LLMs, SLMs, and multimodal services. Its core value lies in abstraction and modularity—plugins encapsulate both semantic (AI-driven) and native (conventional) functions—while remaining model-agnostic across providers like OpenAI, Azure OpenAI, Mistral, Gemini, and HuggingFace. Enterprise-grade features such as interoperability with C#, Python, and Java, scalability and telemetry, and responsible AI filters are emphasized, alongside advanced capabilities for memory, planning, and extensibility. A concise code example illustrates how few lines are needed to prompt a model via the kernel, showing how complex instructions can be decomposed into actionable steps and hinting at richer scenarios through parameterized prompts, reusable plugins, and pluggable services.

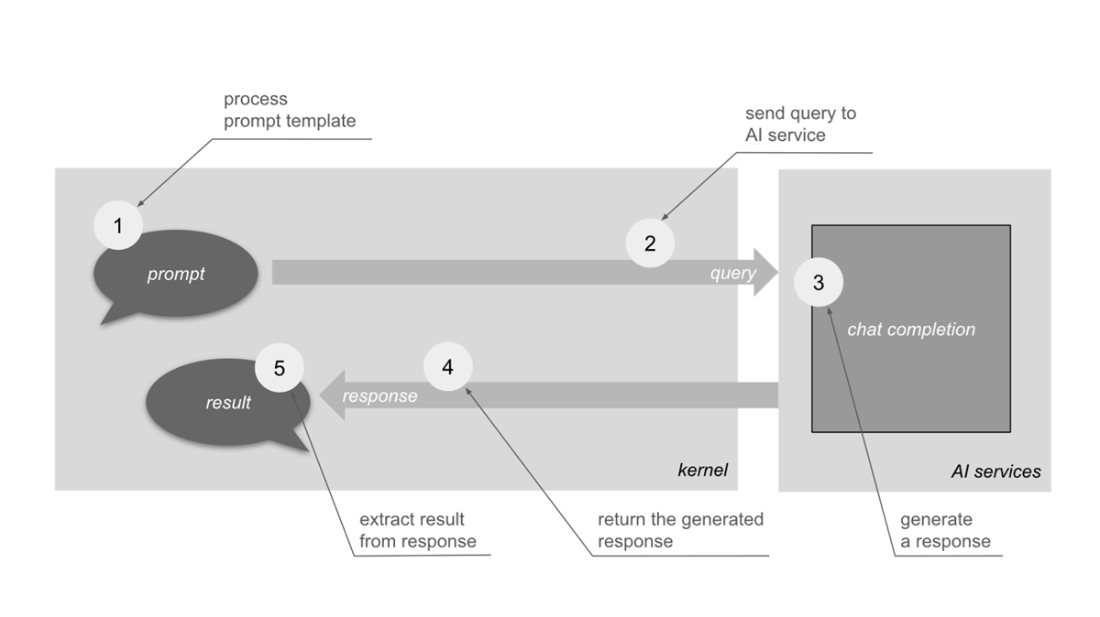

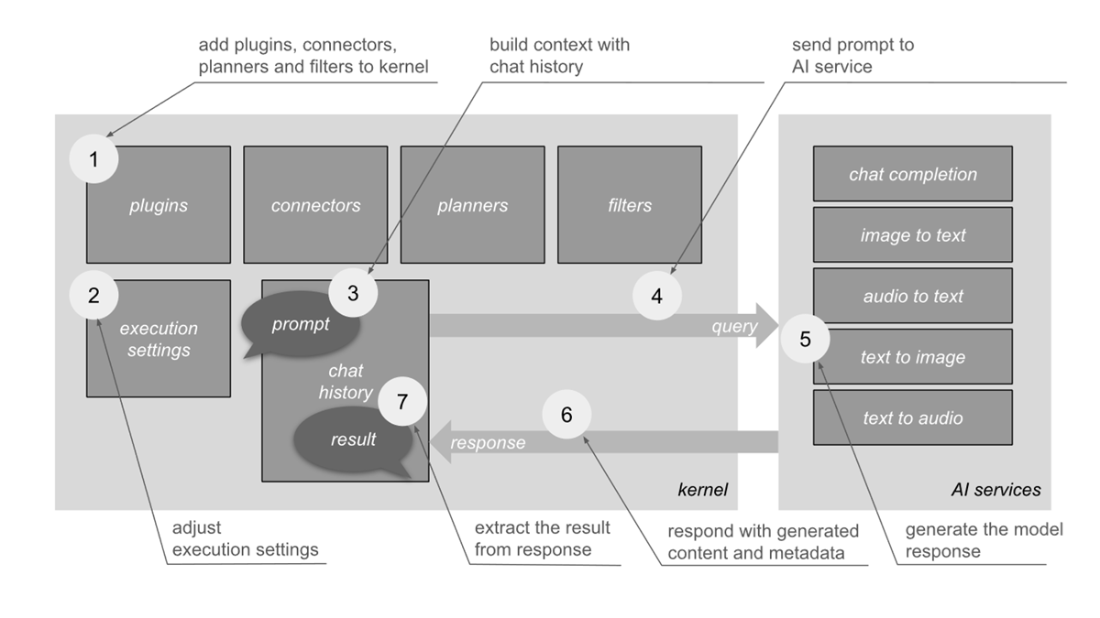

To explain how it works, the chapter uses a human-body analogy that maps senses to connectors, the nervous system to plugins, planning to planners, selective attention to filters, and short-term memory to chat history, with AI services playing the role of “thinking.” It then details two architectural views: a basic flow that transforms prompts into queries, sends them to an AI service, and parses results; and an advanced flow that adds connectors, planners, filters, execution settings, and chat history to support complex, context-aware interactions. This structured approach helps developers reason about composing capabilities, controlling behavior, and scaling solutions. The chapter concludes by setting expectations for subsequent hands-on chapters that build chatbots and copilots step by step using Semantic Kernel’s orchestration patterns.

The image compares human cognitive processes to the architecture of Microsoft Semantic Kernel. Sensory systems such as the eyes and ears gather data, the brain processes that information and forms memories, and the mind filters out irrelevant stimuli to focus on important details. Semantic Kernel mirrors this filtering, planning, and adaptation through its filters and planners. (Image generated using Bing Copilot.)

The diagram illustrates Semantic Kernel core functionality: building a prompt, sending the query to an AI service for chat completion, receiving the response from the AI service, and parsing the response into a meaningful result. These are essential steps for interacting with large language models through Semantic Kernel.
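
The four steps in this basic flow can be sketched in a few lines. The following is a minimal illustration in plain Python, not the Semantic Kernel SDK: `build_prompt`, `fake_chat_completion`, `parse_response`, and `run_basic_flow` are hypothetical names, and the AI service is a stub that returns canned text so the example runs without an API key.

```python
from typing import Callable

# Hypothetical stand-in for an AI service: takes a query, returns raw text.
AIService = Callable[[str], str]


def build_prompt(template: str, **variables: str) -> str:
    """Step 1: fill a parameterized prompt template."""
    return template.format(**variables)


def fake_chat_completion(query: str) -> str:
    """Steps 2-3: stub for a chat-completion call (no network access)."""
    return "1. Boil water\n2. Add tea leaves\n3. Steep for three minutes"


def parse_response(raw: str) -> list[str]:
    """Step 4: turn the raw text into a list of steps."""
    steps = []
    for line in raw.splitlines():
        line = line.strip()
        if not line:
            continue
        # Drop a leading "1." style number when one is present.
        number, sep, rest = line.partition(". ")
        steps.append(rest if sep and number.isdigit() else line)
    return steps


def run_basic_flow(service: AIService, template: str, **variables: str) -> list[str]:
    prompt = build_prompt(template, **variables)  # build the prompt
    raw = service(prompt)                         # send query, receive response
    return parse_response(raw)                    # parse into a usable result


steps = run_basic_flow(fake_chat_completion, "List the steps to {task}.", task="make tea")
print(steps)
```

Because the service is passed in as a function, swapping the stub for a real provider would not change the surrounding flow, which is the kind of abstraction the chapter attributes to the kernel.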

The diagram illustrates Semantic Kernel's advanced workflow: integrating connectors, plugins, planners, and filters; configuring execution settings; building prompts and managing chat history; querying AI for chat completion; updating chat history with responses; and parsing results into meaningful output.
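
A reduced sketch of this advanced flow is shown here, again in plain Python rather than the Semantic Kernel SDK. It covers only filters, execution settings, and chat history; connectors, plugins, and planners are left out for brevity. Every name (`ExecutionSettings`, `ChatHistory`, `redact_email_filter`, `stub_chat_service`, `ask`) is hypothetical, and the service is a stub that echoes the last user message.

```python
import re
from dataclasses import dataclass, field


@dataclass
class ExecutionSettings:
    # Hypothetical settings; real AI services expose their own options.
    temperature: float = 0.2
    max_tokens: int = 256


@dataclass
class ChatHistory:
    messages: list = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})


def redact_email_filter(text: str) -> str:
    """Filter: mask anything that looks like an email address."""
    return re.sub(r"[\w.+-]+@[\w-]+\.[\w.-]+", "[redacted]", text)


def stub_chat_service(messages: list, settings: ExecutionSettings) -> str:
    """Stand-in for an AI service; echoes the last user message."""
    last = messages[-1]["content"]
    return f"(temp={settings.temperature}) You said: {last}"


def ask(history, user_input, service, filters, settings) -> str:
    for apply_filter in filters:          # run filters before the prompt leaves the app
        user_input = apply_filter(user_input)
    history.add("user", user_input)       # extend the chat history with the prompt
    reply = service(history.messages, settings)  # query the service with full context
    history.add("assistant", reply)       # update the chat history with the response
    return reply.strip()                  # parse into the final result


history = ChatHistory()
reply = ask(
    history,
    "Email me at jane@example.com",
    stub_chat_service,
    [redact_email_filter],
    ExecutionSettings(),
)
print(reply)
print(len(history.messages))
```

Keeping the history as an explicit object means each later call sees the earlier turns, which is what makes the interaction context-aware.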

Summary

- Generative AI and LLMs are transforming industries, solving complex challenges across various fields

- Microsoft's Semantic Kernel simplifies integration of generative AI models for AI-orchestrated applications

- Semantic Kernel's architecture is analogous to human body functions, which makes it easier to understand

- Core components: connectors, plugins, planners, filters, chat history, execution settings, and AI services

- Semantic Kernel enables AI-powered applications with minimal code and offers a wide range of integration possibilities

FAQ

What are Generative AI and Large Language Models (LLMs), and why are they important?

Generative AI creates novel content (text, code, images, audio, video) and can solve problems by understanding context and producing coherent outputs. LLMs are a prominent form of generative AI, enabling human-like text generation and even code synthesis. Their rapid progress is transforming industries and expanding what software can do, from intelligent chatbots to proactive copilots.

Why should .NET developers consider Microsoft Semantic Kernel?

Integrating generative models directly can be complex and model-specific. Semantic Kernel abstracts this complexity, standardizes interactions across different AI services, and adds enterprise-ready capabilities (telemetry, security, scalability). It lets you focus on application logic while easily swapping or combining models as the landscape evolves.

What exactly is Semantic Kernel?

Semantic Kernel is a lightweight, open-source SDK that simplifies building AI-orchestrated applications. It primarily targets LLMs but also supports SLMs and multimodal services (for example, text-to-video or video-to-text). It provides an orchestration layer that works across multiple AI providers to help you compose prompts, functions, and workflows.

What are the key features of Semantic Kernel?

- Abstraction: Shields developers from low-level model and API differences.

- Modularity: Plugin architecture for both AI-powered and conventional functions.

- Flexibility: Model-agnostic; works with OpenAI, Azure OpenAI, Mistral, Gemini, HuggingFace, and more.

- Interoperability: Integrates with C#, Python, and Java codebases.

- Scalability and observability: Enterprise-ready deployments with telemetry.

- Security and responsibility: Built-in filtering (for example, moderation, bias/PII checks).

- Advanced capabilities: Memory (chat history), planning, and extensible plugins.

Building AI Agents in .NET ebook for free
