1 Understanding reasoning models

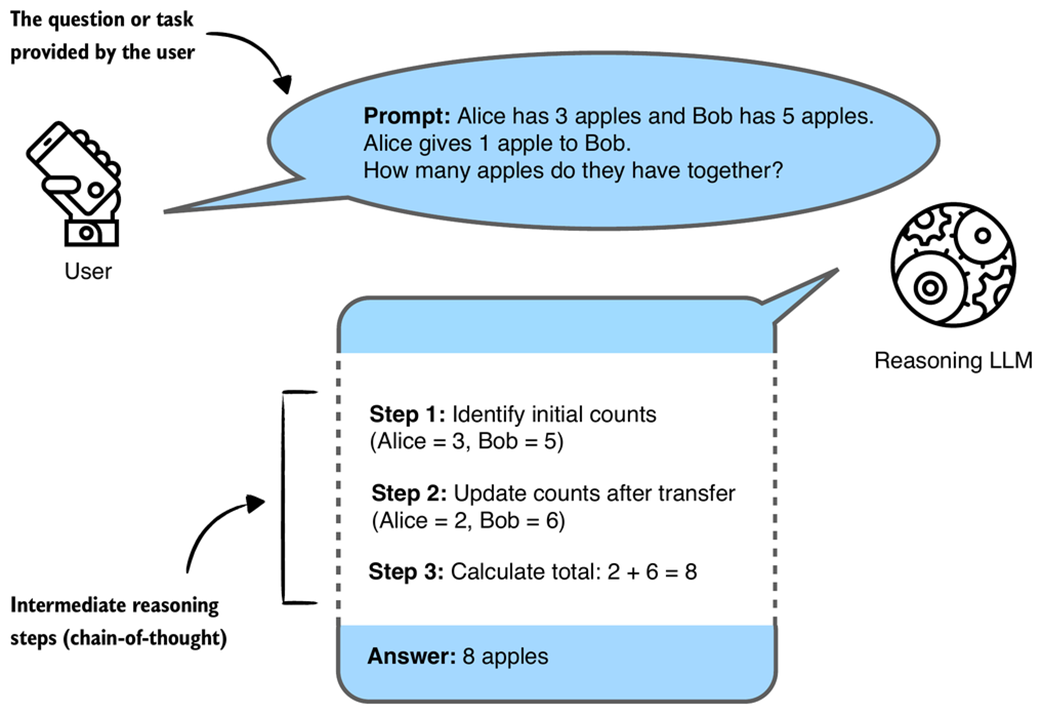

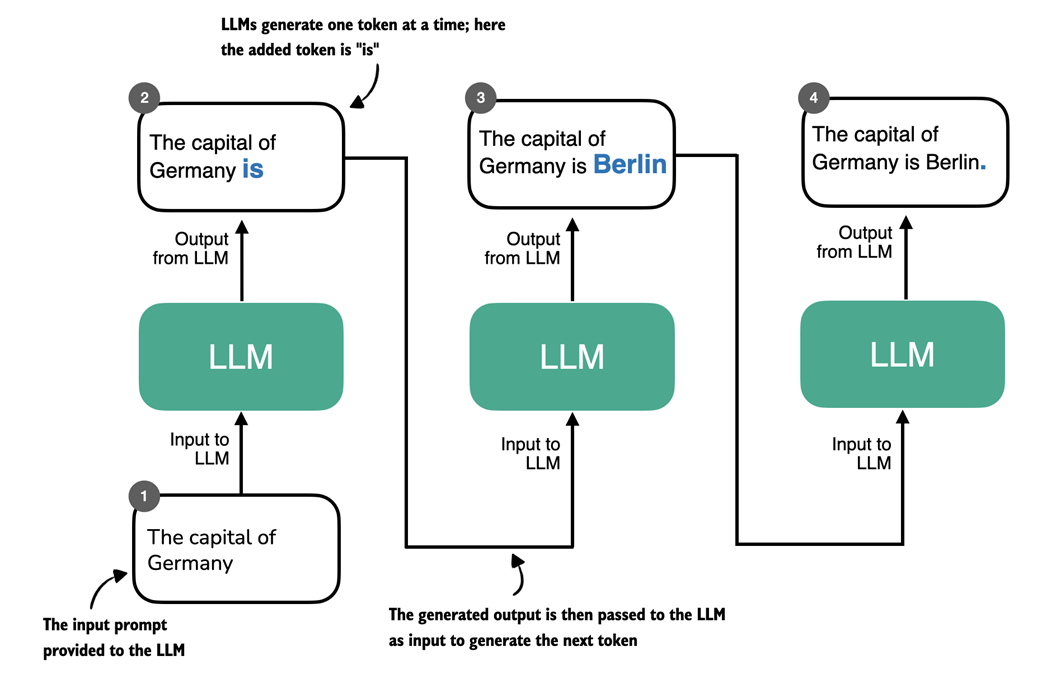

Reasoning in large language models (LLMs) is framed as the ability to show intermediate steps on the way to an answer, often called chain-of-thought. This step-by-step articulation tends to boost accuracy on complex tasks such as coding, logic puzzles, and multi-step math, and it is central to making more agentic AI practical. Unlike deterministic, rule-based systems, LLMs generate these steps probabilistically via next-token prediction, so their “reasoning” can be persuasive without being guaranteed to be logically sound. The chapter establishes a practical, hands-on focus and clarifies that terms like reasoning and thinking are used in an engineering sense rather than to imply human-like cognition.

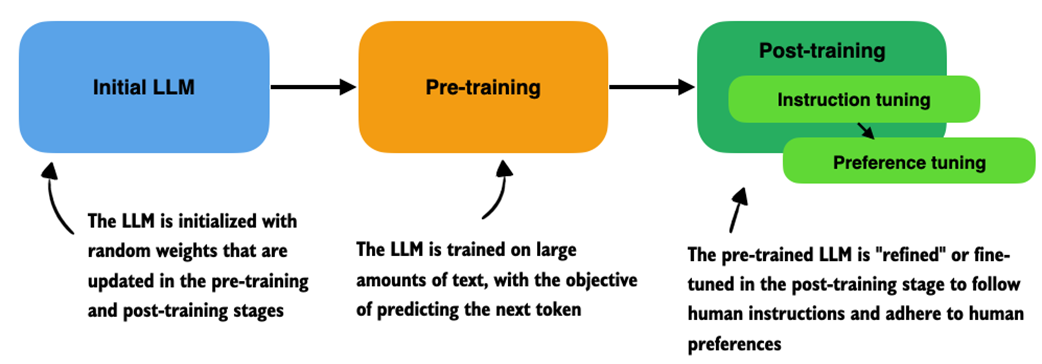

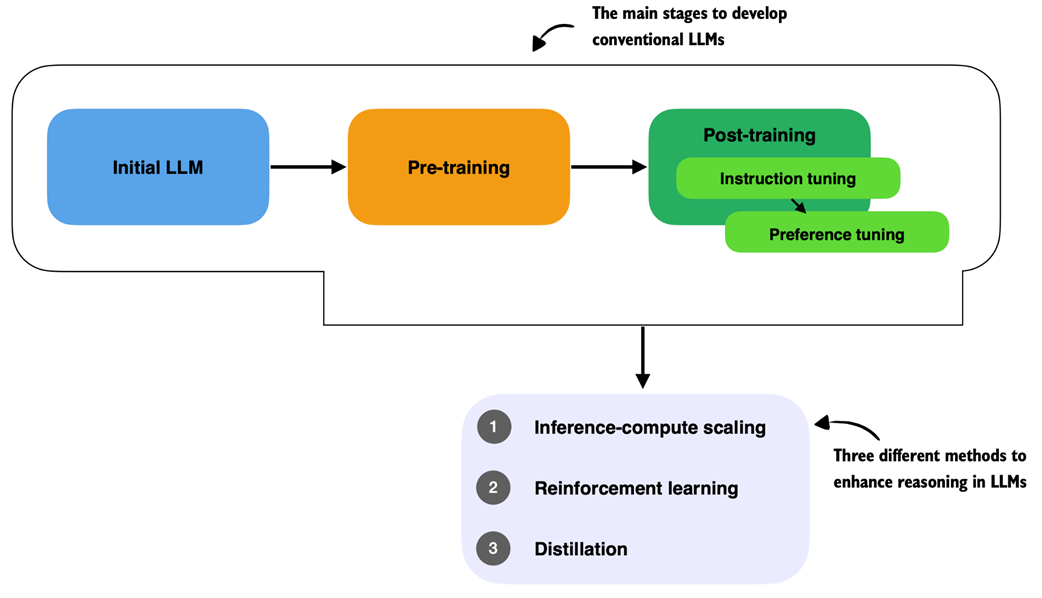

The chapter revisits the standard LLM pipeline—massive pre-training for next-token prediction followed by post-training through instruction tuning and preference tuning—and positions reasoning enhancements as additional layers on top. It groups the main approaches into three categories: inference-time compute scaling (spending more thinking time or samples at inference without changing weights), reinforcement learning with verifiable rewards to update weights (distinct from RLHF used for preference alignment), and distillation that transfers behaviors from stronger teachers to smaller students. It contrasts pattern matching with logical reasoning, noting that LLMs often simulate reasoning by leveraging statistical associations rather than explicit rule-checking, which works well in familiar contexts but can falter on novel or deeply compositional problems.
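What makes a reward "verifiable" is that it comes from a deterministic check against a known reference answer rather than from a learned reward model, as in RLHF. The following is a minimal sketch of such a reward for math problems. It assumes, hypothetically, that the model is prompted to put its final answer inside `\boxed{...}`; the function names `extract_boxed_answer` and `verifiable_reward` are illustrative and not taken from any library.

```python
import re
from typing import Optional


def extract_boxed_answer(text: str) -> Optional[str]:
    """Return the content of the last \\boxed{...} in the text, or None.

    Assumed (hypothetical) output convention: the model wraps its final
    answer in \\boxed{...}. Nested braces are not handled in this sketch.
    """
    matches = re.findall(r"\\boxed\{([^{}]*)\}", text)
    return matches[-1].strip() if matches else None


def verifiable_reward(model_output: str, reference: str) -> float:
    """Binary reward: 1.0 if the extracted answer matches the reference, else 0.0."""
    answer = extract_boxed_answer(model_output)
    if answer is None:
        return 0.0  # no parsable final answer earns no reward
    # Minimal normalization: ignore spaces when comparing.
    return 1.0 if answer.replace(" ", "") == reference.replace(" ", "") else 0.0


print(verifiable_reward(r"6 * 7 = 42, so the result is \boxed{42}", "42"))  # 1.0
```

During RL training, a score like this would be computed for each sampled response and used to update the weights; real setups usually need more careful answer normalization (for example, treating `0.5` and `1/2` as equal).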

The motivation for building reasoning models from scratch is both strategic and practical: the field is rapidly shifting toward models that know when and how long to think, yet reasoning is not universally beneficial. These systems can be costlier due to longer outputs and multi-sample workflows, and may overthink simple tasks, so choosing the right tool matters. Implementing methods end to end helps practitioners understand these trade-offs. The roadmap starts from a conventional, post-trained base model, establishes evaluation to track gains, and then adds inference-time techniques followed by training-based methods, equipping readers to design, prototype, and assess modern reasoning approaches.

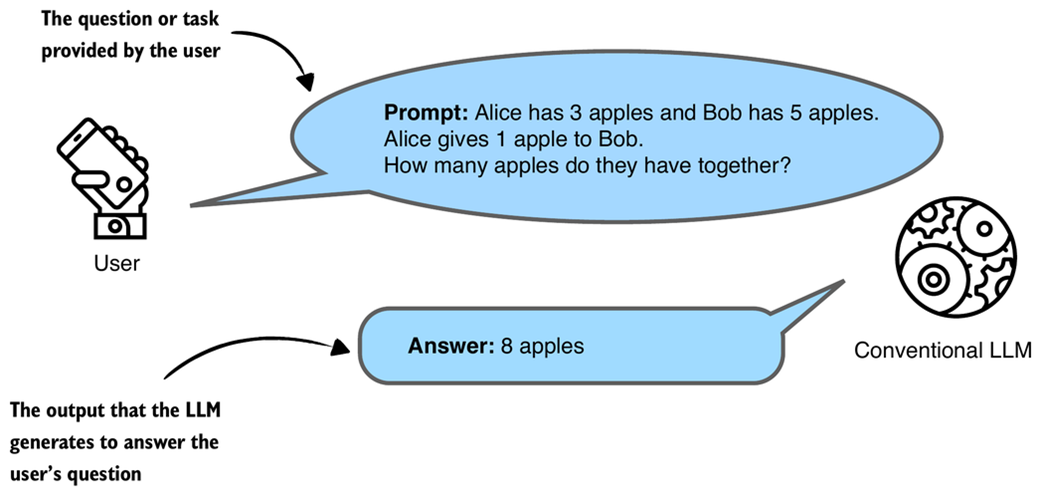

A simplified illustration of how a conventional, non-reasoning LLM might respond to a question with a short answer.

A simplified illustration of how a reasoning LLM might tackle a multi-step reasoning task using a chain-of-thought. Rather than just recalling a fact, the model combines several intermediate reasoning steps to arrive at the correct conclusion. The intermediate reasoning steps may or may not be shown to the user, depending on the implementation.
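Whether the intermediate steps are shown to the user is an application-level decision. As a minimal sketch, assume (hypothetically) that the model wraps its reasoning in `<think>...</think>` tags and writes the user-facing answer after the closing tag; the helper `split_reasoning` is an illustrative name, not a library function.

```python
import re
from typing import Tuple


def split_reasoning(output: str) -> Tuple[str, str]:
    """Split a model response into (reasoning, final_answer).

    Assumes the hypothetical convention that intermediate steps are wrapped
    in <think>...</think>; everything after the closing tag is the answer.
    """
    match = re.search(r"<think>(.*?)</think>", output, flags=re.DOTALL)
    if match is None:
        return "", output.strip()  # no reasoning block: the whole text is the answer
    reasoning = match.group(1).strip()
    answer = output[match.end():].strip()
    return reasoning, answer


response = (
    "<think>The train leaves at 9:00 and travels for 2.5 hours, "
    "so it arrives at 11:30.</think>The train arrives at 11:30."
)
reasoning, answer = split_reasoning(response)
print(answer)  # The train arrives at 11:30.
```

An application could then log or hide `reasoning` and display only `answer`.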

Overview of a typical LLM training pipeline. The process begins with a model whose weights are randomly initialized, followed by pre-training on large-scale text data to learn language patterns by predicting the next token. Post-training then refines the model through instruction fine-tuning and preference fine-tuning, which enable the LLM to better follow human instructions and align with human preferences.

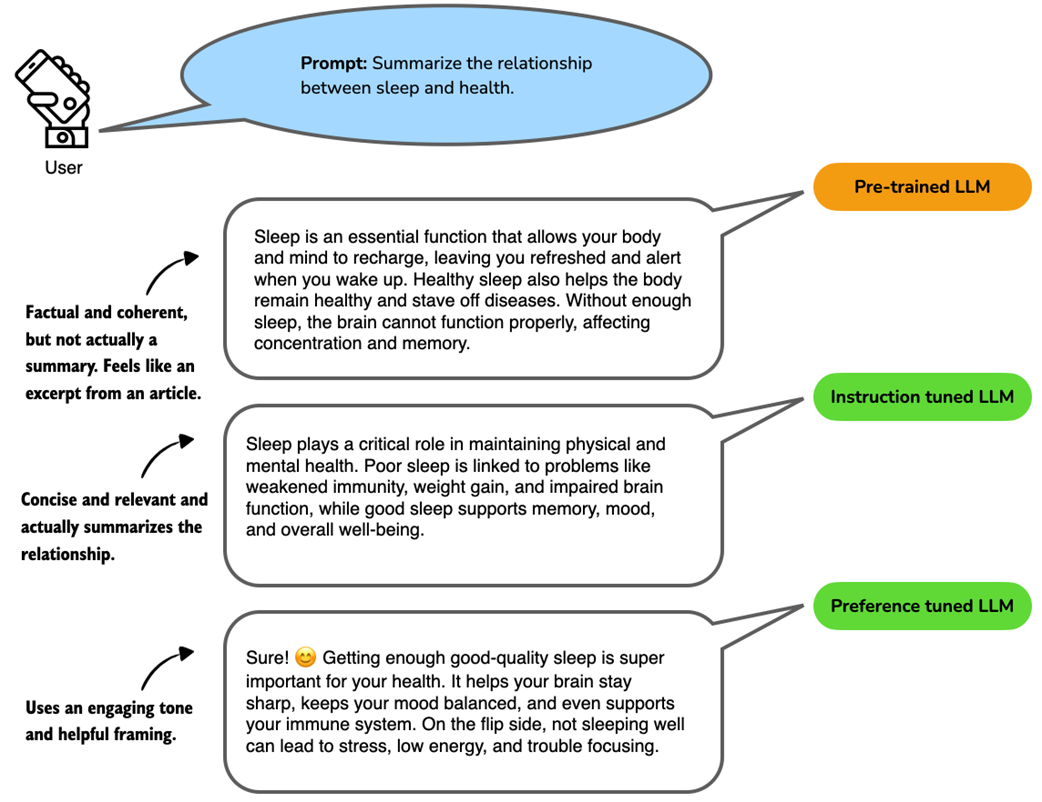

Example responses from a language model at different training stages. The prompt asks for a summary of the relationship between sleep and health. The pre-trained LLM produces a relevant but unfocused answer without directly following the instructions. The instruction-tuned LLM generates a concise and accurate summary aligned with the prompt. The preference-tuned LLM further improves the response by using a friendly tone and engaging language, which makes the answer more relatable and user-centered.

Three approaches commonly used to improve reasoning capabilities in LLMs. These methods (inference-compute scaling, reinforcement learning, and distillation) are typically applied after the conventional training stages (initial model training, pre-training, and post-training with instruction and preference tuning), but reasoning techniques can also be applied to the pre-trained base model.

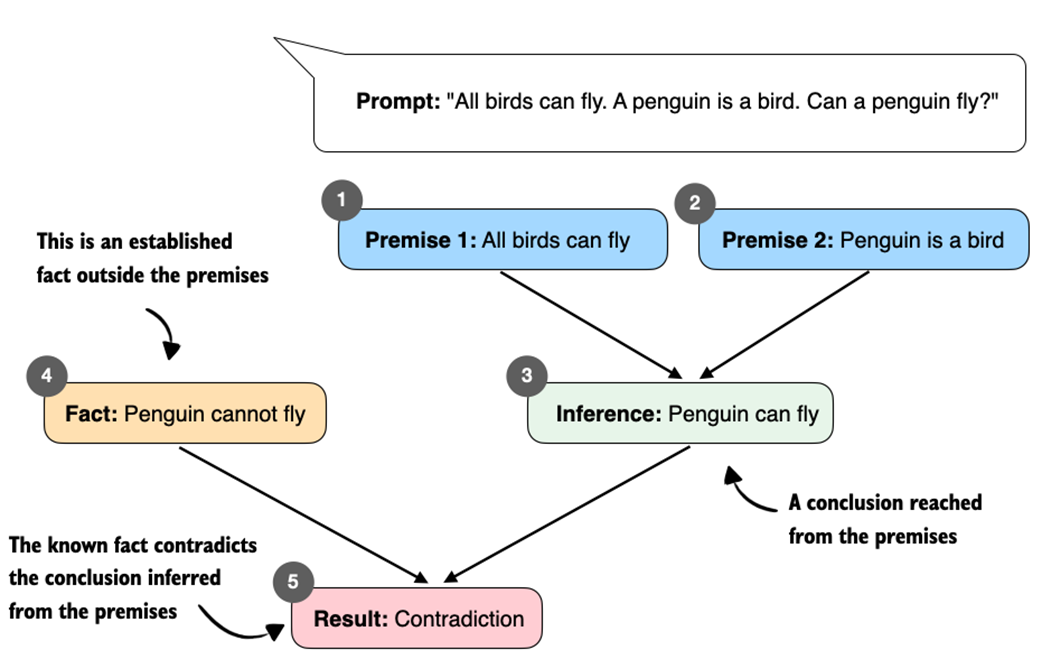

Illustration of how contradictory premises lead to a logical inconsistency. From "All birds can fly" and "A penguin is a bird," we infer "Penguin can fly." This conclusion conflicts with the established fact "Penguin cannot fly," which results in a contradiction.
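A rule-based system makes this contradiction explicit instead of talking its way around it. The following minimal sketch, not a production logic engine, hard-codes the single rule "all birds can fly", derives new facts by forward chaining, and flags any statement asserted both true and false. The fact representation and function names are illustrative assumptions.

```python
from typing import Set, Tuple

# A fact is (predicate, entity, truth_value), e.g. ("bird", "penguin", True).
Fact = Tuple[str, str, bool]


def forward_chain(facts: Set[Fact]) -> Set[Fact]:
    """Apply the rule 'all birds can fly' until no new facts are derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for predicate, entity, value in list(derived):
            if predicate == "bird" and value:
                new_fact = ("can_fly", entity, True)
                if new_fact not in derived:
                    derived.add(new_fact)
                    changed = True
    return derived


def find_contradictions(facts: Set[Fact]) -> Set[Tuple[str, str]]:
    """Return (predicate, entity) pairs that are asserted both True and False."""
    return {(p, e) for p, e, v in facts if v and (p, e, False) in facts}


premises = {
    ("bird", "penguin", True),      # "A penguin is a bird"
    ("can_fly", "penguin", False),  # "A penguin cannot fly"
}
print(find_contradictions(forward_chain(premises)))  # {('can_fly', 'penguin')}
```

Each derivation step here is an explicit rule application, which is exactly what an LLM's next-token prediction does not guarantee.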

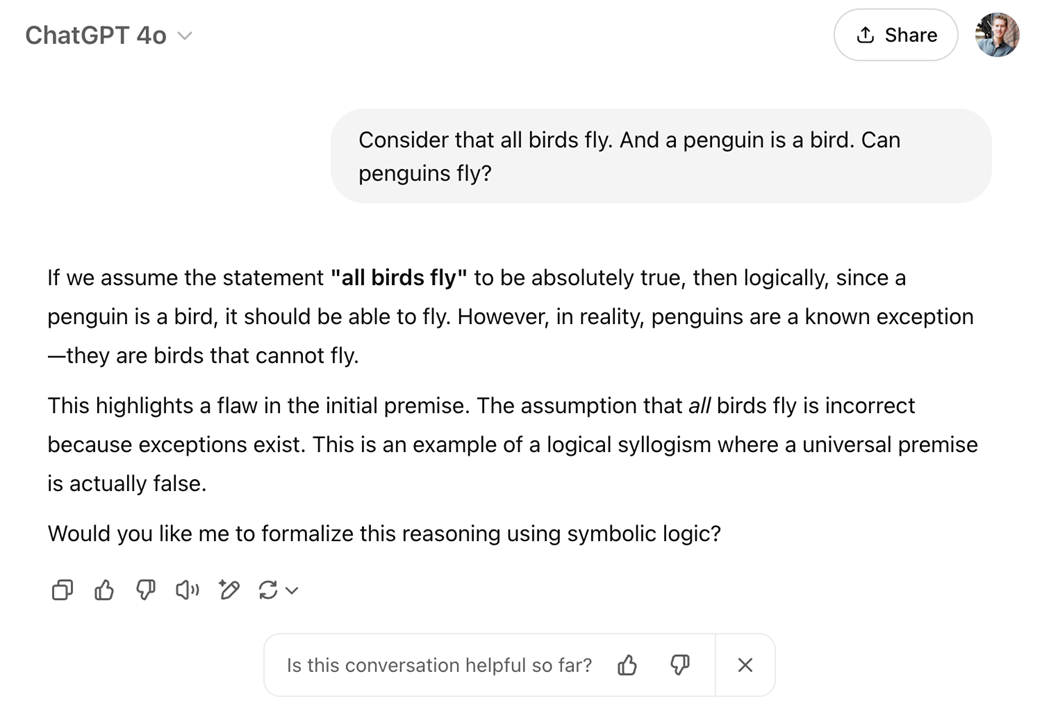

An illustrative example of how a language model (GPT-4o in ChatGPT) appears to "reason" about a contradictory premise.

Token-by-token generation in an LLM. At each step, the LLM takes the full sequence generated so far and predicts the next token, which may represent a word, subword, or punctuation mark depending on the tokenizer. The newly generated token is appended to the sequence and used as input for the next step. This iterative decoding process is used in both standard language models and reasoning-focused models.
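The decoding loop described in this caption can be sketched in a few lines. This is a simplified illustration, assuming greedy selection of one token per step; `toy_next_token` is a hypothetical lookup-table stand-in for an LLM, which in reality would condition on the whole sequence and output a probability distribution over its vocabulary.

```python
from typing import Callable, Dict, List


def generate(
    next_token_fn: Callable[[List[str]], str],
    prompt: List[str],
    max_new_tokens: int,
    eos_token: str = "<eos>",
) -> List[str]:
    """Autoregressive decoding: predict one token, append it, repeat."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        next_token = next_token_fn(tokens)  # the model sees the full sequence so far
        if next_token == eos_token:
            break  # stop when the model signals the end of the text
        tokens.append(next_token)  # the new token becomes part of the next input
    return tokens


# Hypothetical toy "model": picks a fixed continuation based on the last token.
BIGRAMS: Dict[str, str] = {
    "The": "cat", "cat": "sat", "sat": "on", "on": "the", "the": "mat", "mat": "<eos>",
}


def toy_next_token(tokens: List[str]) -> str:
    return BIGRAMS.get(tokens[-1], "<eos>")


print(" ".join(generate(toy_next_token, ["The"], max_new_tokens=10)))
# The cat sat on the mat
```

A reasoning model runs the same loop; it simply tends to emit many more tokens (the intermediate steps) before the final answer, which is one reason it costs more at inference time.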

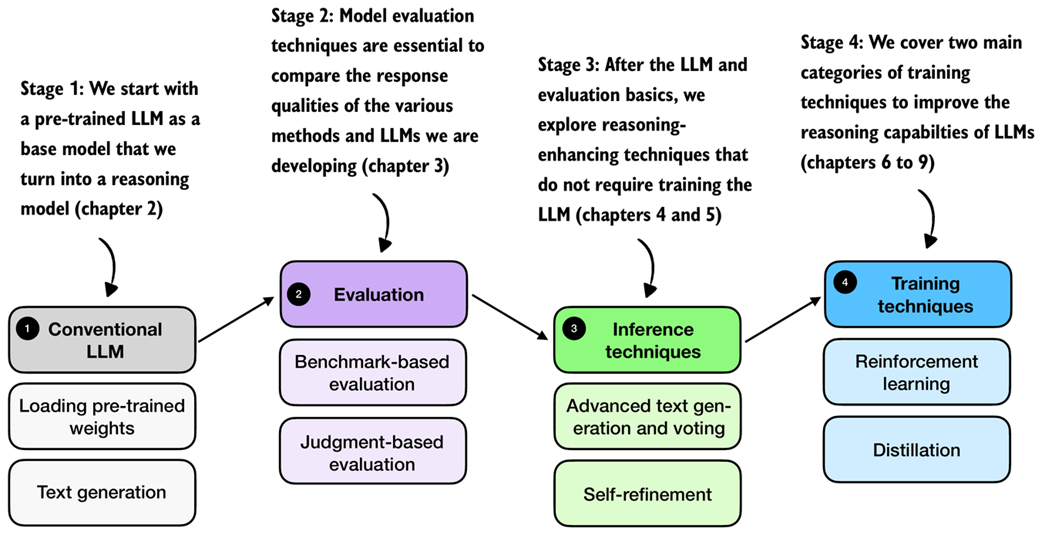

A mental model of the main reasoning model development stages covered in this book. We start with a conventional LLM as the base model (stage 1). In stage 2, we cover evaluation strategies to track the reasoning improvements introduced via the reasoning methods in stages 3 and 4.
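Tracking improvements requires a fixed set of questions and a scoring rule applied identically before and after each reasoning method. A minimal sketch of exact-match accuracy follows, assuming (hypothetically) that responses end with an `Answer: ...` line; `dummy_model` stands in for a real LLM call, and all names are illustrative.

```python
from typing import Callable, List, Optional, Tuple


def extract_final_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' marker (assumed output format)."""
    marker = "Answer:"
    idx = response.rfind(marker)
    if idx == -1:
        return None
    return response[idx + len(marker):].strip()


def evaluate(model_fn: Callable[[str], str], dataset: List[Tuple[str, str]]) -> float:
    """Exact-match accuracy of model_fn over (question, reference_answer) pairs."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    correct = 0
    for question, reference in dataset:
        prediction = extract_final_answer(model_fn(question))
        if prediction is not None and prediction == reference:
            correct += 1
    return correct / len(dataset)


# Hypothetical stand-in for a model that always answers "4".
def dummy_model(question: str) -> str:
    return "Let me think step by step... Answer: 4"


data = [("What is 2 + 2?", "4"), ("What is 3 + 3?", "6")]
print(evaluate(dummy_model, data))  # 0.5
```

Exact match is the simplest choice; practical evaluations often normalize answers first so that equivalent forms such as `4` and `4.0` are not scored as different.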

Summary

- Conventional LLM training occurs in several stages:

  - Pre-training, where the model learns language patterns from vast amounts of text.

  - Instruction fine-tuning, which improves the model's responses to user prompts.

  - Preference tuning, which aligns model outputs with human preferences.

- Reasoning methods are applied on top of a conventional LLM.

- Reasoning in LLMs refers to improving a model so that it explicitly generates intermediate steps (chain-of-thought) before producing a final answer, which often increases accuracy on multi-step tasks.

- Reasoning in LLMs differs from rule-based reasoning, and it likely also works differently from human reasoning; the current consensus is that reasoning in LLMs relies on statistical pattern matching.

- Pattern matching in LLMs relies purely on statistical associations learned from data, which enables fluent text generation but lacks explicit logical inference.

- Improving reasoning in LLMs can be achieved through:

  - Inference-time compute scaling, enhancing reasoning without retraining (e.g., chain-of-thought prompting).

  - Reinforcement learning, training models explicitly with reward signals.

  - Supervised fine-tuning and distillation, using examples from stronger reasoning models.

- Building reasoning models from scratch provides practical insights into LLM capabilities, limitations, and computational trade-offs.
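One common way to spend more inference-time compute without retraining is to sample several answers and keep the most frequent one (majority voting, often called self-consistency). The sketch below is an illustration under assumptions: `noisy_model` is a hypothetical stand-in for sampling an LLM at nonzero temperature, and the cost grows linearly with `num_samples`, which is the trade-off between accuracy and compute discussed in this chapter.

```python
import random
from collections import Counter
from typing import Callable, List


def majority_vote(sample_fn: Callable[[str], str], prompt: str, num_samples: int) -> str:
    """Sample several answers and return the most frequent one."""
    answers: List[str] = [sample_fn(prompt) for _ in range(num_samples)]
    # most_common(1) returns [(answer, count)]; ties go to the answer seen first.
    return Counter(answers).most_common(1)[0][0]


# Hypothetical noisy "model": correct 60% of the time, otherwise a random wrong answer.
rng = random.Random(0)


def noisy_model(prompt: str) -> str:
    return "42" if rng.random() < 0.6 else str(rng.randint(0, 41))


print(majority_vote(noisy_model, "What is 6 * 7?", num_samples=15))
```

Because the wrong answers are scattered while the correct one repeats, voting over more samples tends to recover the correct answer more reliably than a single sample does.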

Build a Reasoning Model (From Scratch) ebook for free