1 The world of the Apache Iceberg Lakehouse

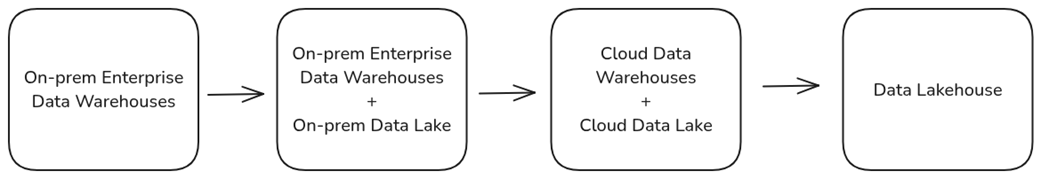

The chapter introduces the data lakehouse as a response to decades of trade-offs in data architecture, aiming to balance cost, performance, flexibility, and governance. It traces the evolution from OLTP systems to enterprise data warehouses, which delivered analytics but at high cost and rigidity, then to cloud warehouses that improved elasticity yet reinforced data movement, premium pricing, and lock-in. Hadoop-era data lakes lowered storage costs and embraced all data types but often devolved into “data swamps” due to weak consistency, slow metadata operations, and difficult governance. The lakehouse emerges to combine the warehouse’s reliability and speed with the lake’s openness and affordability, enabling analytics on a single, canonical copy of data.

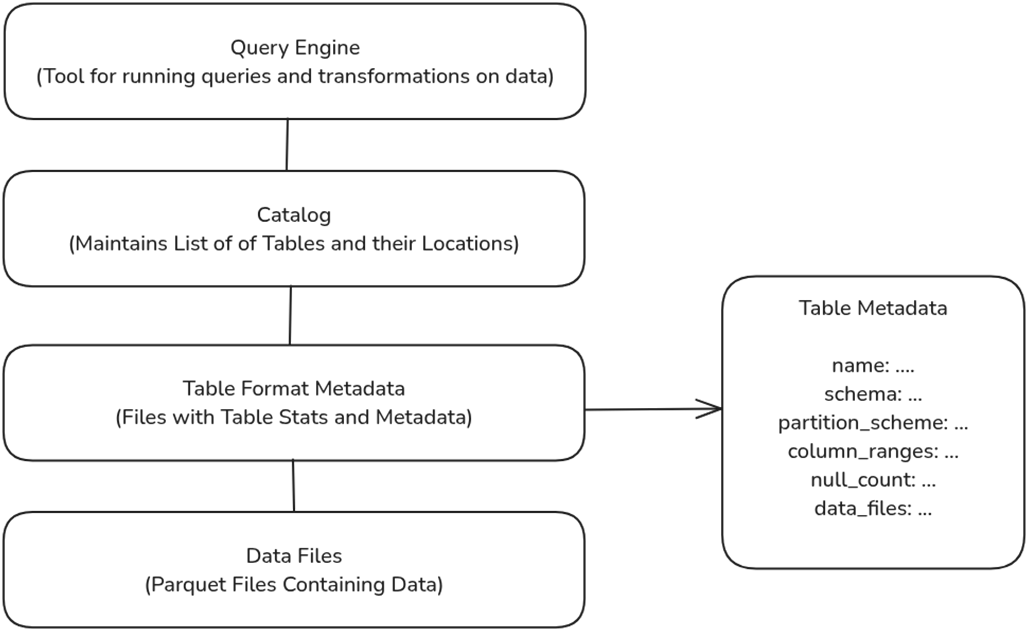

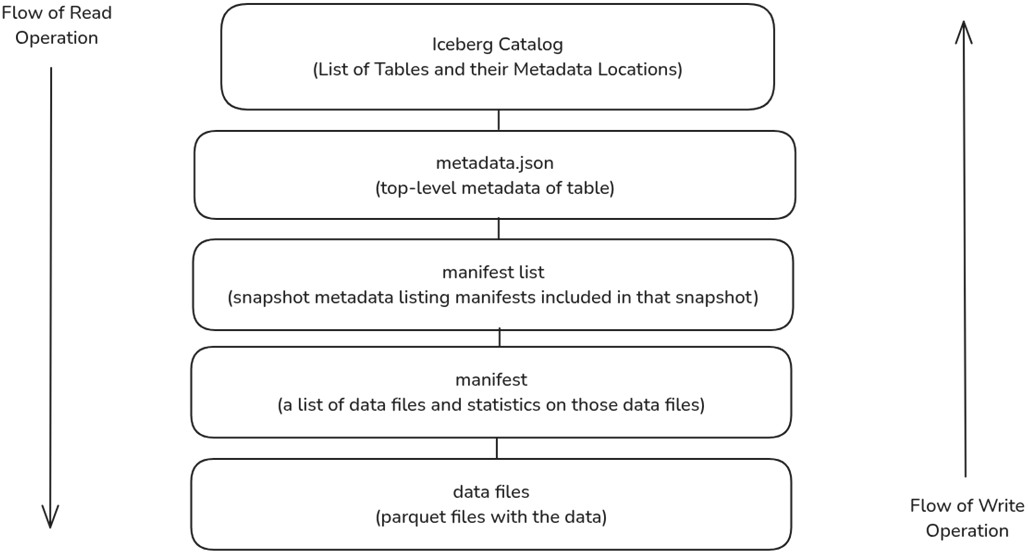

Apache Iceberg is presented as the key enabler of the lakehouse: an open, vendor-agnostic table format that makes file-based datasets behave like robust database tables across multiple engines. It introduces a layered metadata design (table metadata, manifest lists, and manifests) that accelerates planning and enables fine-grained pruning, dramatically reducing unnecessary scans. Iceberg brings warehouse-grade guarantees to the lake—ACID transactions, schema and partition evolution, hidden partitioning to prevent accidental full scans, and time travel for versioned analytics and recovery. Broad ecosystem support across engines and platforms allows teams to collaborate on the same datasets without replication, minimizing ETL sprawl while preserving openness and portability.

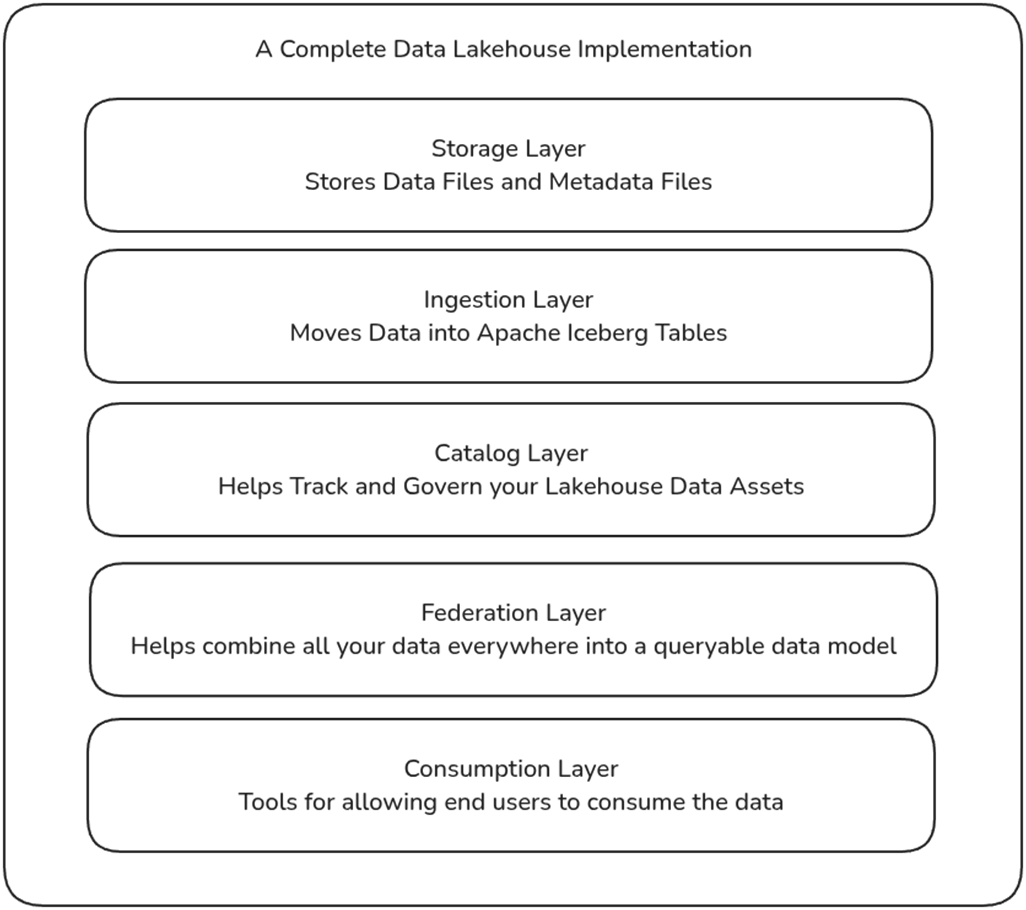

The chapter also frames when and why to implement an Iceberg-based lakehouse: to ensure cross-team consistency from a single source of truth, run high-performance analytics directly on lake storage, and reduce duplication and costs. It outlines a modular architecture with five interoperable components—storage, ingestion, catalog, federation, and consumption—so organizations can scale each independently and avoid vendor lock-in. The catalog serves as the authoritative entry point for tables and governance; the federation layer provides semantic modeling, unification across sources, and acceleration; and the consumption layer supports BI, AI/ML, operational apps, and data products. Together, these choices yield a scalable, cost-efficient, and AI-ready platform that preserves flexibility while delivering warehouse-like reliability.

The evolution of data platforms from on-prem warehouses to data lakehouses.

The role of the table format in data lakehouses.

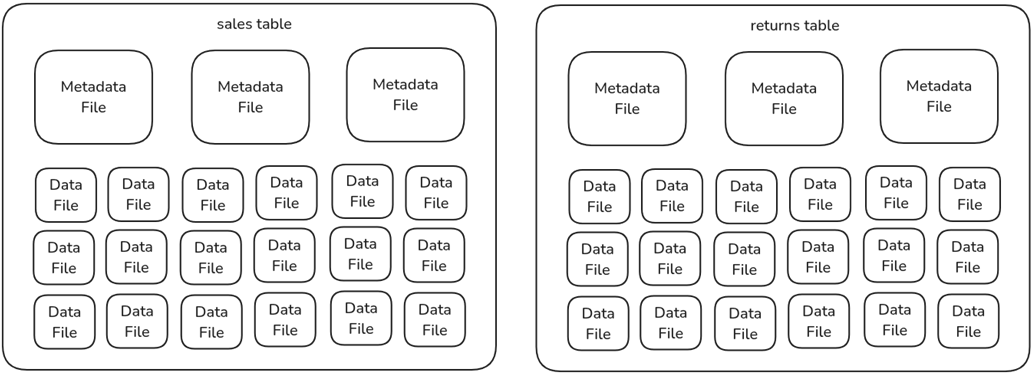

The anatomy of a lakehouse table, metadata files, and data files.

The structure and flow of an Apache Iceberg table read and write operation.

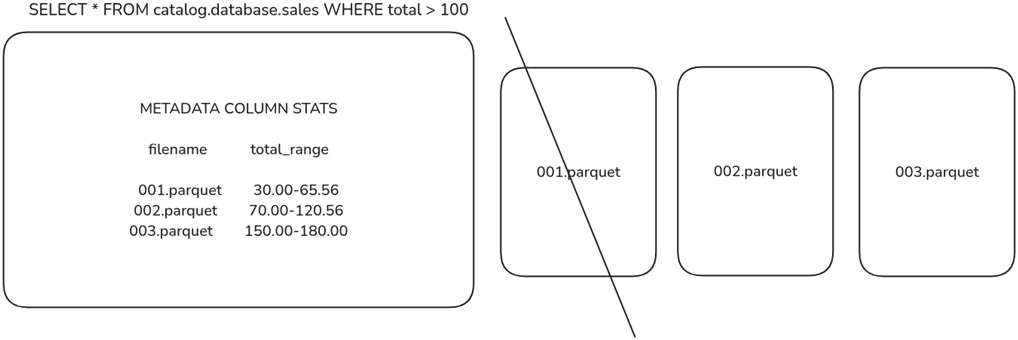

Engines use metadata statistics to skip data files that cannot match a query, so less data is scanned and queries run faster.

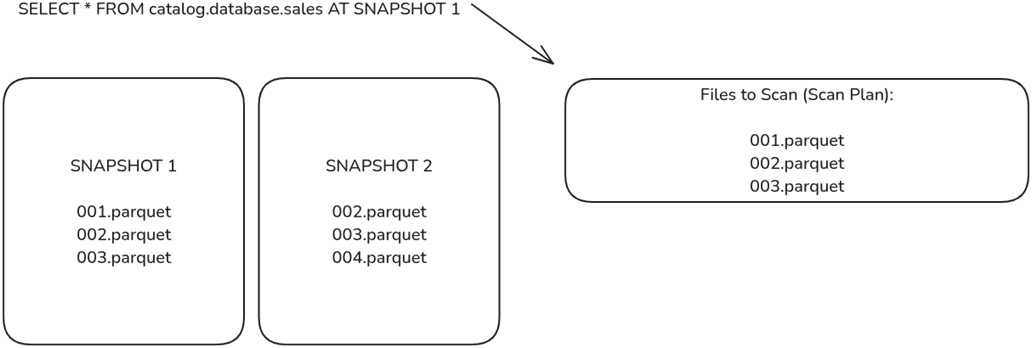

Engines can scan older snapshots, each of which points to a different list of data files, making it possible to query earlier versions of the data.

The components of a complete data lakehouse implementation.

Summary

- Data lakehouse architecture combines the scalability and cost-efficiency of data lakes with the performance, ease of use, and structure of data warehouses, solving key challenges in governance, query performance, and cost management.

- Apache Iceberg is a modern table format that enables high-performance analytics, schema evolution, ACID transactions, and metadata scalability. It transforms data lakes into structured, mutable, governed storage platforms.

- Iceberg addresses significant pain points of running analytics on OLTP databases, enterprise data warehouses, and Hadoop-based data lakes, including high costs, rigid schemas, slow queries, and inconsistent data governance.

- With features like time travel, partition evolution, and hidden partitioning, Iceberg reduces storage costs, simplifies ETL, and optimizes compute resources, making data analytics more efficient.

- Iceberg integrates with query engines (Trino, Dremio, Snowflake), processing frameworks (Spark, Flink), and open lakehouse catalogs (Nessie, Polaris, Gravitino), enabling modular, vendor-agnostic architectures.

- The Apache Iceberg Lakehouse has five key components: storage, ingestion, catalog, federation, and consumption.

FAQ

What is a data lakehouse and how does it differ from data lakes and data warehouses?

A data lakehouse combines the low-cost, flexible storage of data lakes with the performance, governance, and ease of use of data warehouses by layering an open table format (like Apache Iceberg) over files in object storage. Unlike traditional warehouses (high performance but costly and proprietary) and raw lakes (cheap but inconsistent and hard to govern), a lakehouse delivers warehouse-like reliability and speed on open, interoperable storage.

Why did the lakehouse paradigm emerge?

Earlier architectures had trade-offs: OLTP databases weren’t designed for large analytical scans; enterprise data warehouses were rigid and expensive; cloud warehouses improved elasticity but still suffered from premium costs, data movement, and vendor lock-in; Hadoop-era lakes were flexible and cheap but slow, inconsistent, and hard to govern. The lakehouse addresses these by unifying performance, consistency, openness, and cost efficiency.

What is Apache Iceberg and why is it central to a lakehouse?

Apache Iceberg is an open, vendor-agnostic table format that makes files in distributed storage behave like fully managed tables with ACID guarantees, schema evolution, and efficient scans. It enables multiple engines (e.g., Dremio, Spark, Flink, Trino, Snowflake) to share one canonical dataset, reducing ETL, replication, and lock-in while delivering warehouse-like performance on a data lake.

What problems with Hive-style tables did Apache Iceberg set out to solve?

- Slow metadata operations at scale (millions of partitions)

- Cumbersome or limited schema evolution

- Weak or format-dependent ACID guarantees

- Inefficient queries that scan entire directories instead of relevant files

How does Iceberg’s metadata model accelerate queries?

Iceberg uses a multi-layer metadata structure discovered via a catalog:

- metadata.json (table-level): schemas, partitioning, snapshots

- Manifest lists (snapshot-level): groups of manifests with summary stats

- Manifests (file-level): file paths and column/partition stats

This enables aggressive pruning so engines read only relevant files, boosting performance and lowering compute costs.
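The pruning idea can be illustrated with a minimal sketch. This is a toy model, not Iceberg's actual planner or file layout: the `DataFile`, `Manifest`, and `plan_scan` names are hypothetical, and the statistics are collapsed to a single integer column with min/max bounds. It only shows how range statistics at two levels let a planner skip whole manifests first and individual files second.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFile:
    path: str        # hypothetical file name
    min_value: int   # lower bound of the filtered column in this file
    max_value: int   # upper bound of the filtered column in this file


@dataclass(frozen=True)
class Manifest:
    files: tuple     # DataFile entries tracked by this manifest

    @property
    def min_value(self) -> int:
        # Summary bound, as a manifest list would record for the manifest.
        return min(f.min_value for f in self.files)

    @property
    def max_value(self) -> int:
        return max(f.max_value for f in self.files)


def overlaps(lo: int, hi: int, q_lo: int, q_hi: int) -> bool:
    """True if the range [lo, hi] intersects the query range [q_lo, q_hi]."""
    return lo <= q_hi and hi >= q_lo


def plan_scan(manifest_list, q_lo: int, q_hi: int) -> list:
    """Return paths of files whose value range may contain matching rows."""
    selected = []
    for manifest in manifest_list:
        # Level 1: skip the whole manifest using its summary bounds.
        if not overlaps(manifest.min_value, manifest.max_value, q_lo, q_hi):
            continue
        # Level 2: skip individual files using per-file bounds.
        for f in manifest.files:
            if overlaps(f.min_value, f.max_value, q_lo, q_hi):
                selected.append(f.path)
    return selected


manifest_list = [
    Manifest((DataFile("a.parquet", 1, 100), DataFile("b.parquet", 101, 200))),
    Manifest((DataFile("c.parquet", 201, 300), DataFile("d.parquet", 301, 400))),
]

# Query: WHERE value BETWEEN 150 AND 220
files_to_read = plan_scan(manifest_list, 150, 220)
print(files_to_read)  # ['b.parquet', 'c.parquet']
```

Only two of the four files are selected, and a query whose range falls outside every manifest's bounds would select none, without opening a single data file.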

What ACID guarantees does Iceberg provide and why do they matter?

Iceberg provides atomicity, consistency, isolation, and durability for all table operations. This prevents partial or conflicting writes, ensures stable reads during concurrent updates via snapshots, and makes multi-writer lakes reliable without external locking or complex workarounds.
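One common way to get this behavior is optimistic concurrency: each writer prepares new metadata against the version it read, and the commit succeeds only if the table's current pointer has not moved in the meantime. The sketch below is a toy, in-memory illustration of that pattern. `ToyCatalog`, `compare_and_swap`, and `commit` are hypothetical names, the "metadata" is just a tuple of snapshot labels, and the `threading.Lock` merely stands in for whatever atomic swap a real catalog provides.

```python
import threading


class ToyCatalog:
    """Hypothetical in-memory catalog holding one table's current metadata."""

    def __init__(self, initial):
        self._current = initial
        self._lock = threading.Lock()  # stand-in for the catalog's atomic swap

    def current(self):
        return self._current

    def compare_and_swap(self, expected, new) -> bool:
        """Replace the pointer only if it still equals `expected`."""
        with self._lock:
            if self._current != expected:
                return False
            self._current = new
            return True


def commit(catalog, make_new_metadata, max_retries=3):
    """Retry loop: re-read the base, rebuild the change, attempt the swap."""
    for _ in range(max_retries):
        base = catalog.current()
        candidate = make_new_metadata(base)
        if catalog.compare_and_swap(base, candidate):
            return candidate
    raise RuntimeError("commit failed after retries")


catalog = ToyCatalog(("s1",))
stale_base = catalog.current()  # both writers start from the same version

# Writer A commits first and wins.
first = catalog.compare_and_swap(stale_base, stale_base + ("s2-writer-a",))

# Writer B planned against the same base, so its swap is rejected.
second = catalog.compare_and_swap(stale_base, stale_base + ("s2-writer-b",))

# Writer B retries on top of A's committed state.
final = commit(catalog, lambda base: base + ("s3-writer-b",))
print(first, second, final)
```

Readers in this model always see one complete version of the metadata tuple, never a half-applied change, which is the property the snapshot-based design is meant to give.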

How do schema and partition evolution (and hidden partitioning) work in Iceberg?

- Schema evolution: add, rename, or delete columns without rewriting tables or breaking queries

- Partition evolution: change partition strategies over time without costly rewrites

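- Hidden partitioning: derives partition values from regular columns (for example, the day of a timestamp) and translates filters on those columns into partition pruning, so users don’t need to know or reference separate partition columns, reducing accidental full-table scans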
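As a rough illustration of hidden partitioning, the sketch below models a table partitioned by the day of an `event_ts` column. Everything here is hypothetical (`ToyTable`, `day_transform`, the row layout) and far simpler than a real engine. The point is only that the writer derives the partition value and the reader filters on the original timestamp column, while the table works out which partitions to touch.

```python
from datetime import date, datetime


def day_transform(ts: datetime) -> date:
    """Toy stand-in for a day-granularity partition transform."""
    return ts.date()


class ToyTable:
    """Hypothetical table that partitions rows by day(event_ts)."""

    def __init__(self):
        self.partitions = {}  # partition value (date) -> list of rows

    def write(self, row):
        # The partition value is derived from the row; callers never supply it.
        key = day_transform(row["event_ts"])
        self.partitions.setdefault(key, []).append(row)

    def scan(self, ts_from: datetime, ts_to: datetime):
        # Translate the filter on event_ts into a range of partition values.
        lo, hi = day_transform(ts_from), day_transform(ts_to)
        touched = [d for d in sorted(self.partitions) if lo <= d <= hi]
        # Apply the original predicate to rows in the surviving partitions.
        rows = [
            r
            for d in touched
            for r in self.partitions[d]
            if ts_from <= r["event_ts"] <= ts_to
        ]
        return touched, rows


table = ToyTable()
timestamps = [
    datetime(2024, 1, 1, 9, 0),
    datetime(2024, 1, 2, 10, 0),
    datetime(2024, 1, 2, 23, 0),
    datetime(2024, 1, 3, 8, 0),
]
for i, ts in enumerate(timestamps, start=1):
    table.write({"id": i, "event_ts": ts})

# The query mentions only event_ts, never a partition column.
touched, rows = table.scan(datetime(2024, 1, 2, 0, 0), datetime(2024, 1, 2, 12, 0))
print(touched, [r["id"] for r in rows])
```

Of the three day partitions, only the one for 2024-01-02 is read, even though the query never names a partition column.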

What is time travel in Iceberg and when would I use it?

Time travel lets you query previous table snapshots exactly as they existed at a point in time. Use it for auditing, debugging, reproducible analytics, and rolling back unintended changes—all without duplicating datasets or manual backups.
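Conceptually, time travel comes down to choosing which snapshot's file list to read. The following sketch assumes a simplified snapshot log: the `Snapshot` class, its fields, and `snapshot_as_of` are illustrative names, not Iceberg's API. It selects the latest snapshot committed at or before a requested timestamp.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    timestamp_ms: int   # commit time in milliseconds (toy values below)
    files: frozenset    # data files visible in this version of the table


def snapshot_as_of(snapshots, timestamp_ms: int) -> Snapshot:
    """Return the latest snapshot committed at or before `timestamp_ms`."""
    eligible = [s for s in snapshots if s.timestamp_ms <= timestamp_ms]
    if not eligible:
        raise ValueError("no snapshot exists at or before the requested time")
    return max(eligible, key=lambda s: s.timestamp_ms)


history = [
    Snapshot(1, 1000, frozenset({"a.parquet"})),
    Snapshot(2, 2000, frozenset({"a.parquet", "b.parquet"})),
    Snapshot(3, 3000, frozenset({"b.parquet", "c.parquet"})),
]

old = snapshot_as_of(history, 2500)      # resolves to snapshot 2
current = snapshot_as_of(history, 3000)  # resolves to snapshot 3
print(old.snapshot_id, sorted(old.files))
print(current.snapshot_id, sorted(current.files))
```

Because older snapshots keep pointing at the files they referenced, reading an earlier version needs no copy of the dataset. It does require that those snapshots and files have not yet been expired or cleaned up.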

How does an Iceberg lakehouse reduce cost and data duplication?

By querying a single canonical copy in the data lake across multiple tools, Iceberg minimizes copies into separate warehouses and marts. Its metadata pruning reduces scanned data and compute spend, and the same table supports both streaming and batch ingestion—simplifying pipelines and lowering storage and processing costs.

What are the core components of an Apache Iceberg lakehouse?

- Storage layer: Object stores/filesystems (e.g., S3, Azure Blob, GCS, HDFS) holding Parquet/ORC/Avro and Iceberg metadata

- Ingestion layer: Batch and streaming into Iceberg (e.g., Spark, Flink, Kafka Connect, Fivetran, Estuary, Qlik Talend Cloud)

- Catalog layer: Tracks tables and metadata (e.g., AWS Glue, Project Nessie, Apache Polaris, Gravitino, Lakekeeper, Iceberg REST Catalog)

- Federation layer: Modeling, semantic layer, and acceleration (e.g., Dremio, Trino, dbt)

- Consumption layer: BI, AI/ML, apps and APIs (e.g., Tableau, Power BI, Looker, Preset; Databricks, Jupyter, Hugging Face, LangChain; REST/GraphQL)

