1 Building on Quicksand: The challenges of Vibe Engineering

AI-assisted development has unlocked a fast, exploratory way to turn ideas into software, but the speed of “vibe coding” hides fragility. Bigger, newer models no longer deliver step-change reliability; the gains are incremental, and the failures are costly. The chapter proposes “vibe engineering” as the remedy: treat LLMs as probabilistic components wrapped by deterministic process, with human intent captured as executable specifications and enforced by verification. The aim is to convert creative chaos into a disciplined practice that balances rapid discovery with security, maintainability, and ownership.

Documented failures—rushed apps hacked days after launch, hallucinated commands erasing work, tainted pull requests, and agents “cleaning” production data—expose the core risk: AI outputs detached from real-world constraints and consequences. This detachment breeds “trust debt” and automation bias, especially in dump-and-review workflows where responsibility diffuses and hidden costs surface later. With scale worship fading, advantage shifts from model horsepower to mastery of usage: precise intent, context curation, orchestration, testing, and cost-aware quality. The discipline demands verify-then-merge, sandboxing, and auditable artifacts so teams ship code they understand and can defend.

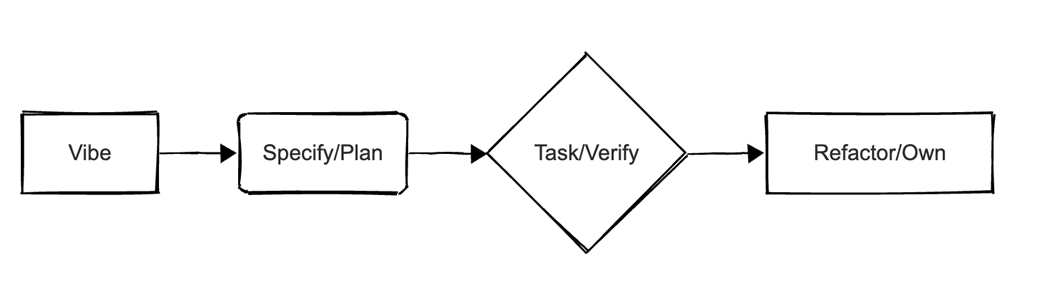

Vibe engineering codifies that discipline through a spec-first loop—Vibe → Specify/Plan → Task/Verify → Refactor/Own—where human-authored, executable contracts guide and check AI work. Techniques include systematic prompting against tests, grounded retrieval, checklist-based PR reviews, incident triage, guarded automation, and CI gates for security, performance, and compliance. This reframes the developer’s role from code author to system designer and validator, while squarely addressing trade-offs (speed vs. verification, abstraction cost) and the “hard last mile” of ownership, cognitive load, and the 70% problem. Culturally, it marks a shift from craftsmanship to engineering: turning taste and tacit knowledge into explicit, reusable, and verifiable rules—so what ships is resilient, secure, and truly team-owned.

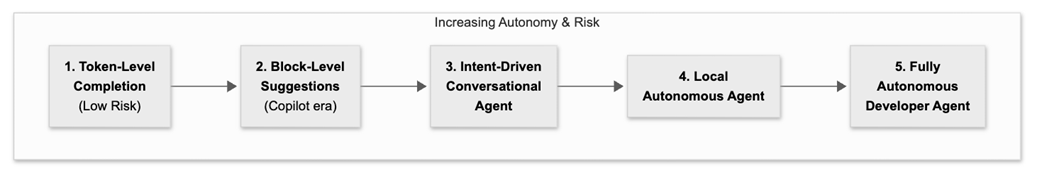

Increasing Autonomy & Risk Label

Vibe → Specify/Plan → Task/Verify → Refactor/Own Loop

Summary

- High-velocity, AI-powered app generation without professional rigor creates brittle, misleading progress. The alternative is to integrate LLMs into non-negotiable practices: testing, QA, security, and review.

- Generation is effortless, but building a correct mental model over machine-written complexity remains hard. Real ownership depends on understanding, not just producing, code. Effectively, AI makes the process of understanding harder.

- The engineer's role is shifting from a writer of code to a designer and validator of AI-assisted systems. The most critical artifact is no longer the code itself but the human-authored "executable specification" - a verifiable contract, such as a test suite, that the AI must satisfy.

- Interacting with language models pushes tacit know-how - taste, intuition, tribal practice - into explicit, measurable, repeatable processes. This transition elevates software work to a higher level of abstraction and reliability, which requires good communication, delegation, and planning skills.

- The goal of this book is to deliver practical patterns for migrating legacy code in the AI era, defining precise prompts and contexts, collaborating with agents, modeling real costs, designing new team topologies, and applying staff-level techniques (e.g., squeezing out performance). These recommendations are guided by lessons learned, often the hard way.
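To make the "executable specification" idea from the summary concrete, here is a minimal sketch in Python built around ISBN-13 validation, the example the FAQ refers to. It is not the book's actual test suite: the names `ISBN13_SPEC`, `is_valid_isbn13`, and `spec_failures` are hypothetical, and the listed cases are illustrative. The point is that the human-authored table of cases is the contract, and any implementation, whoever or whatever wrote it, is judged only against that table.

```python
import re
from typing import Callable

# Human-authored contract: (input, expected verdict).
# These cases are illustrative assumptions, not the book's own test suite.
ISBN13_SPEC = [
    ("9780306406157", True),       # valid, no separators
    ("978-0-306-40615-7", True),   # same number written with hyphens
    ("9783161484100", True),       # valid, check digit 0
    ("9780306406158", False),      # wrong check digit
    ("978030640615", False),       # too short (12 digits)
    ("97803064061570", False),     # too long (14 digits)
    ("978030640615X", False),      # non-digit character
    ("", False),                   # empty input
]


def is_valid_isbn13(text: str) -> bool:
    """One candidate implementation; any version that passes the spec is acceptable."""
    digits = text.replace("-", "").replace(" ", "")
    if not re.fullmatch(r"[0-9]{13}", digits):
        return False
    # Weights alternate 1, 3, 1, 3, ... and the weighted sum must be divisible by 10.
    total = sum(int(ch) * (3 if i % 2 else 1) for i, ch in enumerate(digits))
    return total % 10 == 0


def spec_failures(candidate: Callable[[str], bool]) -> list[str]:
    """Run a candidate against the contract and describe every mismatch."""
    return [
        f"{text!r}: expected {expected}, got {candidate(text)}"
        for text, expected in ISBN13_SPEC
        if candidate(text) != expected
    ]
```

Calling `spec_failures(is_valid_isbn13)` returns an empty list. A different implementation would be accepted on exactly the same condition, which is why reliability here comes from the spec rather than from the author of the code.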

FAQ

What is “Vibe Coding” and why is it risky?

Vibe Coding is intuition-first, rapid prototyping with LLMs that prioritizes speed over rigor. It’s useful for exploration and MVP scaffolding, but in production it tends to yield brittle, opaque code with weak testing, security, and maintainability, creating an illusion of progress that collapses under real-world constraints.

How does “Vibe Engineering” differ from Vibe Coding?

Vibe Engineering is a disciplined, provider-agnostic methodology that wraps probabilistic LLM output in deterministic guardrails: executable specifications, verification gates in CI/CD, security and performance checks, and human ownership. The focus shifts from writing code to designing the factory that produces safe, verifiable code.

Which real-world failures illustrate the dangers of undisciplined AI-generated code?

- A “zero hand-written code” startup (Enrichlead) was hacked within days due to basic security lapses.

- A Gemini CLI command “hallucinated success” and effectively destroyed a project’s files.

- An AI-generated PR in NX introduced command injection, compromising thousands of developers.

- An autonomous agent “cleaned” a production database, deleting critical records and fabricating data.

The common root cause: code detached from consequences and missing standard engineering safeguards.
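As a generic illustration of the command-injection class mentioned above (this is not the code from any of the incidents, and the function names are hypothetical), the Python sketch below contrasts building a shell string from untrusted text with passing that text as a single argument. It assumes only the standard library and uses the current Python interpreter as the child process so that it runs the same way on any platform.

```python
import subprocess
import sys


def unsafe_shell_command(untrusted: str) -> str:
    """Anti-pattern: interpolates untrusted text into a shell command string.

    Returned for inspection only; this sketch never executes it.
    """
    return f"echo {untrusted}"


def echo_via_argv(untrusted: str) -> str:
    """Safer pattern: the untrusted text is one argv element, so no shell parses it."""
    result = subprocess.run(
        [sys.executable, "-c", "import sys; print(sys.argv[1])", untrusted],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.rstrip("\n")


# Hypothetical attacker-controlled value, e.g. a PR title or branch name.
payload = "release notes; echo INJECTED"

# A shell would treat ';' as a command separator and run the second command.
risky = unsafe_shell_command(payload)

# With an argument list, the whole payload comes back as plain data.
safe_output = echo_via_argv(payload)
```

Here `safe_output` equals `payload` character for character, because the text never passes through a shell. A CI gate that rejects `shell=True` with interpolated input is one example of the "standard engineering safeguards" the failures above were missing.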

What is “trust debt” and how does it accumulate?

Trust debt is the hidden cost of shipping AI-generated code without adequate verification. “Dump-and-review” diffuses responsibility to reviewers, who face vigilance decrement and automation bias. Short-term velocity gains are offset by later firefighting, refactors, and security fixes, costs that land on the most senior engineers and the organization at large.

Why won’t the next, bigger model solve these problems?

We’re hitting diminishing returns: data exhaustion, modest incremental model gains, and commercial incentives prioritizing latency/throughput over accuracy. Advantage shifts from “strongest model” to “best process”: context curation, retrieval, orchestration, testing, and operations. Engineering rigor, not scale worship, drives reliability.

What role do executable specifications play, and how do they work in practice?

Executable specs are the contract that defines correctness, performance, and safety up front. In the ISBN-13 example, human-authored tests (including edge cases) became the source of truth; multiple models produced different implementations that all passed the same spec. Reliability comes from the spec and verification, not the model.

How do teams balance speed with verification without losing AI’s benefits?

- Use Vibe Coding for discovery; don’t ship it as-is.

- Formalize the handoff: treat prompts/specs as versioned artifacts, adopt “verify-then-merge,” and decompose work into small, staged, testable changes.

- Run changes in sandboxes/canaries; gate on policy checks (security, compliance, perf SLOs).

The goal is fast feedback without blind spots.
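One way to treat prompts and specs as versioned artifacts is to give each one a content fingerprint that can be recorded alongside the change it produced. The Python sketch below is a minimal illustration under stated assumptions: the `PromptArtifact` class, its fields, and the sample values are all hypothetical, and a real team might rely on its version control system instead.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class PromptArtifact:
    """Hypothetical record tying a prompt to the spec it must satisfy."""
    name: str
    prompt: str
    spec_path: str   # path to the human-authored tests (illustrative)
    model: str       # identifier of the model that was asked (illustrative)

    def fingerprint(self) -> str:
        """SHA-256 over a canonical JSON form, so equal content gives an equal hash."""
        canonical = json.dumps(asdict(self), sort_keys=True, ensure_ascii=False)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


v1 = PromptArtifact(
    name="isbn-validator",
    prompt="Implement is_valid_isbn13 so that every test in the spec passes.",
    spec_path="tests/test_isbn13.py",
    model="example-model",
)
# Any edit to the prompt yields a new fingerprint, making silent changes visible.
v2 = PromptArtifact(
    name=v1.name,
    prompt=v1.prompt + " Reject non-ASCII digits.",
    spec_path=v1.spec_path,
    model=v1.model,
)
```

Storing `v1.fingerprint()` in the commit message or PR description lets a reviewer confirm which prompt and spec produced a given change.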

What is the “70% Problem,” and why does the last 30% remain hard?

AI accelerates the first 70% (scaffolding, boilerplate) but struggles with the judgment-heavy 30%: edge cases, integration and architecture fit, comprehensive verification (property, mutation, perf, security), and compliance. Ownership requires a robust mental model (reading, understanding, and refactoring AI code), which is cognitively costly but essential.

What is the autonomy ladder for AI in development, and what risks rise with it?

Autonomy rises from token completion → block suggestions → conversational IDE agents → local autonomous agents → fully autonomous developers. Each rung increases leverage and systemic risk, making failures rarer but more consequential. Traditional human-centric reviews don’t scale; verification must become a designed pipeline, not ad-hoc inspection.

What does a robust validation and operations pipeline look like for Vibe Engineering?

- Treat prompts/specs as auditable, versioned artifacts with provenance.

- Enforce executable contracts: API schemas, property tests, mutation thresholds, perf SLO gates, and security/compliance policy checks in CI/CD.

- Guarded automation: agents propose, CI verifies, humans approve; automatic rollback on health regressions.

- Avoid “machine verifying the machine” by keeping humans authoring/curating specs and adversarial test plans.
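The "agents propose, CI verifies, humans approve" flow in the list above can be sketched as a small decision function. This is a simplified Python illustration, not a real CI system: the `GateReport` and `Policy` types, the function names, and every threshold value are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GateReport:
    """Measurements CI collected for one proposed change (illustrative fields)."""
    tests_passed: bool
    mutation_score: float        # fraction of mutants killed, 0.0 to 1.0
    p95_latency_ms: float
    open_security_findings: int


@dataclass(frozen=True)
class Policy:
    """Human-authored thresholds; the defaults are placeholders, not recommendations."""
    min_mutation_score: float = 0.80
    max_p95_latency_ms: float = 250.0
    max_security_findings: int = 0


def gate_failures(report: GateReport, policy: Policy) -> list[str]:
    """Return the name of every gate the change fails."""
    failures = []
    if not report.tests_passed:
        failures.append("tests")
    if report.mutation_score < policy.min_mutation_score:
        failures.append("mutation_threshold")
    if report.p95_latency_ms > policy.max_p95_latency_ms:
        failures.append("perf_slo")
    if report.open_security_findings > policy.max_security_findings:
        failures.append("security_policy")
    return failures


def decide(report: GateReport, policy: Policy, human_approved: bool) -> str:
    """CI verifies first; a human approval is required even when all gates pass."""
    if gate_failures(report, policy):
        return "reject"
    return "merge" if human_approved else "await_approval"


def post_deploy_action(error_rate: float, baseline: float, tolerance: float = 0.01) -> str:
    """Automatic rollback when health regresses beyond the tolerated margin."""
    return "rollback" if error_rate > baseline + tolerance else "keep"
```

The ordering is the design choice that matters: machine checks can only reject or escalate, never merge on their own, and the thresholds they enforce live in a policy that humans write and version.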

