1 Why tailoring LLM architectures matters

Large language models deliver broad, general-purpose competence, but that breadth makes them slow, costly, and hard to differentiate for specialized tasks. This chapter argues for tailoring: reshaping models into small, efficient specialists that meet concrete business needs. Rather than relying only on prompts, RAG, or conventional fine-tuning, it advocates model rearchitecting—surgical, architecture-level interventions that remove or reconfigure what a task doesn’t need and strengthen what it does—so organizations can achieve faster inference, lower costs, and domain-specific quality.

It details why generic LLMs struggle in production: escalating and unpredictable costs (token usage, tool-calling agents, idle but expensive infrastructure), vendor lock-in when fine-tuning closed APIs, and a “generic trap” where everyone gets similar answers. Prompt engineering and RAG help but don’t truly reshape reasoning or create differentiation, while large open models often chase benchmarks that don’t reflect real tasks. The opacity of black-box APIs and even many open models further complicates regulated use. The chapter shows how analyzing neuron activations and applying targeted pruning and knowledge distillation can restore control and efficiency, citing evidence that careful pruning can substantially shrink models with minimal performance loss.
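To make activation-guided pruning concrete, here is a minimal sketch in plain Python. It assumes a toy feed-forward block with a ReLU activation, far simpler than a real transformer MLP. The function names (`neuron_importance`, `prune_neurons`), the mean-absolute-activation score, and the weights and samples are all illustrative assumptions. None of this is the chapter's method or the optiPfair API.

```python
# Toy activation-guided width pruning (illustrative assumptions throughout).
# Block: y = W_down @ relu(W_up @ x).
# Hidden neuron j corresponds to row j of W_up and column j of W_down.

def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def relu(vector):
    """Element-wise ReLU."""
    return [max(0.0, v) for v in vector]

def neuron_importance(w_up, samples):
    """Mean absolute hidden activation per neuron over a sample set."""
    totals = [0.0] * len(w_up)
    for x in samples:
        hidden = relu(matvec(w_up, x))
        totals = [t + abs(h) for t, h in zip(totals, hidden)]
    return [t / len(samples) for t in totals]

def prune_neurons(w_up, w_down, scores, keep):
    """Keep the `keep` highest-scoring hidden neurons, preserving their order."""
    ranked = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    kept = sorted(ranked[:keep])
    new_up = [w_up[j] for j in kept]
    new_down = [[row[j] for j in kept] for row in w_down]
    return new_up, new_down, kept

# Hypothetical weights: 2 inputs, 4 hidden neurons, 1 output.
W_UP = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0], [0.01, 0.0]]
W_DOWN = [[0.5, 0.5, 2.0, 1.0]]
# Stand-in for a domain dataset: every input is positive.
DOMAIN_SAMPLES = [[1.0, 2.0], [2.0, 1.0], [3.0, 0.5]]

scores = neuron_importance(W_UP, DOMAIN_SAMPLES)
pruned_up, pruned_down, kept = prune_neurons(W_UP, W_DOWN, scores, keep=2)

x = [1.0, 2.0]
original_output = matvec(W_DOWN, relu(matvec(W_UP, x)))[0]
pruned_output = matvec(pruned_down, relu(matvec(pruned_up, x)))[0]
```

In this toy setup, neuron 2 has the largest weights but never fires on the domain samples, so an activation-based score discards it while a purely magnitude-based score would keep it. That difference is the reason for letting a domain dataset guide the pruning decision.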

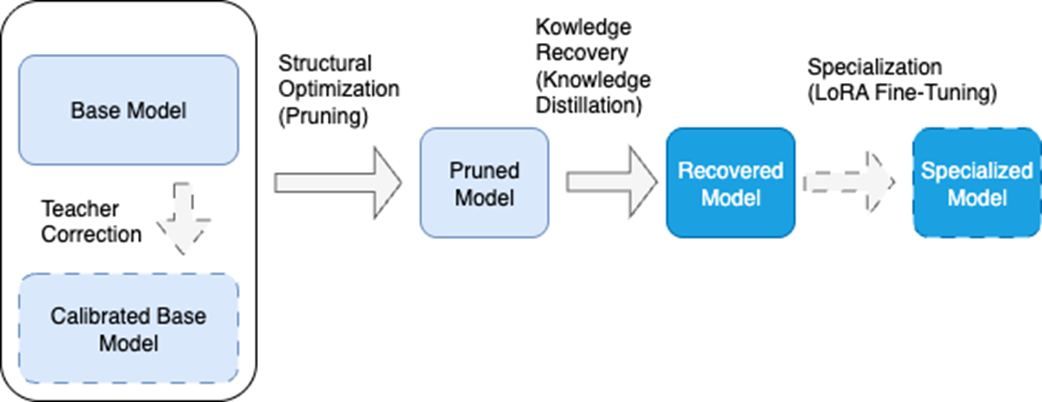

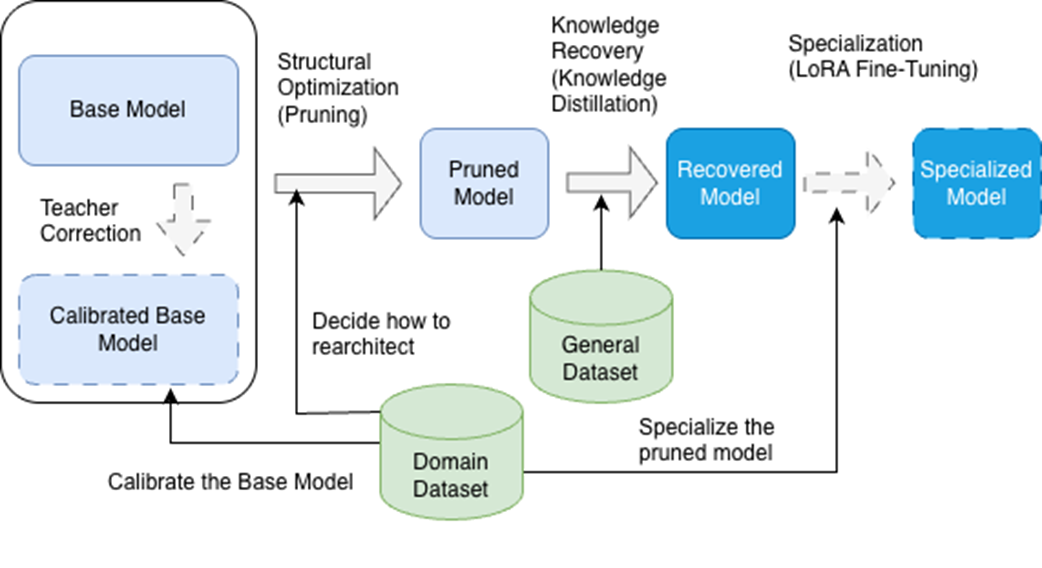

The proposed solution is a practical pipeline. First, perform structural optimization via pruning, ideally guided by a domain dataset to identify low-value components. Second, recover capabilities through knowledge distillation, transferring not only outputs but also reasoning patterns from the original teacher model to the pruned student. Third, optionally specialize with parameter-efficient fine-tuning (e.g., LoRA). An optional light “teacher correction” step can come before pruning to align the base model with the domain dataset. This pipeline supports both deep specialization and pure efficiency gains, from edge deployment to targeted capability boosts. The chapter also outlines the tools (PyTorch, the Hugging Face ecosystem, standard evals, and the optiPfair library) and a learning roadmap that moves from fundamentals to activation-level diagnostics and fairness pruning, equipping practitioners to build smaller, faster, more accurate models tailored to their real-world tasks.
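The knowledge recovery step can be illustrated with the classic logit-based distillation loss. The sketch below is written in plain Python and makes several assumptions: a temperature-softened KL term blended with a cross-entropy term on the true label, with hypothetical defaults for `temperature` and `alpha`. It covers only output-level transfer. Matching intermediate representations, one common way to pass on more than final answers, is not shown, and the chapter's exact formulation may differ.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)

def distillation_loss(student_logits, teacher_logits, label,
                      temperature=2.0, alpha=0.5):
    """Blend a soft-target term (teacher) with a hard-target term (label)."""
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    # The T^2 factor keeps the soft term's gradient scale comparable across temperatures.
    soft_term = (temperature ** 2) * kl_divergence(soft_teacher, soft_student)
    # Standard cross-entropy against the ground-truth label, at temperature 1.
    hard_term = -math.log(softmax(student_logits)[label])
    return alpha * soft_term + (1.0 - alpha) * hard_term

# Hypothetical logits over a 3-token vocabulary.
teacher = [2.0, 1.0, 0.0]
aligned_student = [2.0, 1.0, 0.0]
drifted_student = [0.0, 1.0, 2.0]

aligned_loss = distillation_loss(aligned_student, teacher, label=0)
drifted_loss = distillation_loss(drifted_student, teacher, label=0)
```

A student that reproduces the teacher's distribution pays only the cross-entropy part of the loss, while a student that has drifted is penalized by both terms. The soft term is what pulls a pruned model back toward the teacher's behavior.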

The model tailoring pipeline consists of core phases (shown with solid arrows) and optional phases (shown with dashed arrows). In the first phase, we adapt the model's structure to the project's objectives through pruning. Next, we use knowledge distillation to recover the capabilities the model may have lost. Finally, we can optionally specialize the model through fine-tuning. An optional initial phase calibrates the base model using the dataset for which we're going to specialize the final model.

Dataset integration in the tailoring pipeline. The domain-specific dataset guides the calibration of the base model, informs structural optimization decisions, and drives the final specialization through LoRA fine-tuning. A general dataset supports Knowledge Recovery, ensuring the pruned model retains broad capabilities before domain-specific specialization. This dual approach optimizes each phase for the project's specific objectives.

Summary

- The use of oversized generic LLMs brings problems like high production costs, lack of differentiation from competitors, and zero explainability of decisions.

- Models become more effective and efficient when their architecture is adapted to a specific domain and task.

- The model tailoring process consists of three phases: Structure Optimization, Knowledge Recovery, and Specialization.

- The domain-specific dataset is a main element and common thread throughout the entire process, ensuring that each optimization and specialization phase is aligned with the final objective.

- Knowledge distillation transfers capabilities from the original teacher model to the pruned student model, so the student learns not only the correct answers but also the reasoning process that leads to them.

- Fine-tuning techniques like LoRA allow domain specialization by training only a small number of parameters, drastically reducing the cost and time needed.

- Modern architectures like LLaMA, Mistral, Gemma, and Qwen share structures that make them ideal for rearchitecting techniques.

- By mastering these techniques, developers can go from being model users to model architects.
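The claim that LoRA trains only a small number of parameters can be checked with simple arithmetic. This sketch assumes the standard LoRA formulation, in which a frozen weight matrix of shape `d_out × d_in` is augmented with two trainable low-rank matrices of shapes `d_out × rank` and `rank × d_in`. The layer size and rank are hypothetical values chosen for illustration, not figures from the chapter.

```python
def lora_trainable_params(d_in, d_out, rank):
    """Trainable parameters in the two low-rank adapter matrices."""
    return rank * (d_in + d_out)

def full_params(d_in, d_out):
    """Parameters in the original (frozen) weight matrix."""
    return d_in * d_out

# Hypothetical square projection layer and adapter rank.
d_in = d_out = 4096
rank = 8

lora = lora_trainable_params(d_in, d_out, rank)
full = full_params(d_in, d_out)
share = 100 * lora / full

print(f"LoRA: {lora:,} vs full: {full:,} ({share:.2f}% of the layer)")
# prints "LoRA: 65,536 vs full: 16,777,216 (0.39% of the layer)"
```

Under these assumptions the adapter holds well under 1% of the layer's parameters, which is where the savings in training cost and time come from.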

Rearchitecting LLMs ebook for free
