1 The data science process

Data science is presented as a practical, cross‑disciplinary craft aimed at improving decisions in business and science through data engineering, statistics, machine learning, and analytics. Success depends less on exotic tools and more on clear, quantitative goals, sound methodology, and a repeatable workflow carried out by a collaborative team. Key roles include a project sponsor who defines success and provides sign‑off, a client who represents day‑to‑day users and domain context, a data scientist who drives strategy and analysis, and supporting data architecture and operations partners to secure data and deploy results. A motivating example follows a bank seeking to reduce losses from bad loans, illustrating how problem framing and stakeholder alignment shape the entire effort.

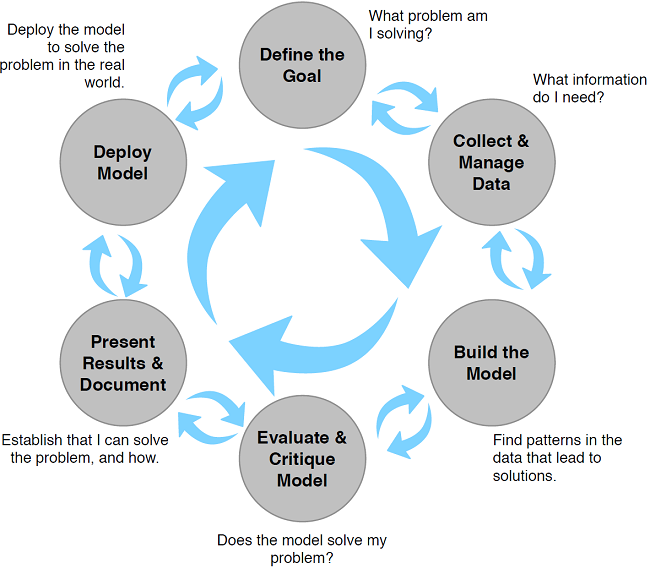

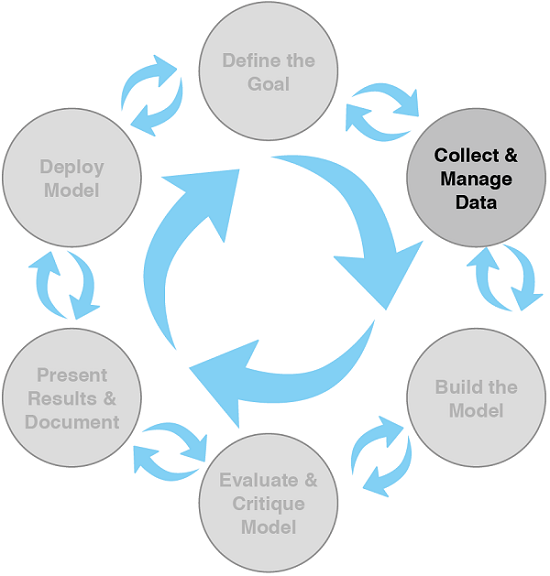

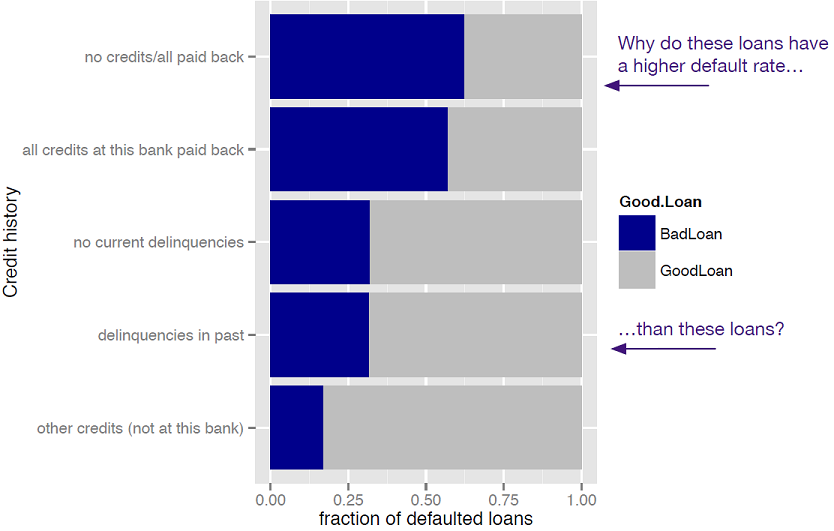

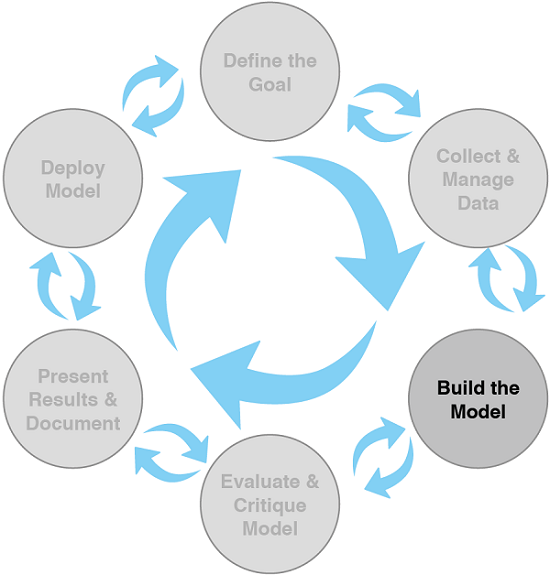

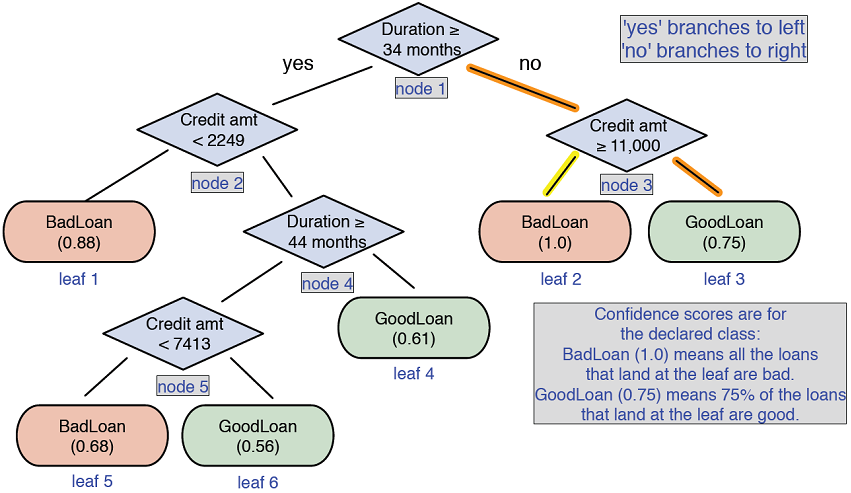

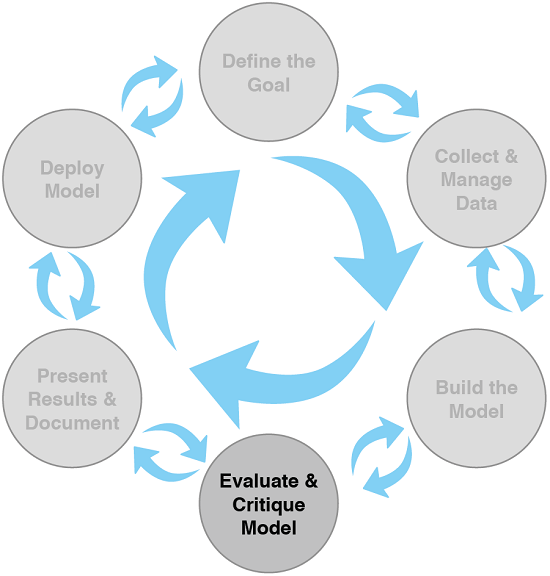

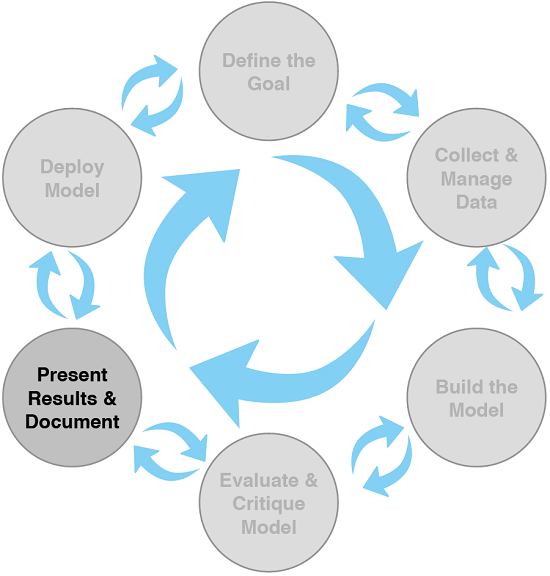

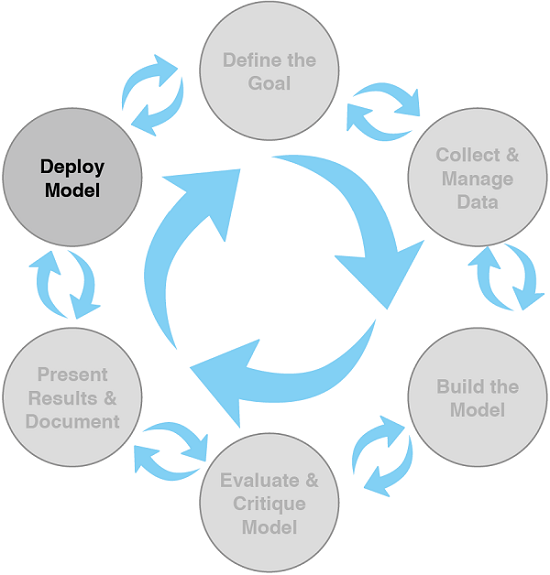

The chapter outlines an iterative lifecycle: defining a measurable goal, collecting and managing data, modeling, evaluation and critique, presentation and documentation, and deployment and maintenance. Goals must be specific and testable to bound scope and enable acceptance criteria. Data work typically dominates effort and can reveal issues such as sample bias that force reframing of objectives or features. Modeling tasks span classification, scoring, ranking, clustering, relation finding, and characterization; method choice is influenced by assumptions, data representation, and user needs for interpretability and confidence. Evaluation goes beyond overall accuracy to consider baselines and operationally relevant metrics (for example, recall, precision, and false positive rate), with frequent loops back to earlier stages to refine data, features, or goals.
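The evaluation metrics named above can be made concrete with a small sketch. This is an illustration in Python, not code from the chapter: the `ConfusionCounts` type, the function names, and the counts are all hypothetical, with "positive" standing for a loan flagged as bad.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts from comparing predictions to known outcomes (hypothetical)."""
    tp: int  # bad loans correctly flagged as bad
    fp: int  # good loans wrongly flagged as bad
    tn: int  # good loans correctly passed
    fn: int  # bad loans the model missed


def _ratio(numerator: int, denominator: int) -> float:
    # Return 0.0 rather than raising when a metric is undefined.
    return numerator / denominator if denominator else 0.0


def accuracy(c: ConfusionCounts) -> float:
    return _ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn)


def precision(c: ConfusionCounts) -> float:
    # Of the loans flagged as bad, what fraction really were bad?
    return _ratio(c.tp, c.tp + c.fp)


def recall(c: ConfusionCounts) -> float:
    # Of the loans that really were bad, what fraction did we flag?
    return _ratio(c.tp, c.tp + c.fn)


def false_positive_rate(c: ConfusionCounts) -> float:
    # Of the loans that really were good, what fraction did we flag?
    return _ratio(c.fp, c.fp + c.tn)


# Made-up counts for illustration only; not results from the chapter.
counts = ConfusionCounts(tp=30, fp=20, tn=130, fn=20)

print(f"accuracy            = {accuracy(counts):.3f}")
print(f"precision           = {precision(counts):.3f}")
print(f"recall              = {recall(counts):.3f}")
print(f"false positive rate = {false_positive_rate(counts):.3f}")
```

With these made-up counts, overall accuracy is 80 percent, yet the model catches only 60 percent of the bad loans. This gap is why accuracy alone can mislead when the operational cost lies in the missed cases.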

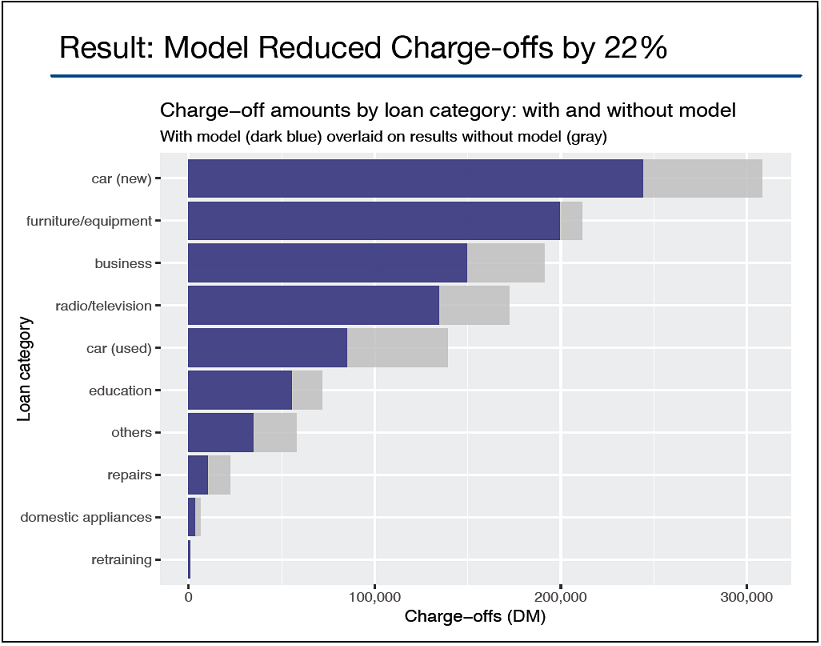

Deliverables must match audiences: sponsors need business impact framed in their metrics; end users need guidance on interpreting outputs, confidence scores, and when to override; operations needs clarity on performance, constraints, and maintenance. Deployment often starts with a pilot to surface unanticipated issues, and models require monitoring and updates as conditions change. The chapter closes by stressing expectation setting: quantify what “good enough” means, establish lower bounds via a null model or existing process, and ensure improvements are statistically meaningful and aligned to business priorities. Throughout, the process emphasizes transparent communication, measurable targets, trade‑offs between competing metrics, and a plan for ongoing stewardship of the model in production.
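One way to establish a lower bound is to take a null model that always predicts the most common outcome, then ask whether a candidate model's accuracy on a test set is plausibly just that baseline plus luck. The sketch below, in Python, is an assumption-laden illustration rather than the chapter's procedure: the labels, the count of correct predictions, and the function names are hypothetical, and the exact binomial tail is only a rough check that treats the baseline rate as fixed and the test cases as independent.

```python
from collections import Counter
from math import comb


def null_model_accuracy(labels: list[str]) -> float:
    """Accuracy of always predicting the most common label."""
    most_common_count = Counter(labels).most_common(1)[0][1]
    return most_common_count / len(labels)


def binomial_tail(n: int, k: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p), computed exactly."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical test set: 70 good loans and 30 bad loans.
labels = ["good"] * 70 + ["bad"] * 30
baseline = null_model_accuracy(labels)

# Hypothetical candidate model: 80 of the 100 test cases classified correctly.
model_correct = 80
model_accuracy = model_correct / len(labels)

# Chance of at least 80 correct if the true accuracy were only the baseline.
p_value = binomial_tail(len(labels), model_correct, baseline)

print(f"null model accuracy = {baseline:.2f}")
print(f"candidate accuracy  = {model_accuracy:.2f}")
print(f"one-sided p-value   = {p_value:.4f}")
```

A small p-value suggests the improvement is unlikely to be luck. It does not show that the improvement matters to the business, which still has to be judged against the sponsor's own metrics.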

Figure 1.1. The lifecycle of a data science project: loops within loops

Figure 1.2. The fraction of defaulting loans by credit history category. The dark region of each bar represents the fraction of loans in that category that defaulted.

Figure 1.3. A decision tree model for finding bad loan applications, with confidence scores

Figure 1.4. Example slide from an executive presentation

Summary

The data science process involves a lot of back-and-forth—between the data scientist and other project stakeholders, and between the different stages of the process. Along the way, you’ll encounter surprises and stumbling blocks; this book will teach you procedures for overcoming some of these hurdles. It’s important to keep all the stakeholders informed and involved; when the project ends, no one connected with it should be surprised by the final results.

In the next chapters, we’ll look at the stages that follow project design: loading, exploring, and managing the data. Chapter 2 covers a few basic ways to load the data into R, in a format that’s convenient for analysis.

In this chapter you have learned:

- That a successful data science project involves more than just statistics. It also requires a variety of roles to represent business and client interests, as well as operational concerns.

- That you should make sure you have a clear, verifiable, quantifiable goal.

- That you should make sure you’ve set realistic expectations for all stakeholders.

FAQ

What does “data science” mean in this chapter’s context?

Data science is a cross‑disciplinary practice that applies data engineering, descriptive statistics, data mining, machine learning, and predictive analytics to make data‑driven decisions and manage their consequences. The chapter focuses on solving business and scientific problems using these techniques.

Which roles are involved in a data science project and what do they do?

- Project sponsor: represents business interests and decides success or failure.

- Client: represents end users and serves as domain expert.

- Data scientist: sets analytic strategy, executes the work, and communicates with stakeholders.

- Data architect: manages data and storage (often outside the core team).

- Operations: manages infrastructure and deployment (often outside the core team).

Roles may overlap in practice.

Practical Data Science with R, Second Edition ebook for free