1 Using generative AI in web apps

Generative AI web apps weave advanced models, especially large language models (LLMs), into modern interfaces to create original text, images, audio, and video on demand. By generating content dynamically, they enable conversational experiences, intelligent automation, and personalized interactions that go beyond static logic. This chapter introduces what these apps can do, how they differ from traditional AI, and the core concepts needed to design and deploy them. It also sets expectations for the tech stack used throughout the book (React, Next.js, and the Vercel AI SDK, with models from providers such as Google AI and OpenAI) and previews secure access to external tools and data through the Model Context Protocol. The chapter assumes basic familiarity with JavaScript and React.

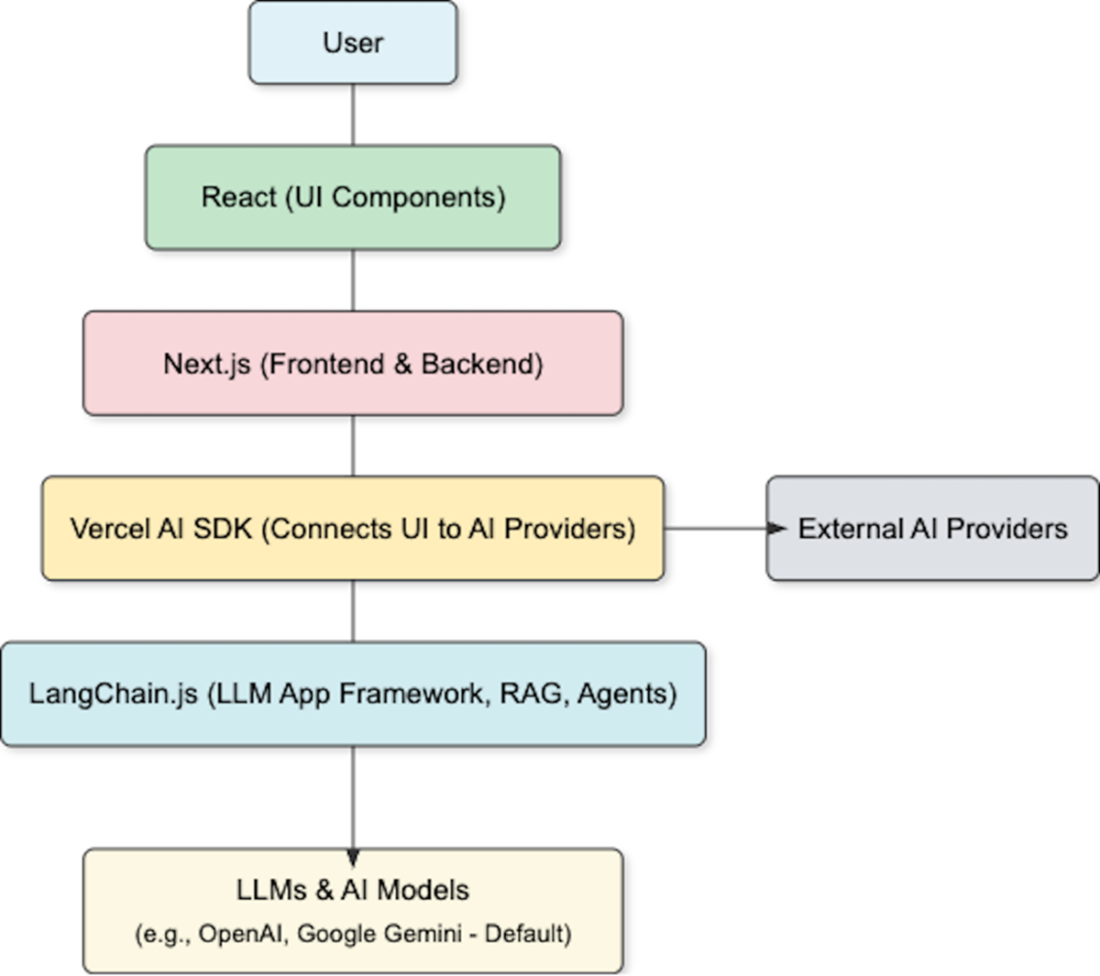

At a high level, these apps combine user-facing UIs and conversational agents with robust backends that preprocess inputs, select and call models, post-process outputs, and deliver results, often with a feedback loop. The architecture spans caching, serverless functions, containers and orchestration, and model-serving frameworks, alongside data pipelines and third-party API integrations, all built to deploy and scale reliably. The chapter outlines a typical interaction flow from user input to response delivery and surveys model choices and their trade-offs: model families (transformer-based LLMs, autoregressive models, GANs, VAEs, and RNNs), pre-trained services versus self-hosting, and performance considerations such as latency and resource costs. The practical tooling centers on React and Next.js for the UI and backend, the Vercel AI SDK for provider-agnostic model integration, and LangChain.js for capabilities such as Retrieval-Augmented Generation (RAG).
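The idea behind provider-agnostic integration can be shown without any SDK. The sketch below is a minimal illustration, assuming a hypothetical `TextModel` interface and stub adapters for Google and OpenAI; it is not the Vercel AI SDK's API and makes no network calls. Application code depends only on the interface, so switching providers means passing a different object.

```typescript
// Hypothetical provider-neutral interface (NOT the Vercel AI SDK's API).
interface TextModel {
  readonly provider: string;
  readonly modelId: string;
  generate(prompt: string): Promise<string>;
}

// Stub adapter factory. A real adapter would call the provider's SDK or HTTP API here.
function createStubModel(provider: string, modelId: string): TextModel {
  return {
    provider,
    modelId,
    async generate(prompt: string): Promise<string> {
      return `[${provider}/${modelId}] ${prompt}`;
    },
  };
}

// Application code depends only on TextModel, so swapping providers
// does not require rewriting this function.
async function summarize(model: TextModel, text: string): Promise<string> {
  return model.generate(`Summarize in one sentence: ${text.trim()}`);
}

// Placeholder model IDs, for illustration only.
const models: Record<string, TextModel> = {
  google: createStubModel("google", "gemini-placeholder"),
  openai: createStubModel("openai", "gpt-placeholder"),
};
```

With the real SDK the pattern is broadly similar: the calling code stays the same and only the provider model object passed to it changes.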

Because these systems are powerful, the chapter emphasizes responsibility and pragmatism: managing quality and hallucinations, containing costs, guarding against misuse, and complying with privacy and data-protection rules when handling sensitive information. It recommends concrete validation tactics (clear objectives, careful prompting, parameter tuning, cross-checks), approaches to mitigate bias (curated knowledge bases, diverse training sources, auditing), and UX practices that improve trust and satisfaction (fast, accessible, personalized, and multimodal interactions with features like streaming). Acknowledging the impact on developer workflows, it frames AI as an accelerant for higher-value work. The book reinforces these principles through hands-on projects, including a voice-driven interview assistant with AI feedback and a RAG-powered knowledge system, to help readers build production-ready generative AI applications.
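One way to apply the cross-checking tactic is to request structured JSON from the model and validate it before use, retrying a bounded number of times. This is a sketch under stated assumptions: `Generate` is a hypothetical model-call signature (kept synchronous for brevity; real calls are asynchronous), and the `InterviewFeedback` shape, the 1 to 10 score range, and the retry limit are illustrative choices rather than anything the chapter prescribes.

```typescript
// Illustrative shape for structured model output, e.g. interview feedback.
interface InterviewFeedback {
  score: number; // expected integer from 1 to 10
  summary: string;
}

// Hypothetical model-call signature (synchronous only to keep the sketch short).
type Generate = (prompt: string) => string;

// Parse and check raw model text; return null if any check fails.
function parseFeedback(raw: string): InterviewFeedback | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null; // not valid JSON
  }
  if (typeof data !== "object" || data === null) return null;
  const { score, summary } = data as Record<string, unknown>;
  if (typeof score !== "number" || !Number.isInteger(score) || score < 1 || score > 10) return null;
  if (typeof summary !== "string" || summary.trim() === "") return null;
  return { score, summary };
}

// Ask again, up to maxAttempts times, until the output passes validation.
function generateValidated(generate: Generate, prompt: string, maxAttempts = 3): InterviewFeedback {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const result = parseFeedback(generate(prompt));
    if (result) return result;
  }
  throw new Error(`No valid output after ${maxAttempts} attempts`);
}
```

Failing loudly after the last attempt lets the caller fall back to a safe message instead of showing malformed output to the user.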

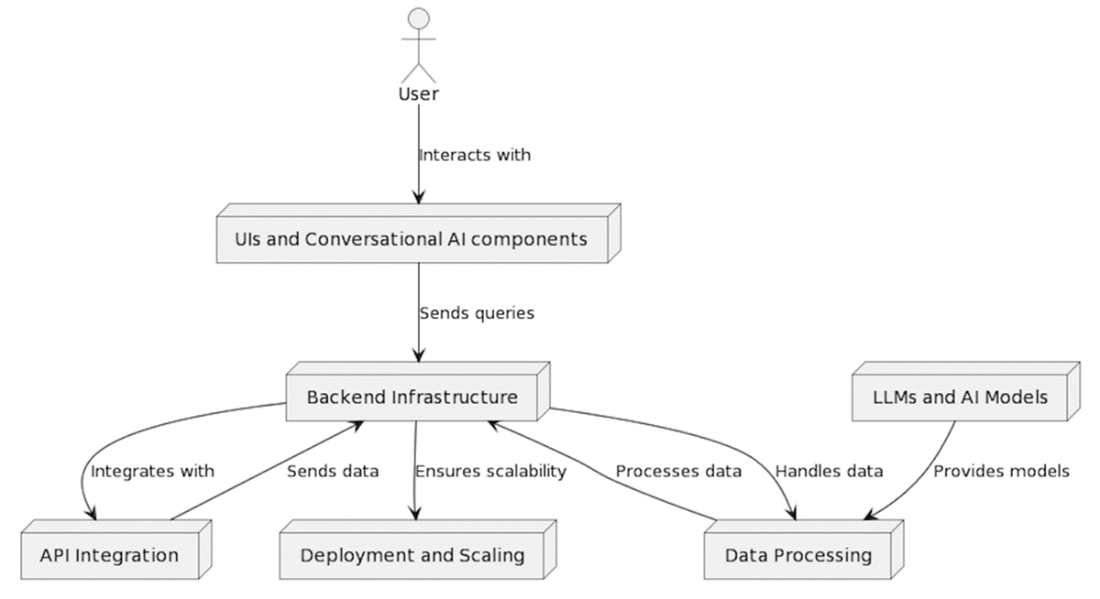

The flow of information and interactions between the key components of a generative AI web application.

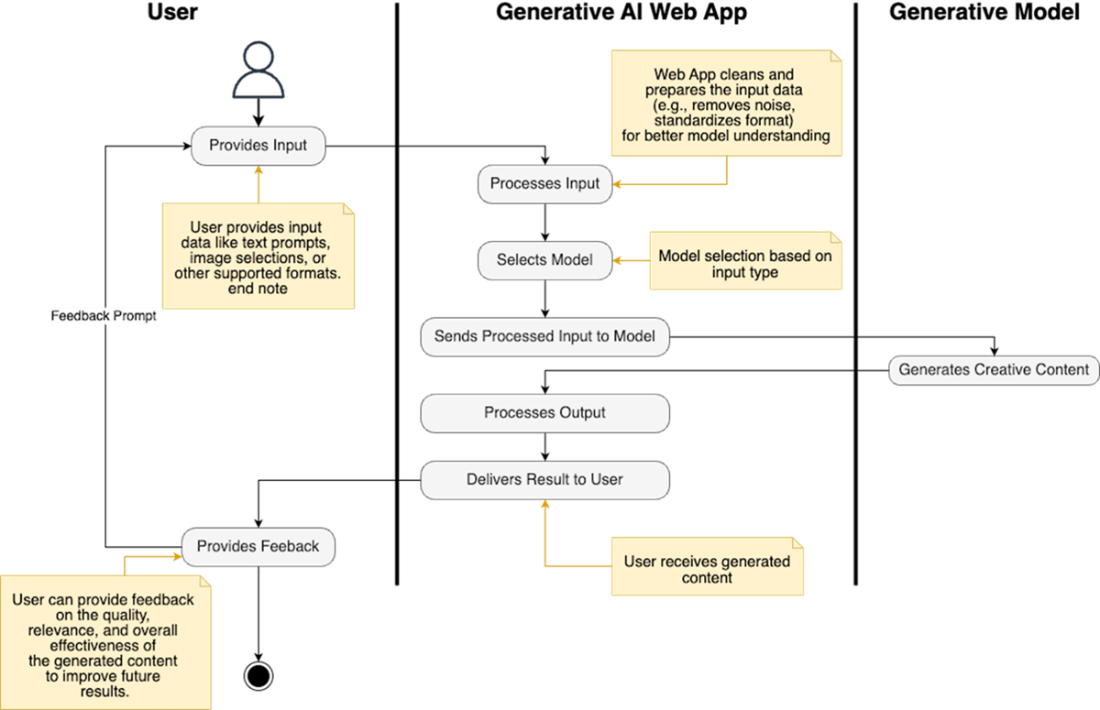

How an AI web app works: users input data, the app processes it, selects a model, generates content, delivers it, and optionally collects feedback.

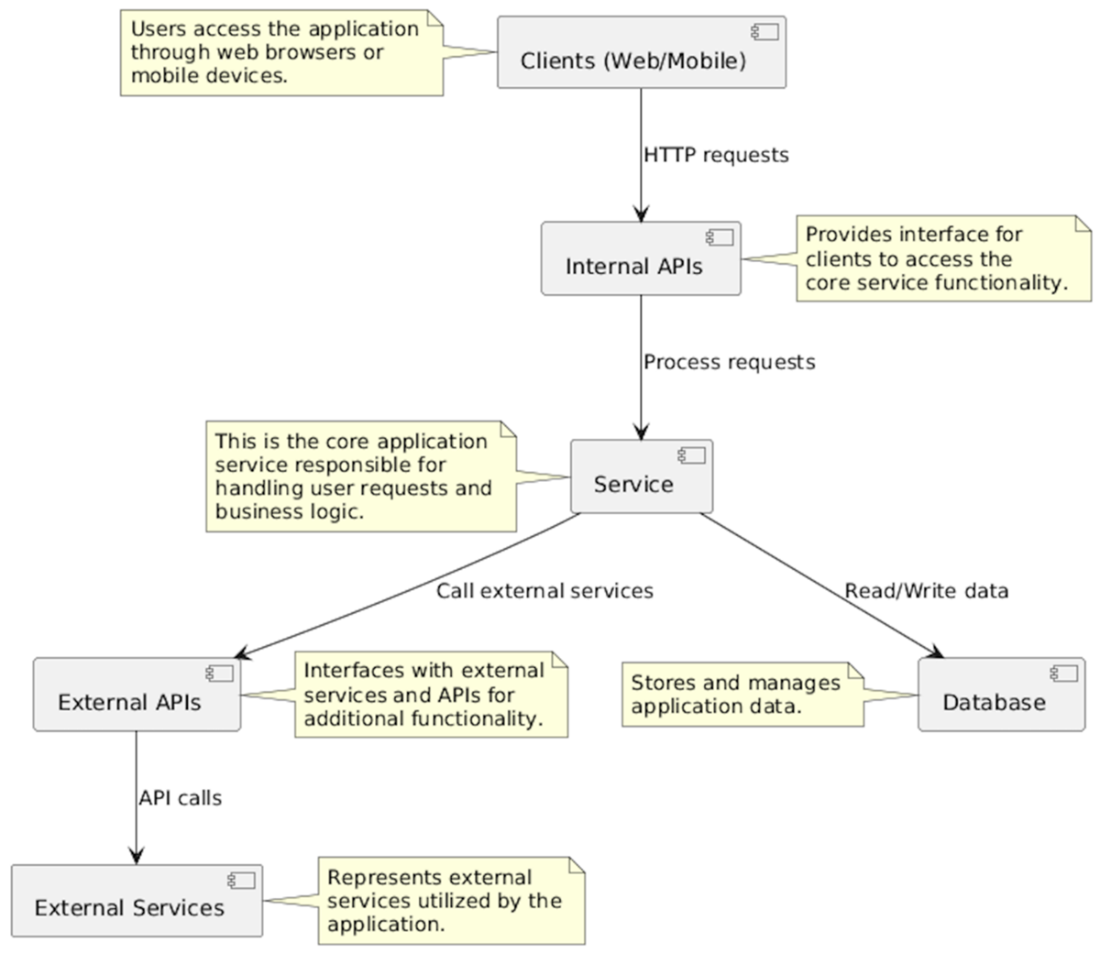

Simplified architecture diagram of a web application ecosystem. Clients, including web browsers and mobile devices, interact with the core application service, which handles user requests and business logic. The service uses a database to store and manage application data, calls external APIs for additional functionality, and integrates with other external services the application relies on.

Leveraging key technologies to create generative AI web applications

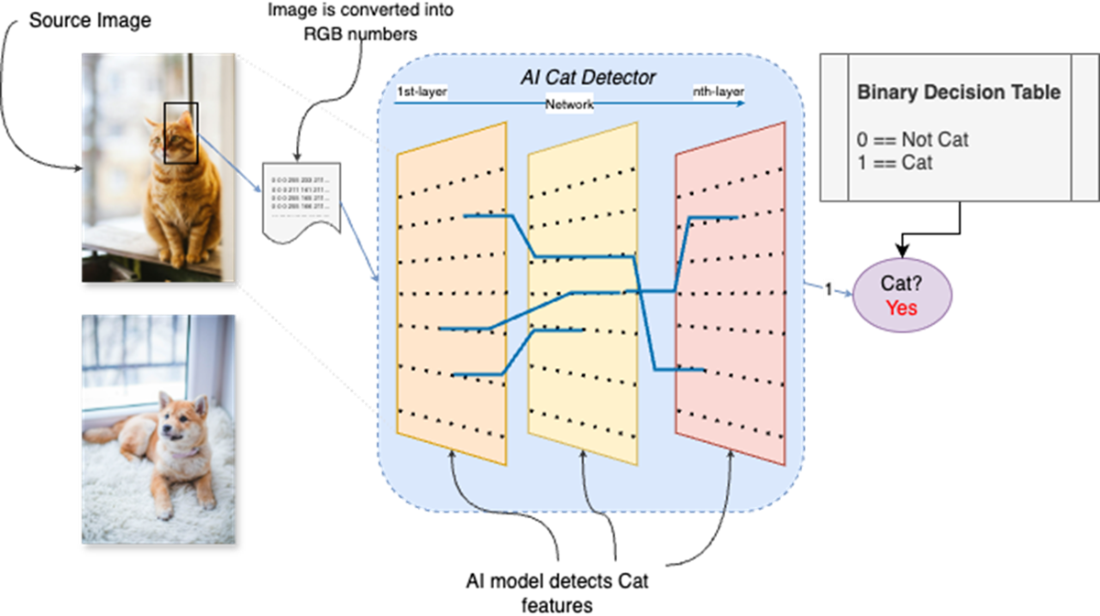

How traditional AI can be used to detect whether a picture contains a cat. The model accepts an image as input and responds with yes or no (or 1 and 0).

Summary

- Generative AI can produce not only text but also other media such as images, video clips, and audio. This broadens its potential in web applications, where real-world uses range from digital marketing and customer experience management to mock interview applications.

- Generative AI web apps center on powerful models like large language models (LLMs) to create content from user input. The apps require a full supporting ecosystem to integrate with the model, including UI and conversational AI components, backend infrastructure, data processing pipelines, API integration, and deployment and scaling mechanisms.

- The apps we build in this book will use JavaScript and React to build the UI components, along with Next.js and the Vercel AI SDK to manage the backend and interact with external AI service providers.

- Choosing the right model for an app is a key architectural decision that depends on the task. Different model types (such as LLMs, GANs, autoregressive models, transformers, VAEs, and RNNs) excel at different kinds of problems. But the model architecture is only one consideration; developers also need to consider the quality and type of data the model was trained on.

- Software engineers were using AI long before generative AI emerged. Common applications include machine learning, search recommendations, chatbots, and computer vision.

- Foundational research such as Google's "Attention Is All You Need" introduced the transformer architecture, which simplified natural language processing tasks by relying on attention mechanisms. Transformers revolutionized language modeling by improving efficiency and accuracy in understanding text, addressing long-standing challenges faced by traditional AI models, such as capturing long-range context.

- Limitations of generative AI include quality control issues, resource intensity, security concerns, and regulatory compliance. Broader concerns include its potential impact on jobs, the reliability of its outputs, the handling of bias, and the need to deliver a good user experience.
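The attention mechanism at the core of the transformer is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, as defined in "Attention Is All You Need". The sketch below computes it for small matrices with plain arrays to show what "attending" means numerically; it omits learned projections, multiple heads, masking, and batching, and the example values are arbitrary.

```typescript
type Matrix = number[][];

// Numerically stable softmax over one row of scores.
function softmax(row: number[]): number[] {
  const max = Math.max(...row);
  const exps = row.map((x) => Math.exp(x - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.
// Q: n x d_k queries, K: m x d_k keys, V: m x d_v values -> n x d_v output.
function attention(Q: Matrix, K: Matrix, V: Matrix): Matrix {
  const dK = K[0].length;
  return Q.map((q) => {
    // Similarity of this query to every key, scaled by sqrt(d_k).
    const scores = K.map((k) => q.reduce((acc, qi, i) => acc + qi * k[i], 0) / Math.sqrt(dK));
    const weights = softmax(scores); // attention weights sum to 1
    // Output is the attention-weighted sum of the value vectors.
    return V[0].map((_, j) => weights.reduce((acc, w, i) => acc + w * V[i][j], 0));
  });
}

const out = attention([[1, 0]], [[1, 0], [0, 1]], [[1, 2], [3, 4]]);
console.log(out); // the query matches the first key, so the result leans toward [1, 2]
```

Because every query is compared with every key in one step, each position can draw on context anywhere in the sequence, which is the long-range behavior the summary refers to.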

FAQ

What is a generative AI web app and what can it do?

It’s a web application that integrates advanced AI models—often large language models (LLMs)—to generate text, images, audio, or video dynamically. This enables conversational interfaces, personalized content, intelligent automation, and new interactive experiences like code assistants and creative tools.

How does generative AI differ from traditional AI?

Traditional AI typically classifies or predicts (e.g., “cat vs not cat”). Generative AI learns patterns well enough to produce new content that resembles its training data. Modern capabilities are powered by transformers and self-attention, which capture long-range context to generate coherent, contextually relevant outputs.
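The contrast can be made concrete with two toy functions. The classifier maps a score to a fixed label (the "cat vs not cat" pattern), while the generator produces new text one token at a time from a bigram table, a drastically simplified stand-in for how autoregressive language models extend a sequence. The threshold, the hand-written counts, and greedy decoding are all illustrative assumptions; real LLMs learn next-token probabilities with transformers and usually sample rather than always taking the top token.

```typescript
// Traditional AI: map an input to a fixed label (1 = cat, 0 = not cat).
// `catProbability` stands in for the output of a trained image classifier.
function classifyCat(catProbability: number, threshold = 0.5): 0 | 1 {
  return catProbability >= threshold ? 1 : 0;
}

// Generative AI (toy): hypothetical hand-written next-token counts.
// A real model learns these statistics from its training data.
const bigramCounts: Record<string, Record<string, number>> = {
  "<start>": { the: 3, a: 1 },
  the: { cat: 2, dog: 1 },
  a: { cat: 1 },
  cat: { sat: 2, ran: 1 },
  dog: { ran: 1 },
  sat: { "<end>": 1 },
  ran: { "<end>": 1 },
};

// Greedy autoregressive decoding: repeatedly append the most likely next token.
function generateGreedy(maxTokens = 10): string[] {
  const tokens: string[] = [];
  let current = "<start>";
  for (let i = 0; i < maxTokens; i++) {
    const candidates = bigramCounts[current];
    if (!candidates) break;
    let best = "<end>";
    let bestCount = -1;
    for (const [token, count] of Object.entries(candidates)) {
      if (count > bestCount) {
        best = token;
        bestCount = count;
      }
    }
    if (best === "<end>") break;
    tokens.push(best);
    current = best; // the new token becomes context for the next step
  }
  return tokens;
}
```

The classifier can only ever answer 0 or 1, whereas the generator's output is a new sequence assembled step by step from learned (here, invented) patterns.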

What are the core components of a generative AI web app?

- LLMs and AI models for content generation

- UIs and conversational components (chatbots, agents)

- Backend infrastructure (caching, containers/orchestration, serverless, model serving)

- Data processing (pre- and post-processing, feature extraction)

- API integrations to external services

- Deployment and scaling for reliability and spikes
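Caching, listed under backend infrastructure, is often used to avoid paying twice for identical prompts. The sketch below is a minimal in-memory cache with a time-to-live, keyed on model ID plus prompt. It assumes that reusing an earlier answer is acceptable for identical requests (for example, at low temperature) and that a single process is enough; a deployed app would more likely use a shared store. `ModelCall` and `withCache` are hypothetical names.

```typescript
// Hypothetical signature for any function that calls a model.
type ModelCall = (modelId: string, prompt: string) => Promise<string>;

interface CacheEntry {
  value: string;
  expiresAt: number; // epoch milliseconds
}

// Wrap a model call with an in-memory TTL cache keyed on model + prompt.
// `now` is injectable so expiry can be tested without waiting.
function withCache(call: ModelCall, ttlMs: number, now: () => number = Date.now): ModelCall {
  const cache = new Map<string, CacheEntry>();
  return async (modelId, prompt) => {
    const key = JSON.stringify([modelId, prompt]);
    const hit = cache.get(key);
    if (hit && hit.expiresAt > now()) return hit.value; // fresh cached answer
    const value = await call(modelId, prompt); // cache miss or expired entry
    cache.set(key, { value, expiresAt: now() + ttlMs });
    return value;
  };
}
```

Keying on the model ID as well as the prompt keeps answers from different models separate.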

How does the user-to-model interaction flow work?

- User input through the UI (text, images, selections)

- Backend processing (cleaning, feature extraction, model selection)

- Content generation via selected model and APIs (optionally with RAG)

- Response delivery with an optional feedback loop to refine future results
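The four steps above can be sketched as a single request handler. Everything here is hypothetical: the cleaning rules in `preprocess`, the keyword routing in `selectModel`, the model IDs, and the in-memory feedback log are placeholders for what a real app would use (for example, a Next.js route handler calling a model provider). The stub `callModel` returns canned text so the sketch runs without network access.

```typescript
interface FeedbackEntry {
  requestId: number;
  helpful: boolean;
}

interface AppResponse {
  requestId: number;
  modelId: string;
  text: string;
}

// 1. User input -> cleaned prompt (trim, collapse whitespace, cap length).
function preprocess(input: string, maxChars = 2000): string {
  return input.trim().replace(/\s+/g, " ").slice(0, maxChars);
}

// 2. Backend processing: pick a model for the task (hypothetical rule and IDs).
function selectModel(prompt: string): string {
  return /\b(code|function|bug)\b/i.test(prompt) ? "code-model" : "general-model";
}

// 3. Content generation: stub standing in for a real provider call.
async function callModel(modelId: string, prompt: string): Promise<string> {
  return `(${modelId}) response to: ${prompt}  `;
}

// Optional feedback loop: store ratings so prompts or models can be tuned later.
const feedbackLog: FeedbackEntry[] = [];
function recordFeedback(entry: FeedbackEntry): void {
  feedbackLog.push(entry);
}

let nextRequestId = 1;

// 4. Response delivery: return post-processed text plus an ID the UI can rate.
async function handleRequest(userInput: string): Promise<AppResponse> {
  const prompt = preprocess(userInput);
  if (prompt === "") throw new Error("Empty input");
  const modelId = selectModel(prompt);
  const raw = await callModel(modelId, prompt);
  return { requestId: nextRequestId++, modelId, text: raw.trim() }; // post-processing
}
```

Returning a request ID with each response is one simple way to connect later user feedback to the exact model and prompt that produced it.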

Which tools and frameworks does the chapter recommend, and why?

- React for UI components and accessibility

- Next.js for backend integration and data fetching

- Vercel AI SDK for multi-provider AI abstractions, streaming, and state

- LangChain.js for RAG, agents, and composable chains

- Models/providers: Google Gemini (default), OpenAI as needed

These choices offer seamless integration, good developer ergonomics, and production-ready patterns.
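Streaming lets the UI render text as it arrives instead of waiting for the full response. In the Vercel AI SDK, the result of `streamText` exposes a `textStream` that can be consumed with `for await`; to stay dependency-free, the sketch below uses a hypothetical async generator, `fakeTextStream`, in its place and shows only the consuming side: accumulate chunks and pass each partial result to an `onUpdate` callback (in React, typically a state setter).

```typescript
// Hypothetical stand-in for a model's streamed text output.
async function* fakeTextStream(chunks: string[]): AsyncIterable<string> {
  for (const chunk of chunks) {
    yield chunk;
  }
}

// Consume a stream of text chunks, reporting the growing text after each one.
async function consumeStream(
  stream: AsyncIterable<string>,
  onUpdate: (partialText: string) => void,
): Promise<string> {
  let text = "";
  for await (const chunk of stream) {
    text += chunk;
    onUpdate(text); // e.g. a React state setter that re-renders the message
  }
  return text;
}
```

Passing the accumulated text (rather than only the newest chunk) keeps the UI update idempotent: each render simply shows the latest full string.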

How should I choose the right model and provider?

- Match model type to task (text, image, code, multimodal)

- Assess training data alignment with your domain

- Evaluate latency, cost, and resource needs

- Plan UI, pre-processing, and post-processing

- Consider RAG or fine-tuning for domain specificity
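The task, latency, and cost criteria above can be turned into a simple selection rule. The sketch filters a catalog of candidate models by task, latency budget, and cost ceiling, then picks the cheapest remaining option. The catalog entries, latencies, and prices are invented placeholders, not real provider figures, and output quality is not modeled; in practice these numbers would come from provider pricing and your own measurements and evaluations.

```typescript
type Task = "text" | "image" | "code" | "multimodal";

interface ModelProfile {
  id: string; // hypothetical model identifiers
  tasks: Task[];
  p50LatencyMs: number; // median latency (placeholder values)
  costPer1kTokensUsd: number; // placeholder pricing
}

interface Requirements {
  task: Task;
  maxLatencyMs: number;
  maxCostPer1kTokensUsd: number;
}

// Keep models that satisfy every constraint, then prefer the cheapest (ties: the fastest).
function chooseModel(catalog: ModelProfile[], req: Requirements): ModelProfile | undefined {
  return catalog
    .filter((m) => m.tasks.includes(req.task))
    .filter((m) => m.p50LatencyMs <= req.maxLatencyMs)
    .filter((m) => m.costPer1kTokensUsd <= req.maxCostPer1kTokensUsd)
    .sort((a, b) => a.costPer1kTokensUsd - b.costPer1kTokensUsd || a.p50LatencyMs - b.p50LatencyMs)[0];
}

const catalog: ModelProfile[] = [
  { id: "small-fast", tasks: ["text", "code"], p50LatencyMs: 300, costPer1kTokensUsd: 0.0005 },
  { id: "large-multimodal", tasks: ["text", "image", "multimodal"], p50LatencyMs: 1200, costPer1kTokensUsd: 0.01 },
  { id: "code-specialist", tasks: ["code"], p50LatencyMs: 800, costPer1kTokensUsd: 0.002 },
];
```

Returning `undefined` when nothing qualifies makes the trade-off explicit: the caller must relax a budget or accept a different model rather than silently getting one that breaks a constraint.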

Should I use pre-trained APIs or host my own models?

Pre-trained APIs (e.g., Gemini, OpenAI) are fastest to adopt and reduce operational burden. Self-hosting offers maximum control and customization but requires significant ML expertise, infrastructure, and cost. The chapter’s projects use pre-trained APIs for practicality.

What real-world use cases does the chapter highlight?

- Digital marketing: image generation, image-to-image, and copywriting

- Customer experience: chatbots, sentiment analysis, dynamic replies

- Mock interviews: AI interviewer agents, speech-to-text, adaptive difficulty

What are the main risks and limitations to plan for?

- Quality control and hallucinations affecting accuracy

- Resource intensity and cost of training/inference

- Security risks and potential misuse (impersonation, misinformation)

- Regulatory compliance and PII handling (GDPR, CCPA)

- Bias in outputs; mitigate via diverse data, scope control, and bias audits
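PII handling often starts with not sending personal data to a third-party model at all. The sketch below redacts email addresses and phone-number-like digit runs with regular expressions before a prompt leaves the server. The patterns are deliberately simple assumptions: they miss many kinds of PII (names, addresses, ID numbers) and will flag some non-phone digit runs such as dates, so this shows where redaction fits in pre-processing, not a complete GDPR or CCPA control.

```typescript
// Simple, incomplete PII patterns (illustrative only).
const PII_PATTERNS: Array<{ label: string; pattern: RegExp }> = [
  { label: "EMAIL", pattern: /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g },
  // Optional "+", then 7 or more digits, each optionally preceded by up to two separators.
  { label: "PHONE", pattern: /\+?\d(?:[\s().-]{0,2}\d){6,}/g },
];

// Replace each match with a placeholder and count matches per PII type.
function redactPII(text: string): { redacted: string; counts: Record<string, number> } {
  const counts: Record<string, number> = {};
  let redacted = text;
  for (const { label, pattern } of PII_PATTERNS) {
    redacted = redacted.replace(pattern, () => {
      counts[label] = (counts[label] ?? 0) + 1;
      return `[${label}]`;
    });
  }
  return { redacted, counts };
}
```

The counts can be logged (without the original values) to monitor how often users paste personal data.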

How can I improve reliability and user experience?

- Validate outputs: clear objectives, context framing, parameter tuning, cross-checking

- Enhance UX: streaming responses, multimodal inputs, personalization

- Strengthen systems: caching, scalable deployment, feedback loops

- Use RAG to ground answers in verified knowledge sources
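Grounding with RAG means retrieving relevant passages and placing them in the prompt with an instruction to answer only from them. So that it runs without dependencies, the sketch below scores documents by naive keyword overlap; production RAG systems typically use embeddings and a vector store instead. The documents and the `retrieve` and `buildGroundedPrompt` helpers are hypothetical.

```typescript
interface Doc {
  id: string;
  text: string;
}

// Lowercase word tokens, ignoring words of two characters or fewer.
function tokenize(s: string): Set<string> {
  return new Set(s.toLowerCase().match(/[a-z0-9]+/g)?.filter((w) => w.length > 2) ?? []);
}

// Rank documents by how many query words they share (stand-in for vector similarity).
function retrieve(query: string, docs: Doc[], k = 2): Doc[] {
  const q = tokenize(query);
  return docs
    .map((doc) => {
      let score = 0;
      tokenize(doc.text).forEach((w) => {
        if (q.has(w)) score++;
      });
      return { doc, score };
    })
    .filter((r) => r.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map((r) => r.doc);
}

// Put the retrieved passages in the prompt and tell the model to stay within them.
function buildGroundedPrompt(question: string, context: Doc[]): string {
  const sources = context.map((d) => `[${d.id}] ${d.text}`).join("\n");
  return [
    "Answer using only the sources below. If they do not contain the answer, say you don't know.",
    "Sources:",
    sources,
    `Question: ${question}`,
  ].join("\n");
}
```

Labeling each passage with its ID also lets the model cite sources, which makes answers easier to verify.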

Build AI-Enhanced Web Apps ebook for free