4 Improving reasoning with inference-time scaling

This chapter shows how to improve a model’s reasoning without retraining it by spending more compute during inference. It frames “inference-time scaling” as a practical complement to training-time scaling: you can get better answers by letting the model think longer and/or by sampling more. The chapter extends a flexible text-generation pipeline to plug in new decoding strategies and demonstrates, on math problems, that these inference-time methods can more than double accuracy compared to a greedy baseline—at the cost of generating more tokens and thus higher latency and compute.

The first method is chain-of-thought prompting: nudging the model to explain its steps (for example, by appending “Explain step by step.”) often boosts reliability on multi-step problems because it aligns with patterns seen in training data and creates chances for self-correction. The chapter then equips the generator to produce diverse answers by rescaling logits with temperature and sampling with multinomial draws, while adding top-p (nucleus) filtering to suppress low-probability tokens and balance diversity with coherence. These components are integrated into a pluggable generation function, highlighting the trade-off: richer, more accurate reasoning typically means longer outputs and higher cost, and not every task or model benefits (overthinking can hurt on simple items, and some reasoning-tuned models already explain themselves).

The second method, self-consistency, uses temperature and top-p to sample multiple complete solutions, extracts the final answer from each, and returns the majority vote. Although simple, this voting step materially improves accuracy and can be parallelized across devices. Empirically, a greedy base model at around 15% accuracy rises to about 41% with chain-of-thought; temperature plus top-p alone yields only modest gains; self-consistency brings the base model into the low 30% range with more samples; and combining self-consistency with chain-of-thought reaches roughly 52%, although runtime grows substantially. A reasoning-tuned model also benefits (about 48% to 55%). The chapter closes with practical guidance on temperature (roughly 0.5–0.9) and top-p (about 0.7–0.9), caveats such as tie handling and the need for extractable final answers, and a preview of the next chapter's broader, iterative self-refinement approach.

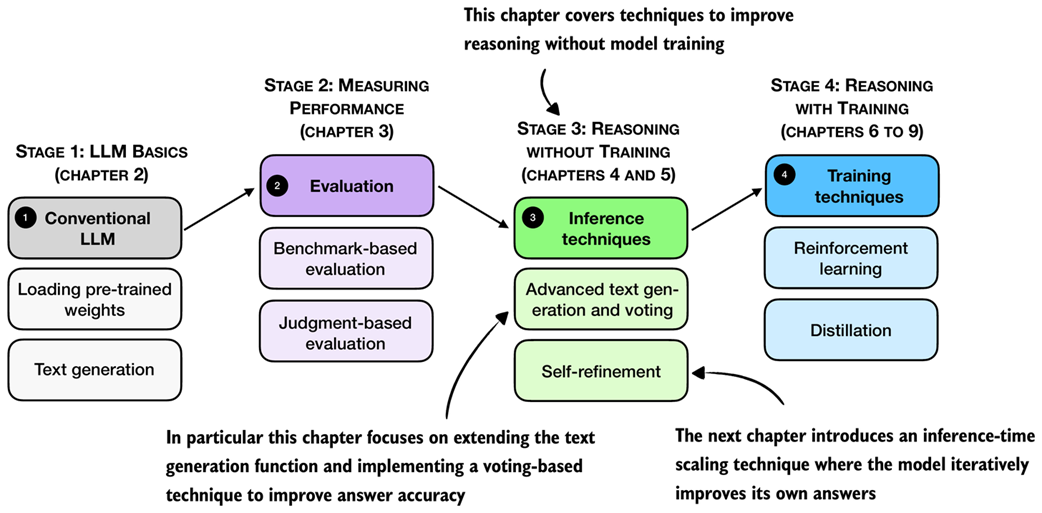

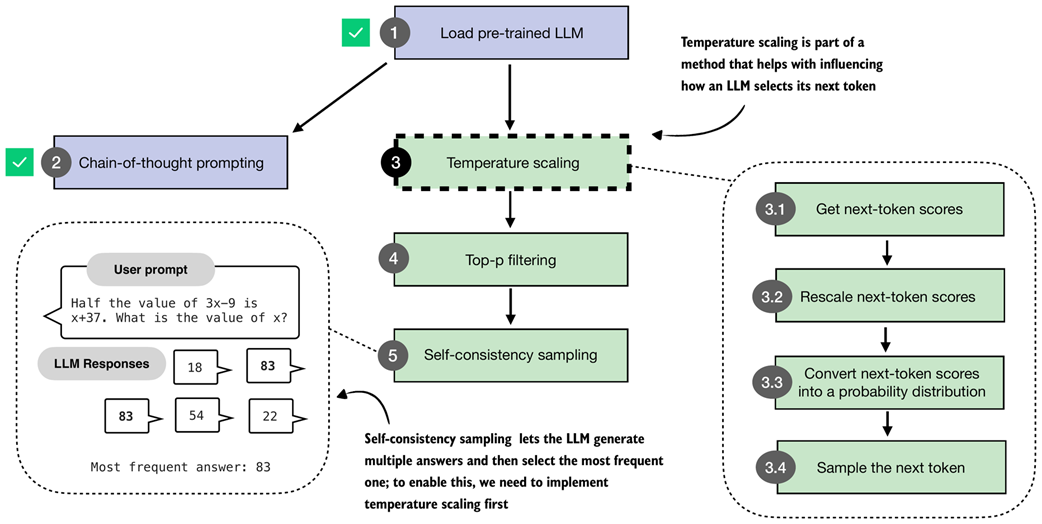

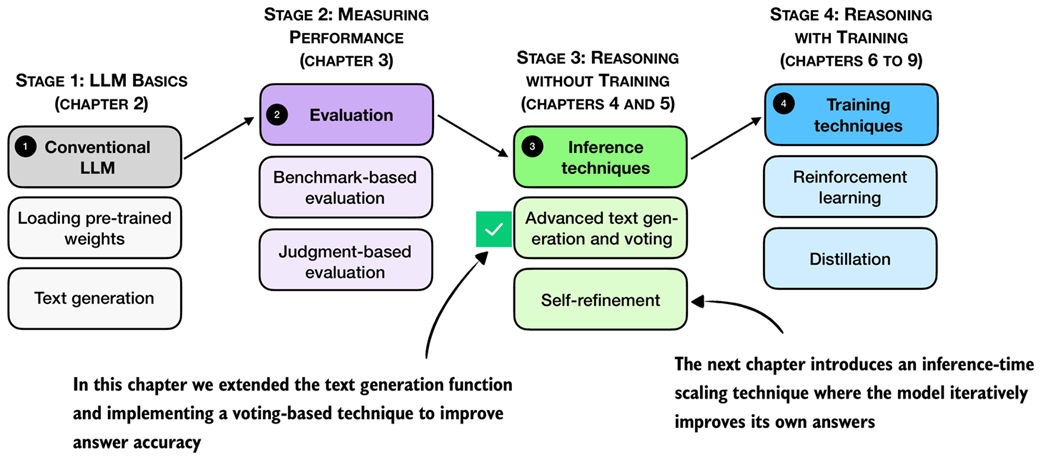

A mental model of the topics covered in this book. This chapter focuses on techniques that improve reasoning without additional training (stage 3). In particular, it extends the text-generation function and implements a voting-based method to improve answer accuracy. The next chapter then introduces an inference-time scaling approach where the model iteratively refines its own answers.

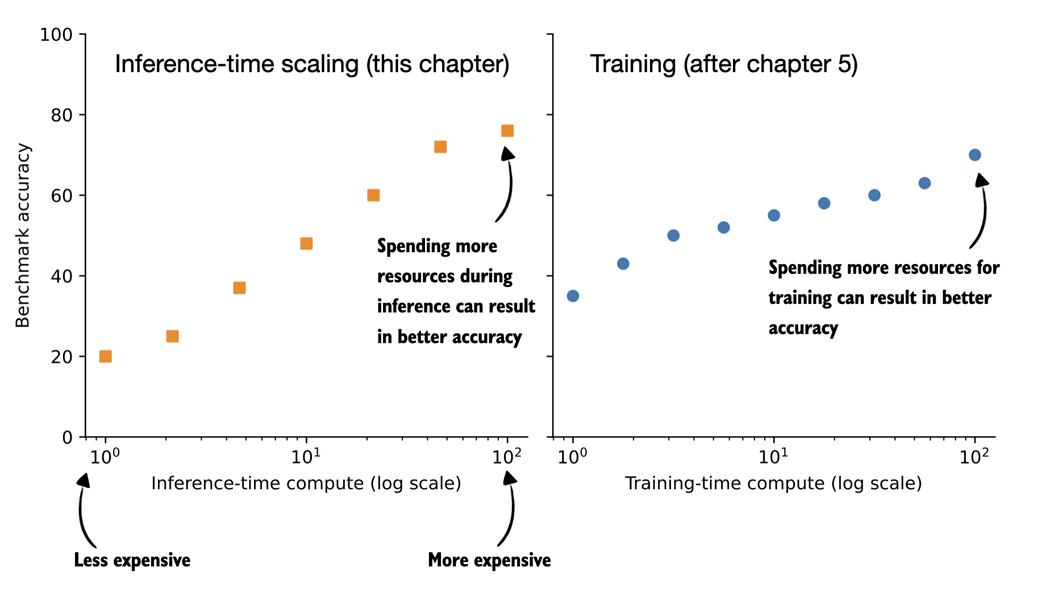

Comparison of inference-time scaling (this chapter) and training-time scaling (after chapter 5). Both improve accuracy by using more compute, but inference-time scaling does this on the fly, without changing the model's weight parameters. The plots are inspired by OpenAI's article introducing their first reasoning model (https://openai.com/index/learning-to-reason-with-llms/).

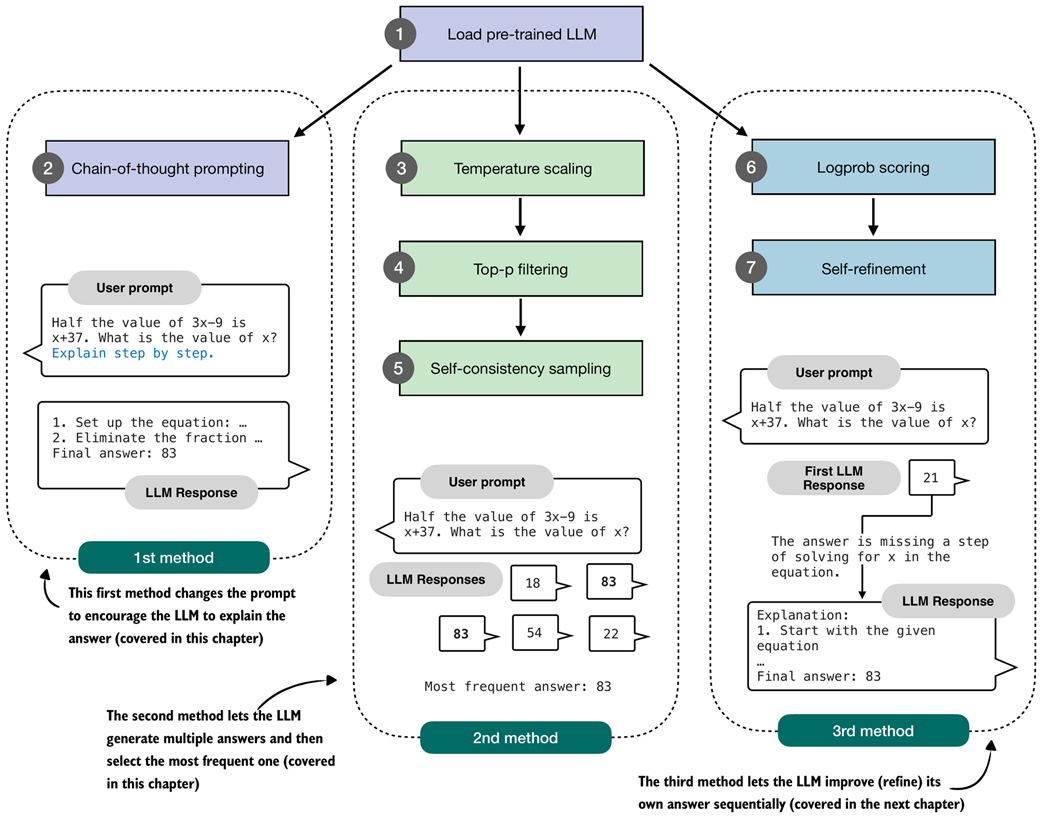

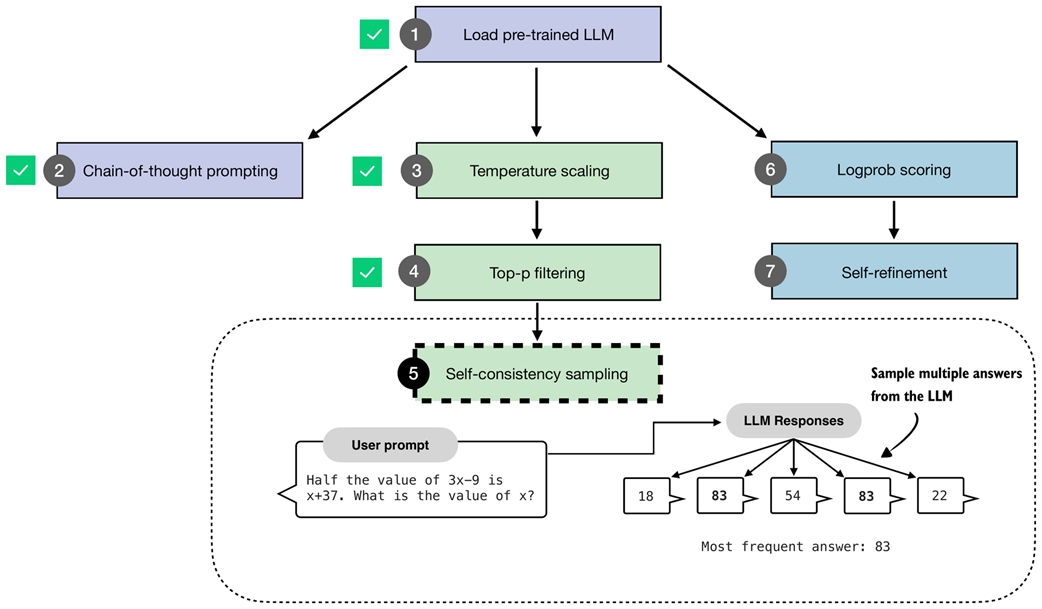

Overview of three inference-time methods to improve reasoning covered in this book. The first modifies the prompt to encourage step-by-step reasoning, and the second samples multiple answers and selects the most frequent one. Both are discussed in this chapter. The third method, in which the model iteratively refines its own response, is introduced in the next chapter.

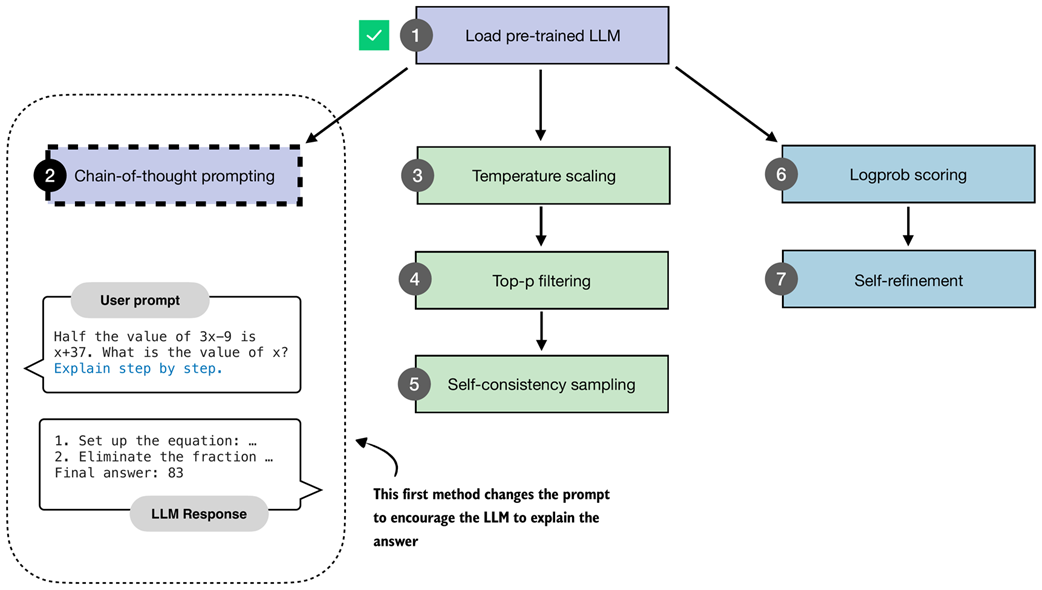

The first inference-time method, chain-of-thought prompting, modifies the prompt to encourage the model to explain its reasoning step by step before producing a final answer.
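As a rough illustration of the prompt modification described above, the sketch below appends a step-by-step cue to a prompt. The helper name `add_chain_of_thought` and the example question are assumptions made for illustration only; the chapter's own code may format prompts differently.

```python
def add_chain_of_thought(prompt: str, suffix: str = "Explain step by step.") -> str:
    # Hypothetical helper: append a chain-of-thought cue to the prompt.
    # The default suffix is the example phrase mentioned in the chapter overview.
    return f"{prompt.rstrip()}\n\n{suffix}"


# Made-up example question
prompt = "What is 12 * 7 + 5?"
cot_prompt = add_chain_of_thought(prompt)
print(cot_prompt)
```

The model and the decoding code stay unchanged; only the input text differs, which is why this is the cheapest of the inference-time methods to try.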

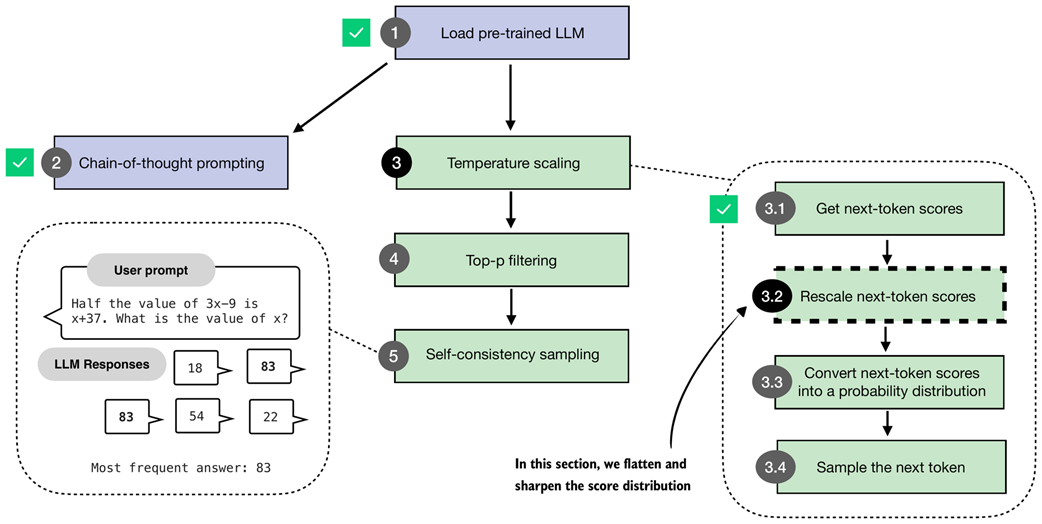

The second inference-time method, self-consistency sampling, generates multiple answers and selects the most frequent one. This method relies on temperature scaling, covered in this section, which influences how the model samples its next token.

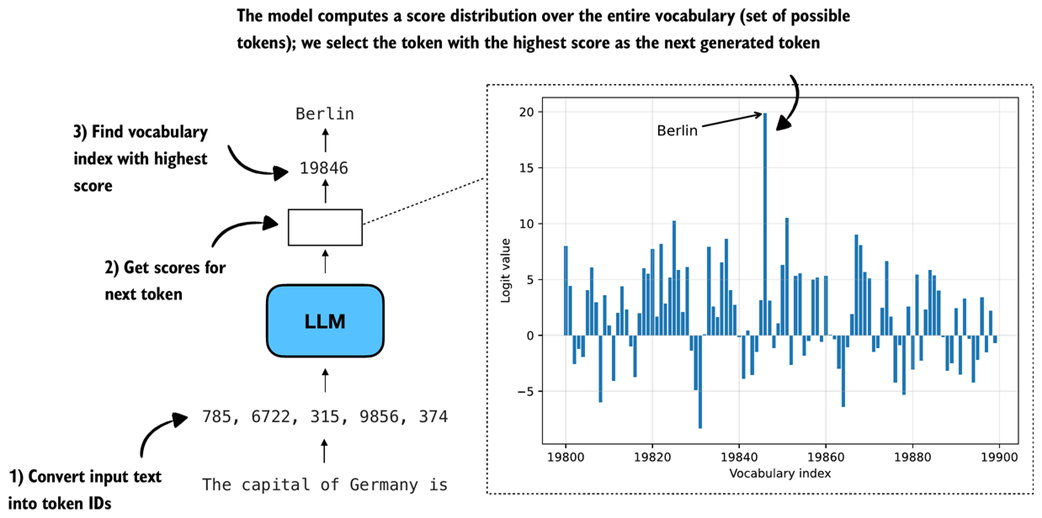

Illustration of how an LLM generates the next token. The model converts the input into token IDs, computes scores for all possible next tokens, and selects the one with the highest score as the next output.

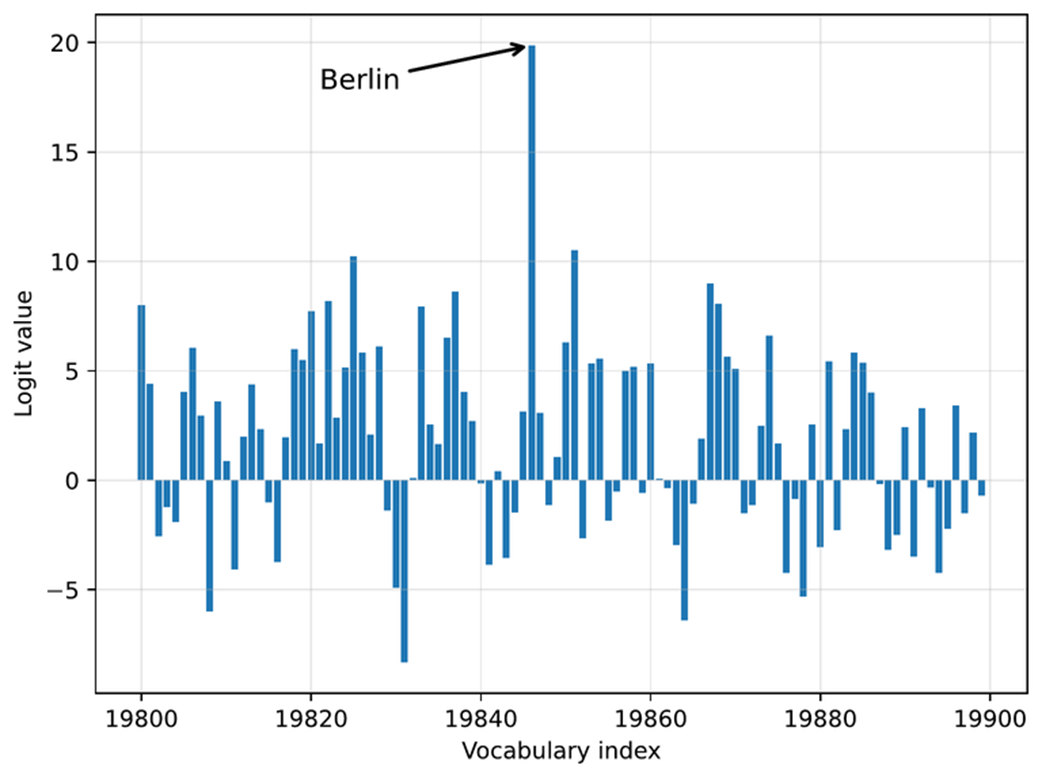

Example of next-token logits for a language model. Each bar represents a possible token's score, with "Berlin" having the highest logit value and being selected as the next token.
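The greedy selection shown in the two figures above can be sketched in a few lines of plain Python. The vocabulary and logit values here are made up for illustration; a real model produces one score per token in a much larger vocabulary.

```python
# Made-up vocabulary and next-token logits (illustrative values only)
vocab = [" Paris", " Berlin", " Rome", " the", " a"]
logits = [2.1, 5.3, 1.7, 0.4, -0.2]


def greedy_next_token(logits):
    # Return the index of the highest-scoring token (argmax).
    return max(range(len(logits)), key=lambda i: logits[i])


next_id = greedy_next_token(logits)
print(vocab[next_id])
```

Because the argmax is deterministic, repeating this step always yields the same output for the same prompt, which is why the sampling techniques that follow are needed to obtain diverse answers.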

In this section, we implement the core part of temperature scaling (step 3.2), which adjusts the next-token scores. This allows us to control how confidently the model selects its next token in later steps.

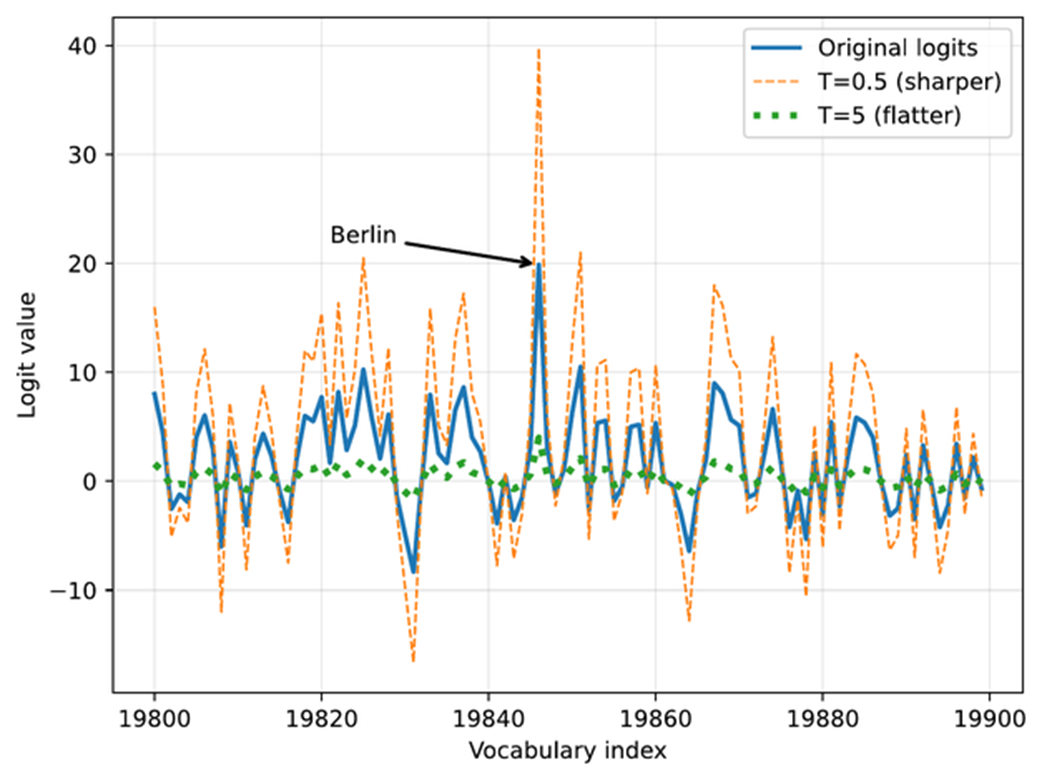

The effect of temperature scaling on logits. Lower temperatures make the distribution sharper, while higher temperatures flatten it. (Please note that this visualization is shown as a line plot for readability, though a bar plot would more accurately represent the discrete vocabulary scores.)
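A minimal sketch of the rescaling step, assuming the common formulation in which each logit is divided by the temperature. The function name `scale_logits` and the example values are illustrative and not taken from the chapter's code.

```python
def scale_logits(logits, temperature):
    # Divide every logit by the temperature.
    # temperature < 1 widens the gaps between scores (sharper distribution);
    # temperature > 1 shrinks them (flatter distribution).
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    return [x / temperature for x in logits]


logits = [2.0, 4.0, 1.0]  # made-up scores
sharper = scale_logits(logits, 0.5)
flatter = scale_logits(logits, 2.0)
print(sharper)
print(flatter)
```

Scaling alone does not change which token has the highest score; its effect only becomes visible once the scores are converted to probabilities and sampled.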

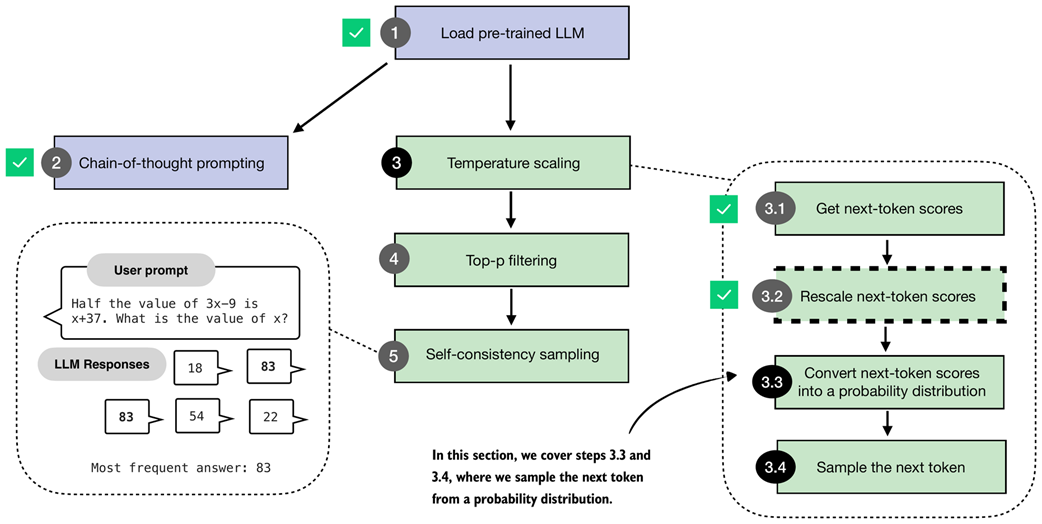

Overview of the sampling process for generating tokens. In this section, we focus on steps 3.3 and 3.4, where the next-token scores are converted into a probability distribution, and the next token is sampled based on that distribution.

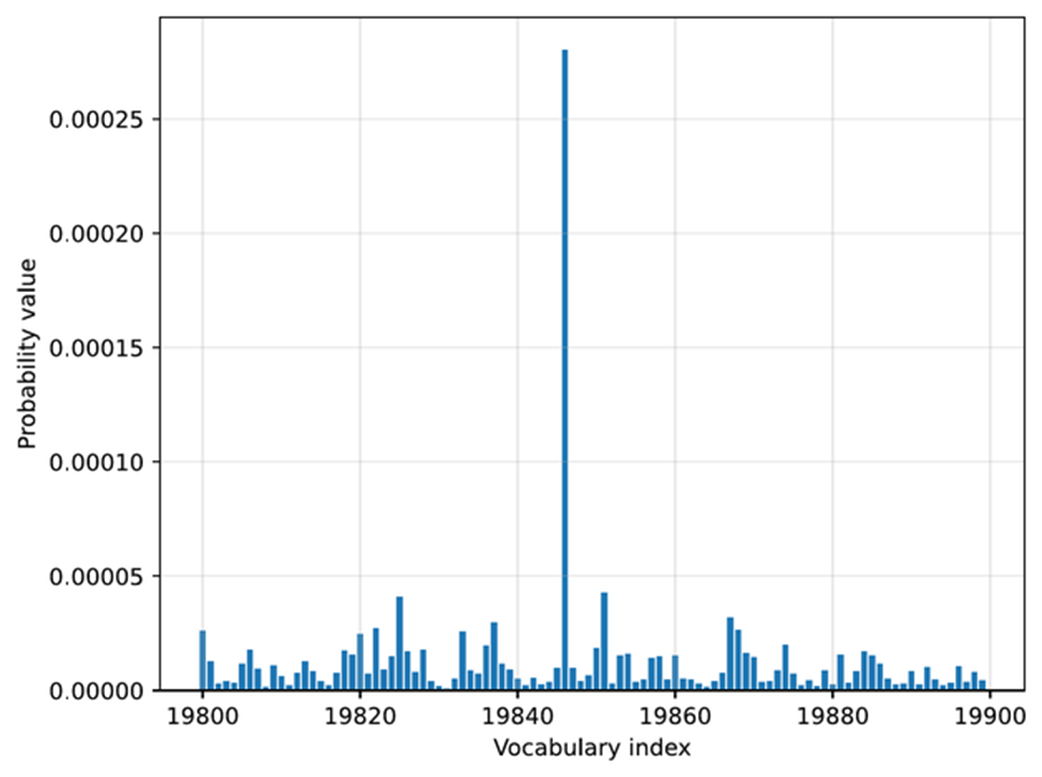

Token probabilities obtained by applying the softmax function to the rescaled logits. The token with the highest probability (corresponding to " Berlin", but with the label omitted for code simplicity) is the most likely to be selected as the next output.
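The two steps described above, converting scores into probabilities and then drawing a token from them, might look as follows in plain Python. This is a sketch using the standard library instead of a tensor library; `softmax` and `sample_token` are illustrative names, and the logits are made up.

```python
import math
import random


def softmax(logits):
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(probs, rng):
    # Multinomial draw: each index is chosen with its own probability.
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


rng = random.Random(123)  # fixed seed so the run is reproducible
probs = softmax([2.1, 5.3, 1.7, 0.4, -0.2])
samples = [sample_token(probs, rng) for _ in range(1000)]
print([round(p, 3) for p in probs])
print("share of draws for the top token:", samples.count(1) / len(samples))
```

Unlike the greedy argmax, the draw occasionally picks a lower-probability token, which is the source of diversity that self-consistency relies on.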

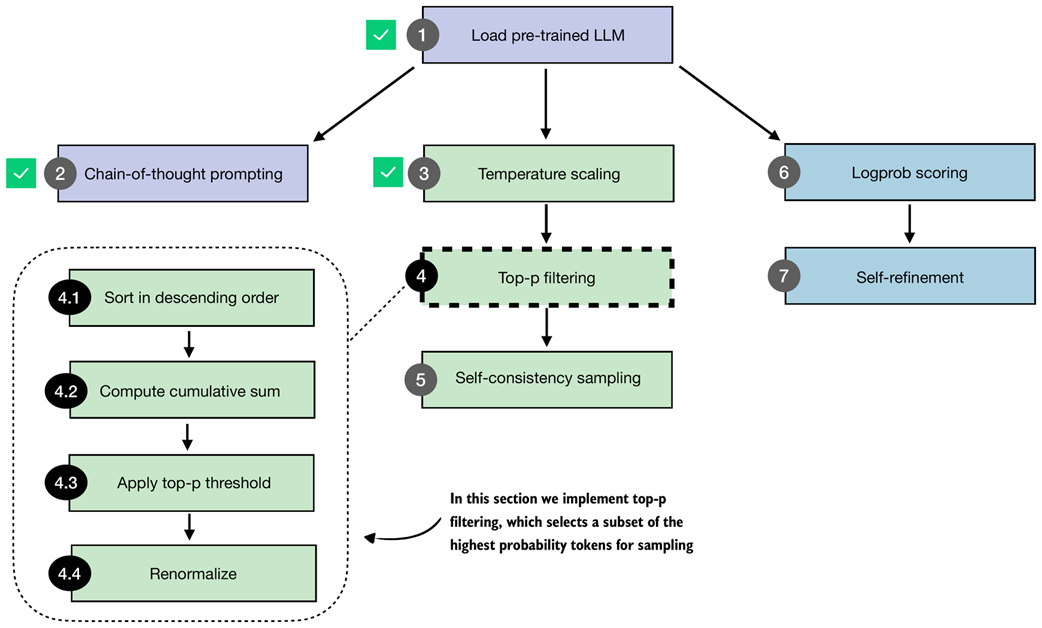

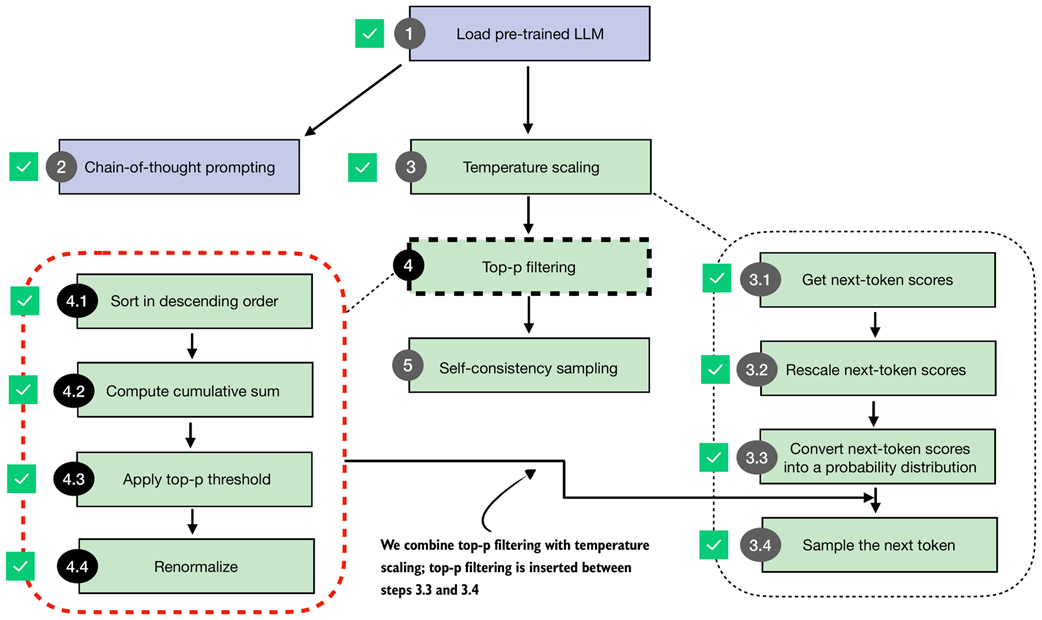

Overview of the top-p filtering process. The filter keeps only the highest-probability tokens by sorting them, applying a cumulative cutoff, selecting the top-p subset, and renormalizing the result.

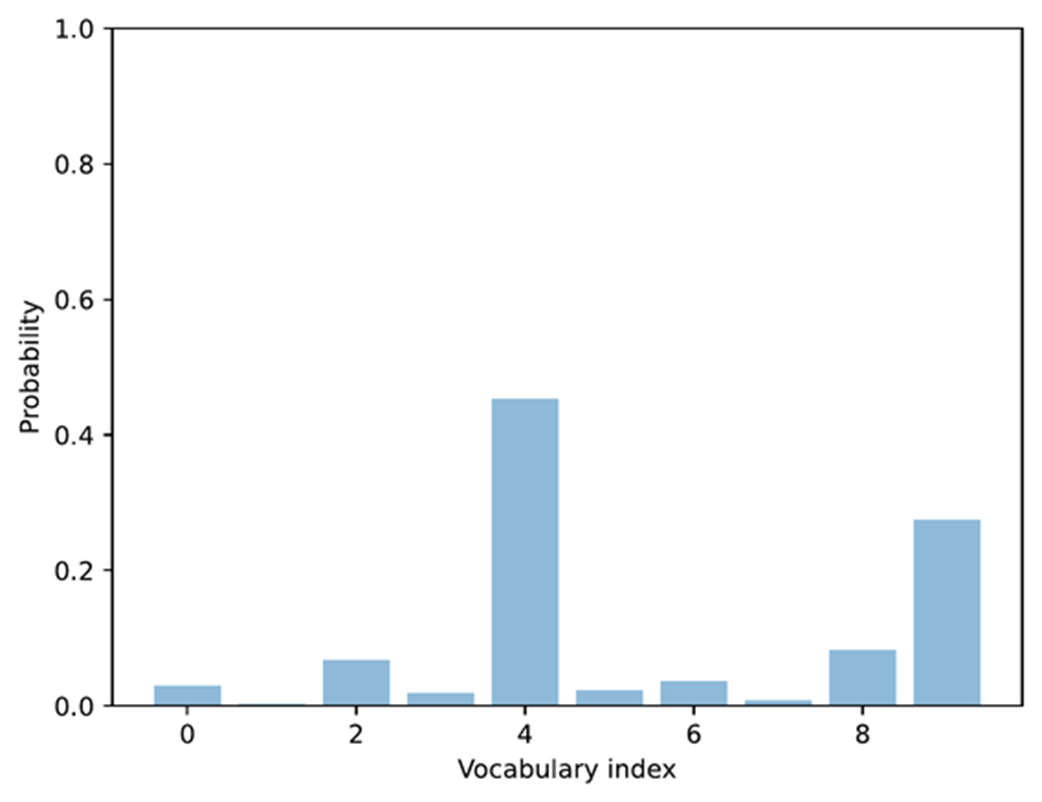

Example of token probabilities before top-p filtering. The distribution includes many low-probability tokens, which will later be truncated by applying a cumulative probability threshold.

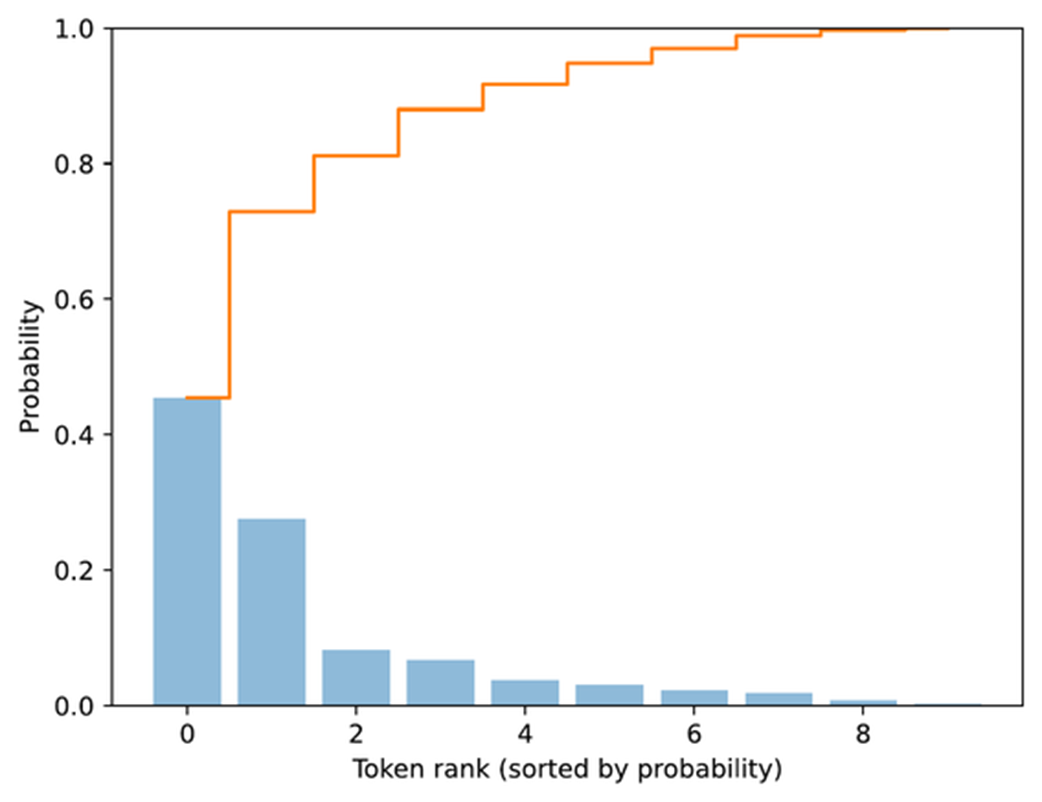

Visualization of sorted token probabilities and their cumulative sum. This step prepares for top-p filtering by showing how probabilities accumulate when ordered from highest to lowest, which helps determine where to set the cutoff threshold.

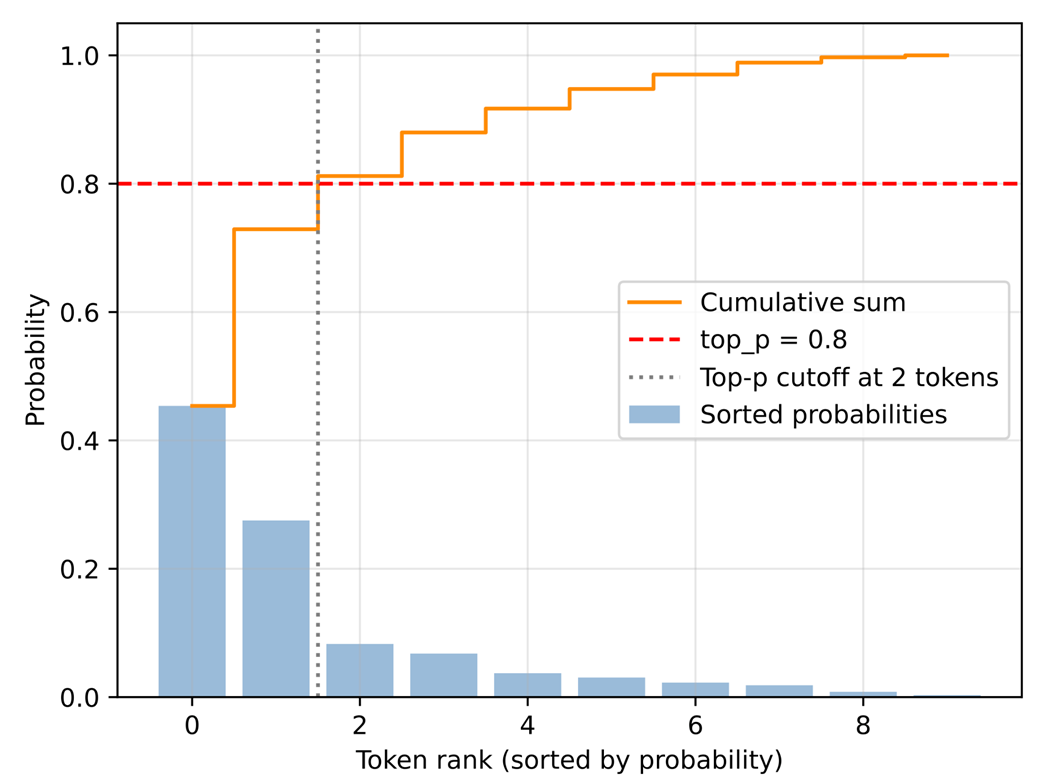

Illustration of top-p (nucleus) filtering. Tokens are sorted by probability, and the smallest subset whose cumulative probability exceeds the threshold (p = 0.8) is kept for sampling.
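The sort, cumulative cutoff, and renormalization steps can be sketched as below. This is one possible reading of the procedure in the captions, written with plain lists; the name `top_p_filter` and the probabilities are assumptions for illustration.

```python
def top_p_filter(probs, top_p):
    # Sort token indices from highest to lowest probability.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)

    # Keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # Zero out everything else and renormalize the kept tokens to sum to 1.
    total = sum(probs[i] for i in kept)
    filtered = [0.0] * len(probs)
    for i in kept:
        filtered[i] = probs[i] / total
    return filtered


probs = [0.04, 0.5, 0.12, 0.28, 0.06]  # made-up distribution
filtered = top_p_filter(probs, top_p=0.8)
print([round(p, 3) for p in filtered])
```

With these values, the two most likely tokens together reach only 0.78, so a third token is kept before the 0.8 threshold is crossed; the two least likely tokens can no longer be sampled.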

Integration of top-p filtering with temperature scaling. After rescaling the next-token scores, top-p filtering is applied between steps 3.3 and 3.4 to limit sampling to the most probable tokens.
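To make the ordering of the steps concrete, the following compact sketch chains them in one function: temperature scaling (step 3.2), softmax (step 3.3), top-p filtering, and sampling (step 3.4). The function name `sample_next_token` and its default values are assumptions for illustration, not the chapter's actual generation function.

```python
import math
import random


def sample_next_token(logits, temperature=0.8, top_p=0.9, rng=random):
    # Step 3.2: rescale the logits by the temperature.
    scaled = [x / temperature for x in logits]

    # Step 3.3: convert the scores into probabilities (stable softmax).
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Top-p filtering: keep the smallest high-probability prefix.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # Step 3.4: sample among the kept tokens.
    # random.choices normalizes the weights, so no explicit renormalization is needed.
    weights = [probs[i] for i in kept]
    return rng.choices(kept, weights=weights, k=1)[0]


logits = [2.1, 5.3, 1.7, 0.4, -0.2]  # made-up scores
print(sample_next_token(logits, rng=random.Random(0)))
```

Setting `top_p` very low keeps only the single most likely token, so the function then behaves like greedy decoding.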

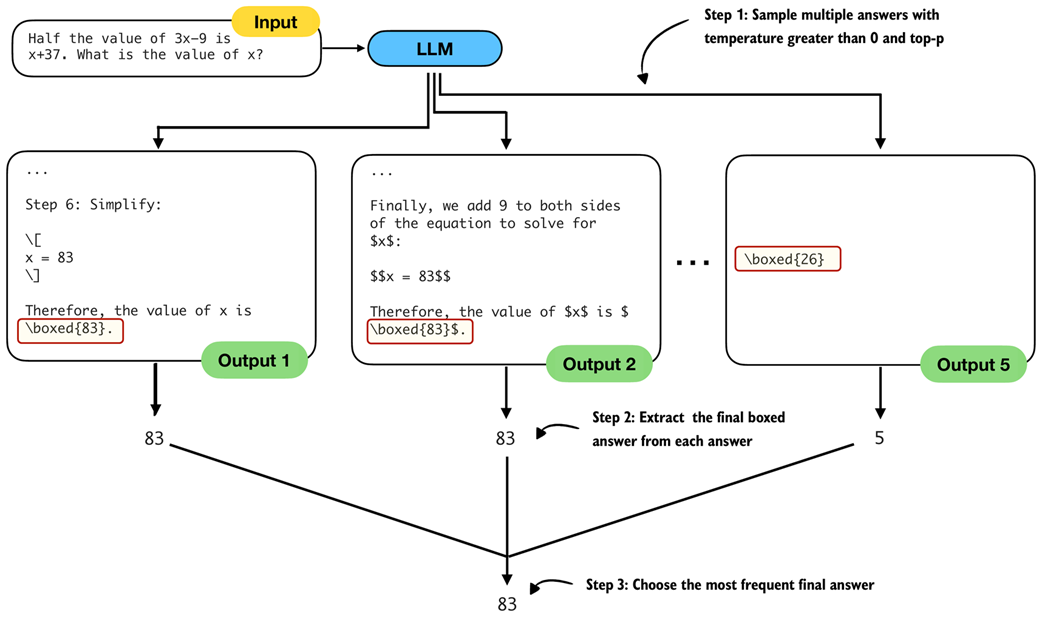

The self-consistency sampling method generates multiple responses from the LLM and selects the most frequent answer, which improves answer accuracy through majority voting across these sampled responses.

The three main steps for implementing self-consistency sampling. First, we sample multiple answers for the same prompt, using a temperature greater than zero and top-p filtering so that the answers differ. Second, we extract the final boxed answer from each generated solution. Third, we select the most frequently extracted answer as the final prediction.
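The extraction and voting steps can be sketched as follows. The sampled responses are hard-coded, made-up strings standing in for model outputs, and `extract_boxed` and `majority_vote` are illustrative names. The regular expression is a simplification that assumes the boxed answer contains no nested braces.

```python
import re
from collections import Counter


def extract_boxed(text):
    # Return the content of the last \boxed{...} in the text, or None.
    # Simplification: nested braces such as \boxed{\frac{1}{2}} are not handled.
    matches = re.findall(r"\\boxed\{([^{}]*)\}", text)
    return matches[-1].strip() if matches else None


def majority_vote(answers):
    # Ignore responses without an extractable answer.
    valid = [a for a in answers if a is not None]
    if not valid:
        return None
    # On a tie, Counter.most_common returns the answer that appeared first.
    return Counter(valid).most_common(1)[0][0]


# Step 1 (stand-in): pretend these were sampled with temperature > 0 and top-p
responses = [
    "... so the result is \\boxed{42}",
    "Adding up gives \\boxed{41}",
    "Therefore \\boxed{42}",
    "I am not sure.",
    "The answer is \\boxed{42}",
]

answers = [extract_boxed(r) for r in responses]  # step 2: extract
final = majority_vote(answers)                   # step 3: vote
print(answers)
print(final)
```

The response without a boxed answer is simply dropped from the vote here, which illustrates why the method depends on answers being extractable; a production version would also need an explicit tie-breaking policy.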

Summary of this chapter's focus on inference-time techniques. Here, the text generation function was extended with a voting-based method to improve answer accuracy. The next chapter introduces self-refinement, in which the model iteratively improves its responses.

Summary

- Reasoning abilities and answer accuracy can be improved without retraining the model by increasing compute at inference time (inference-time scaling).

- This chapter focuses on two such techniques: chain-of-thought prompting and self-consistency; a third method, self-refinement, which was briefly described, will be covered in the next chapter.

- A flexible text generation wrapper (generate_text_stream_concat_flex) allows different sampling strategies to be plugged in without changing the surrounding code.

- Next tokens are chosen from a probability distribution obtained by applying softmax to the logits.

- Temperature scaling changes logits to control the diversity of the generated text.

- Top-p (nucleus) sampling filters out low-probability tokens to reduce the chance of generating nonsensical answers.

- Chain-of-thought prompting (for example, appending "Explain step by step." or a similar cue) often yields more accurate answers by encouraging the model to write out intermediate reasoning, though it increases the number of generated tokens and thus the runtime cost.

- Self-consistency sampling generates multiple answers, extracts the final boxed result from each, and selects the most frequent answer via majority vote to improve the answer accuracy.

- Experiments on the MATH-500 dataset show that combining chain-of-thought prompting with self-consistency can substantially boost accuracy compared to the baseline without sampling, at the cost of much longer runtimes.

- The central trade-off of inference-time scaling: higher accuracy in exchange for more compute.

Build a Reasoning Model (From Scratch) ebook for free
