Overview

1 AI Engineering - The Blueprint

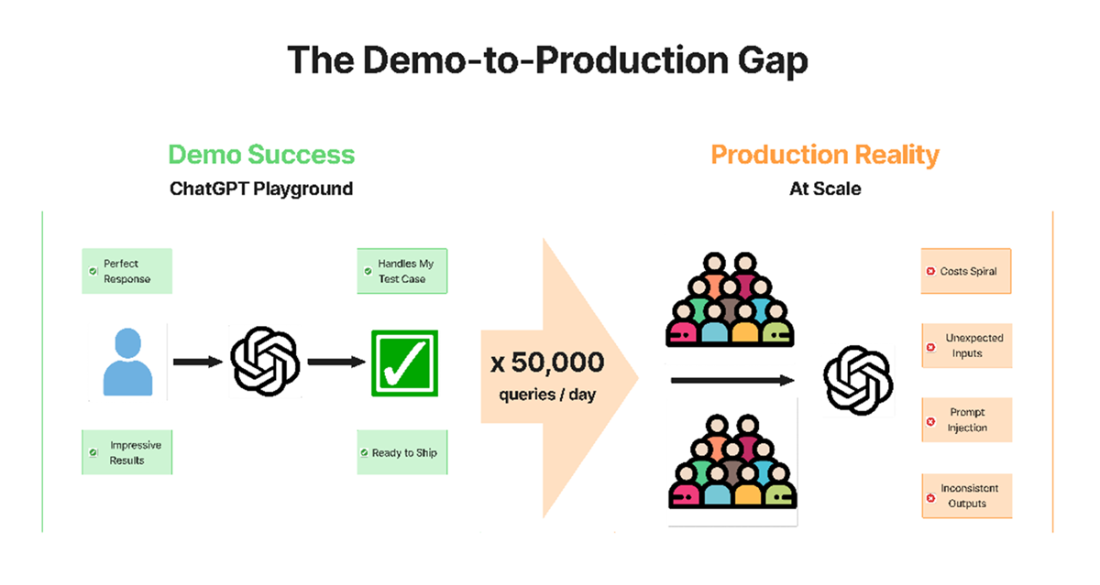

This chapter defines AI Engineering as the disciplined extension of software engineering to problems involving modern AI, clearly separating it from Prompt Engineering. Where prompt techniques help you communicate with models, AI Engineering delivers production reliability by adding architecture, validation, and operations. The narrative contrasts a chatbot failure that arose from weak engineering practices with large-scale successes that paired models with rigorous routing, grounding, guardrails, and monitoring. It also highlights the demo-to-production gap: prototypes that look good in isolation often collapse under real-world constraints like latency, cost, scale, security, and policy compliance.

The chapter presents a practical blueprint built from five cooperating layers. Prompt routing steers each request to the most appropriate (and cost-effective) model; Retrieval Augmented Generation grounds answers in authoritative sources via semantic search; structured Prompt Engineering standardizes behavior and output formats; agents handle multi-step, tool-using workflows; and operational infrastructure provides evaluation, monitoring, security, and lifecycle management. A step-by-step walkthrough of a customer support query shows how routing curbs spend, RAG reduces hallucinations, structured prompts enforce tone and format, automated validation checks compliance and citations, and confidence scoring triggers human escalation—producing fast, accurate, and auditable outcomes.
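The routing step in this walkthrough can be sketched as a rule-based classifier. This is a minimal illustration under stated assumptions, not the book's implementation:

- The two model tiers (`small-fast-model`, `large-reasoning-model`) are hypothetical.
- Their per-token prices are made up.
- The keyword list is hand-picked.

A production router would more likely score complexity with a trained intent classifier or a small model.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str                  # hypothetical model identifier
    cost_per_1k_tokens: float  # illustrative price, not a real quote


CHEAP = ModelTier("small-fast-model", 0.0005)
PREMIUM = ModelTier("large-reasoning-model", 0.01)

# Assumed signals that a request needs deeper reasoning or carries more risk.
COMPLEX_MARKERS = {"refund", "dispute", "legal", "compare", "why"}


def route(query: str, max_simple_words: int = 30) -> ModelTier:
    """Send short, routine queries to the cheap tier; long or risky ones to the premium tier."""
    words = re.findall(r"[a-z']+", query.lower())
    is_long = len(words) > max_simple_words
    is_complex = any(word in COMPLEX_MARKERS for word in words)
    return PREMIUM if is_long or is_complex else CHEAP


def estimated_cost(tier: ModelTier, tokens: int) -> float:
    """Approximate price of a single call with the given token count."""
    return tier.cost_per_1k_tokens * tokens / 1000


if __name__ == "__main__":
    for q in ("What are your opening hours?",
              "Why was my card charged twice, and can I dispute it?"):
        tier = route(q)
        print(f"{tier.name:<22} ${estimated_cost(tier, 800):.4f}  {q}")
```

Routing on cheap signals keeps the routing decision itself inexpensive. Queries that are misrouted to the cheap tier can then be caught by the later validation and confidence checks and retried on the premium tier.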

Beyond architecture, the chapter offers decision rules and diagnostics. Simple prompting is sufficient for low-stakes, human-reviewed tasks; AI Engineering is warranted when outputs integrate with systems, must be consistent, carry risk, need to scale economically, or face adversarial inputs. Failures map to missing layers: cost overruns indicate routing gaps, hallucinations point to absent grounding, inconsistency suggests prompt issues, policy violations reveal weak validation, multi-step breakdowns need better workflows or agents, and injection incidents call for stronger security. The chapter closes by outlining a learning path from robust prompting to end-to-end production systems, equipping you with a reusable mental model to build reliable, scalable, and cost-efficient AI applications.
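The decision rules and failure diagnostics in this paragraph are compact enough to encode directly. The sketch below is illustrative only. The field names and symptom labels are hypothetical shorthand for the conditions the chapter lists. In practice these judgments come from monitoring data and review rather than hand-set booleans.

```python
from dataclasses import astuple, dataclass


@dataclass
class TaskProfile:
    """Yes/no answers to the decision rules above (field names are illustrative)."""
    feeds_other_systems: bool = False
    needs_consistency: bool = False
    carries_risk: bool = False
    must_scale_economically: bool = False
    faces_adversarial_input: bool = False


def needs_ai_engineering(task: TaskProfile) -> bool:
    """Any single condition is enough to move beyond simple, human-reviewed prompting."""
    return any(astuple(task))


# Failure symptom -> layer that is probably missing, as listed in the chapter.
DIAGNOSTICS: dict[str, str] = {
    "cost_overrun": "prompt routing",
    "hallucination": "grounding (RAG)",
    "inconsistency": "prompt engineering",
    "policy_violation": "validation",
    "multi_step_breakdown": "workflows or agents",
    "prompt_injection": "security",
}


def layers_to_review(symptoms: list[str]) -> list[str]:
    """Map observed symptoms to layers, keeping first-seen order and dropping duplicates."""
    layers: list[str] = []
    for symptom in symptoms:
        if symptom not in DIAGNOSTICS:
            raise KeyError(f"unknown symptom: {symptom!r}")
        layer = DIAGNOSTICS[symptom]
        if layer not in layers:
            layers.append(layer)
    return layers


if __name__ == "__main__":
    internal_draft = TaskProfile()
    support_bot = TaskProfile(carries_risk=True, faces_adversarial_input=True)
    print(needs_ai_engineering(internal_draft), needs_ai_engineering(support_bot))
    print(layers_to_review(["hallucination", "cost_overrun", "hallucination"]))
```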

Summary

- Ad-hoc prompting collapses at production scale - Air Canada's chatbot hallucinated policies costing $3.2M, while Klarna's engineered system handled 2.3M conversations monthly through systematic architecture, not better prompts.

- The demo-to-production gap emerges at scale - single-case success fails when serving thousands daily, exposing edge cases, context limits, cost explosions, and security vulnerabilities invisible in testing.

- Even simple tasks hide engineering complexity - product descriptions need parameterized templates, structured schemas, validation frameworks, and performance monitoring to sustain quality beyond initial demos.

- Production reliability comes from layered defenses - routing cuts costs 60–80%, RAG reduces hallucinations through verified grounding, and validation catches errors like the $3,650 in unauthorized gift cards promised to 73 customers.

- Behind successful interactions lies invisible infrastructure - Sarah's two-minute payment resolution required routing, knowledge retrieval, synthesis guardrails, validation, and confidence scoring that simpler approaches cannot provide.

- This blueprint transforms isolated techniques into production systems - you'll build architectures that prevent failures like Air Canada's while achieving Klarna's scale, handling thousands of daily interactions with measurable reliability.
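The product-description point in this summary (parameterized templates, structured schemas, validation) can be sketched in Python. This is a minimal illustration, not the book's implementation. The following are all assumptions:

- the JSON shape;
- the length and bullet-count limits;
- the cross-field rule;
- the `call_model` callable, a stand-in for whatever model client you use.

Validation uses hand-written checks rather than a schema library so that the example stays dependency-free.

```python
import json
from string import Template
from typing import Callable

# Parameterized prompt template; the JSON shape and limits are illustrative assumptions.
PROMPT = Template(
    "Write a product description for $name ($category). Respond with JSON only, shaped as "
    '{"title": string, "description": string, "bullet_points": [string]}, '
    "with 3 to 5 bullet points and a description under $max_chars characters."
)


class OutputRejected(ValueError):
    """Raised when model output fails a schema, range, or cross-field check."""


def validate(raw: str, name: str, max_chars: int) -> dict:
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise OutputRejected(f"invalid JSON ({exc.msg})") from exc
    if not isinstance(data, dict):
        raise OutputRejected("expected a JSON object")
    # Schema checks: required fields with the right types.
    for key, expected in (("title", str), ("description", str), ("bullet_points", list)):
        if not isinstance(data.get(key), expected):
            raise OutputRejected(f"missing or mistyped field '{key}'")
    bullets = data["bullet_points"]
    if not all(isinstance(b, str) and b.strip() for b in bullets):
        raise OutputRejected("bullet points must be non-empty strings")
    # Range checks.
    if len(data["description"]) > max_chars:
        raise OutputRejected("description too long")
    if not 3 <= len(bullets) <= 5:
        raise OutputRejected("need 3 to 5 bullet points")
    # Cross-field check: the title must name the product it describes.
    if name.lower() not in data["title"].lower():
        raise OutputRejected("title does not mention the product name")
    return data


def generate(name: str, category: str, call_model: Callable[[str], str],
             max_chars: int = 400, max_attempts: int = 3) -> dict:
    """Fill the template, call the model, and retry with feedback until the output validates."""
    base_prompt = PROMPT.substitute(name=name, category=category, max_chars=max_chars)
    prompt, errors = base_prompt, []
    for attempt in range(1, max_attempts + 1):
        try:
            return validate(call_model(prompt), name, max_chars)
        except OutputRejected as exc:
            errors.append(f"attempt {attempt}: {exc}")
            prompt = f"{base_prompt}\n\nYour previous answer was rejected: {exc}. Try again."
    raise OutputRejected("; ".join(errors))


if __name__ == "__main__":
    # Stand-in for a real model client: first reply is chatty prose, second is valid JSON.
    replies = iter([
        "Sure! Here's a great description...",
        json.dumps({"title": "TrailLite Backpack", "description": "A light 20 L daypack.",
                    "bullet_points": ["Water-resistant shell", "Padded laptop sleeve",
                                      "Weighs under 1 kg"]}),
    ])
    print(generate("TrailLite Backpack", "outdoor gear", lambda _prompt: next(replies)))
```

Feeding the rejection reason back into the retry prompt gives the model a concrete correction target. Capping the number of attempts stops a persistently failing request from consuming budget. The final exception is a natural place to log the failure or hand the request to a human.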

FAQ

What is AI Engineering?

AI Engineering is software engineering that incorporates modern AI (LLMs, embeddings, vector databases) to solve problems involving unstructured data such as text, images, or audio. It applies the same disciplines as traditional engineering (architecture, testing, error handling, monitoring) while extending them with AI-specific patterns like retrieval, routing, validation, and structured generation.

How is AI Engineering different from Prompt Engineering?

Prompt Engineering focuses on communicating effectively with language models. AI Engineering builds production systems around those prompts: routing requests to the right models, grounding outputs with retrieval, validating and monitoring quality, integrating with APIs and databases, and managing cost, latency, and reliability at scale.

When do I need AI Engineering instead of simple prompting?

Use AI Engineering when:

- Outputs feed systems (databases, workflows, APIs) and need structure

- Consistent quality is required across thousands of users

- Failures have consequences (customer-facing, financial, legal/medical)

- Cost matters at scale and must be optimized

- Security risks exist (prompt injection, data exfiltration)

What are the five architectural layers in the blueprint?

- Prompt Routing: Sends each query to the most suitable, cost-effective resource

- Retrieval Augmented Generation (RAG): Grounds responses in authoritative sources

- Prompt Engineering: Structures instructions, context, and output formats

- Autonomous Agents: Orchestrate multi-step, tool-using workflows

- Operational Infrastructure: Evaluation, monitoring, security, and lifecycle management

What is the demo-to-production gap?

Prototype prompts often work on single examples but break at scale. Production requires architecture to:

- Ensure consistent quality and tone

- Manage context limits and edge cases

- Control latency and throughput

- Optimize cost per query

- Add validation, testing, logging, and monitoring

How does prompt routing reduce cost and improve reliability?

Routing analyzes intent and complexity, sending simple requests to cheaper, faster models and complex ones to advanced models. Typical results:

- 60–80% cost reduction by avoiding unnecessary premium-model calls

- Lower latency for simple queries

- More predictable budgets and capacity planning

How does Retrieval Augmented Generation (RAG) cut hallucinations?

RAG retrieves relevant, authoritative documents (policies, product data, manuals) and injects them into the prompt. Benefits:

- Factual grounding with citations

- Current, domain-specific information instead of relying only on the model's training data

- Lower error rates and fewer invented policies or details

What validation and monitoring do production AI systems need?

Examples include:

- Policy compliance checks against source documents

- Hallucination detection (LLM-as-a-judge or rule-based assertions)

- Tone/brand voice verification

- Citation existence and quote accuracy checks

- Confidence scoring with human escalation below thresholds

- Continuous evaluation for drift, latency, and cost

How do I scale a good prompt into a production pipeline?

Move from ad-hoc prompting to an engineered flow:

- Parameterize templates and enforce structured outputs (e.g., JSON schemas)

- Add validation (schema, range, cross-field rules) and retry logic

- Integrate with databases/APIs for automated insertion and workflows

- Use routing to manage costs and context limits

- Monitor quality, latency, and spend continuously

What outcomes can this blueprint deliver in practice?

Organizations report:

- Large cost savings via routing and automation

- Faster response/resolution times with consistent quality

- Reduced hallucinations through RAG and validation layers

- Higher customer satisfaction and lower manual workload

- Safe scaling with observability, guardrails, and human-in-the-loop where needed
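The guardrails and human-in-the-loop escalation in these outcomes rely on checks from the validation list earlier in this FAQ, namely citation existence and confidence scoring with a threshold. Below is a minimal sketch that assumes the system already has the retrieved source passages and some confidence score in [0, 1]. The class names and the 0.8 threshold are illustrative choices, not the book's method. The same goes for the normalized exact-substring match used as a crude quote-accuracy test; a real system might use fuzzy matching or an LLM-as-a-judge instead.

```python
from dataclasses import dataclass, field


@dataclass
class Draft:
    answer: str
    quotes: list[str]   # passages the answer presents as quoted from sources
    confidence: float   # assumed score in [0, 1] from whatever scorer the system uses


@dataclass
class Decision:
    action: str                                   # "send" or "escalate"
    reasons: list[str] = field(default_factory=list)


def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so line breaks do not break matching."""
    return " ".join(text.split()).lower()


def review(draft: Draft, sources: list[str], threshold: float = 0.8) -> Decision:
    """Escalate to a human if any quote is unsupported or confidence is below threshold."""
    corpus = [_normalize(s) for s in sources]
    reasons = [
        f"quote not found in sources: {q!r}"
        for q in draft.quotes
        if not any(_normalize(q) in doc for doc in corpus)
    ]
    if draft.confidence < threshold:
        reasons.append(f"confidence {draft.confidence:.2f} below {threshold:.2f}")
    return Decision("escalate" if reasons else "send", reasons)


if __name__ == "__main__":
    policy = ["Refund requests are accepted within 30 days of purchase."]
    grounded = Draft("You can request a refund within 30 days.",
                     ["refund requests are accepted within 30 days"], 0.92)
    invented = Draft("We'll add a $50 gift card.", ["a $50 gift card for any delay"], 0.95)
    print(review(grounded, policy).action, review(invented, policy).action)
```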

AI Engineering in Practice ebook for free
